Baby Talk

By Darshana Narayanan

Infants are born with the ability to babble and cry in the accents of their mothers through a combination of neurological, physical, and environmental responses.


Some restless infants don’t wait for birth to let out their first cry. They cry in the womb, a rare but well-documented phenomenon called vagitus uterinus (from the Latin word vagire, meaning to wail). Legend has it that Bartholomew the Apostle cried out in utero. The Iranian prophet Zarathustra is said to have been “noisy” before his birth. Accounts of vagitus uterinus appear in writings from Babylon, Assyria, ancient Greece, Rome, and India.

The obstetrician Malcolm McLane described an incident that occurred in a hospital in the United States in 1897. As he was prepping a patient for a cesarean section, her unborn baby began to wail and kept going for several minutes—prompting an attending nurse to drop to her knees, hands clasped in prayer. Yet another child is said to have cried a full 14 days before birth. In 1973 doctors in Belgium recorded the vitals of three wailing fetuses and concluded that vagitus uterinus is not a sign of distress. An Icelandic saga indicates that the phenomenon has been observed in other animals—“the whelps barked within the wombs of the bitches”; in Icelandic lore, vagitus uterinus in dogs foretells great events to come.

Air is necessary for crying. The coordinated movements of muscles in the stomach and rib cage force air out of the lungs and up through the vocal cords—two elastic curtains pulled over the top of the trachea—causing them to vibrate and produce a buzz-like sound. These sound waves then pass through the mouth, where precise motions of the jaws, lips, and tongue shape them into the vocal signals that we recognize—in this case, the rhythmic sounds of a cry.

Vagitus uterinus occurs—always in the last trimester—when there’s a tear in the uterine membrane. The tear lets air into the uterine cavity, thus enabling the fetus to vocalize. Vagitus uterinus provided scientists with some of the earliest insights into the fetus’s vocal apparatus, showing that the body parts and neural systems involved in the act of crying are fully functional before birth.


Loud, shrill, and penetrating—a baby’s cry is its first act of communication. It is a simple adaptation that makes it less likely that the baby’s needs will be overlooked. And babies aren’t just crying for attention. While crying, they are practicing the melodies of speech. In fact, newborns cry in the accents of their mothers. They make vowel-like sounds, growl, and squeal—these protophones are sounds that eventually turn into speech.

Babies communicate as soon as they are born. Rigorous analyses of the developmental origins of these behaviors reveal that, contrary to popular belief—even among scientists—they are not hardwired into our brain structures or preordained by our genes. Instead, the latest research—including my own—shows that these behaviors self-organize in utero through the continuous dance between brain, body, and environment.

In the beginning, there is nothing. The world is formless and empty; darkness lies upon the amniotic waters. The embryo, still too young to be called a fetus, floats inside the amniotic sac of the uterus. It sees nothing and cannot hear, smell, or taste. It feels nothing—no heat, cold, pressure, or pain. It cannot sense its own position or orientation. It cannot move.

In the seventh week of pregnancy, the embryo—C-shaped with a big head and tiny tail, eyes, ears, mouth, trunk, and webbed limbs—begins to move. It measures about 10 millimeters from crown to rump—the size of a grape—and its heart, lungs, brain, and spinal cord are forming. In that same week, the embryo begins to feel touch, which is the first of its senses to gain function. The world is no longer formless or empty.

Movements trigger sensations, and sensations trigger movements. Through this cycle of sensorimotor activity, the embryo begins to discover its body and its world. It also begins to develop vocal behavior.

The first film of a living human fetus was made in January 1933 by Davenport Hooker, an anatomist at the University of Pittsburgh. We now consider it natural to observe the fetus in its home environment, but ultrasound technologies are fairly young. Originally used to detect welding flaws in pipes, ultrasound was first applied to imaging a fetus in the late 1950s by Ian Donald, an obstetrician in Glasgow. Real-time ultrasound scans have been around for only about 50 years, since the 1970s.

Hooker wanted to study the developmental sequence of human sensorimotor activity. Scientists had done similar studies on a range of other species: salamanders, toadfish, terrapin, loggerhead turtles, pigeons, chickens, rats, cats, sheep, and guinea pigs. Hooker had no way to look inside a human womb, so in 1933 he arranged with a local hospital to film prematurely delivered or surgically aborted embryos or fetuses—all of which were derived from clinically advised operations—before they died. At the time, both the medical community and the general public viewed aborted fetuses as acceptable subjects for nontherapeutic research. The anthropologist Lynn M. Morgan observes in her 2009 book, Icons of Life: A Cultural History of Human Embryos: “It is not that Hooker, his colleagues, or his audience de-humanized the fetus . . . they had never humanized fetuses to begin with.”


Early on, Hooker realized that fetal movements are too rapid to be studied accurately unless the same movement can be viewed over and over again, as with film. So he purchased a surplus World War I motion picture camera and modified it to free his hands, replacing the crank with a foot pedal. Hooker then used strands of thin human hair or thicker horse hair to lightly stroke the mouth, palms, trunk, limbs, and back to elicit reflex responses.

Over a period of 25 years, Hooker and his colleague Tryphena Humphrey, then an assistant professor of neuroanatomy at the University of Pittsburgh, filmed 149 fetuses, some of whom were spontaneously aborted (the medical term for miscarriage) and others electively aborted for “the health, sanity, or life of the mother,” as Hooker wrote.

Hooker and Humphrey found that very young embryos showed no response to stimulation until about 7.5 weeks, when lightly stroking the upper lip, lower lip, or wings of the nostrils—collectively called the perioral area—caused the embryo to sharply move its head and trunk away from the offending strand of hair. The rest of its body still lacked sensation. Between 8 and 9.5 weeks, the fetus had feeling in its lower jaw area, the sides of its mouth, and its nose. By 10.5 to 11 weeks, the upper regions of its face, such as the eyelids, could feel touch. Two or three days after that, at around 11.5 weeks, the entire face of the fetus was sensitive to touch. In that same week, the fetus moved its lower jaw for the first time. At 12 to 12.5 weeks, the fetus had mature reflexes—it closed its lips when Hooker stroked them (in contrast to moving its whole upper body). By 13.5 to 14.5 weeks of age, the fetus was opening and closing its mouth, scrunching its face into a scowl-like expression, moving its tongue, and even swallowing.

The work of Hooker and Humphrey uncovered the close evolutionary ties we have to the other animals who share our planet. Though timelines may vary, reptiles such as terrapins, birds such as carrier pigeons, and fellow mammals such as rats, cats, and sheep share a similar developmental sequence. In all of these species—ours included—the tactile system is the first to come online, and sensation begins in the perioral area, which is innervated by the fifth and largest cranial nerve: the trigeminal nerve. This nerve carries sensory inputs into the brain, giving us the ability to experience touch on our faces and inside our mouths. It also carries motor outputs from the brain to the muscles responsible for jaw movements, giving us the ability to feed and vocalize.


Some fetuses did vocalize. “Between 18.5 and 23.5 weeks,” Hooker reported, “the chest and abdominal contractions and expansions increase in incidence and amplitude, until brief but effective respiration . . . occurs . . . Whenever respiration occurs, phonation—only a high-pitched cry, of course—accompanies its initiation and may be repeated at intervals following the establishment of breathing.”

Recent studies of prematurely born infants—conducted just a few years ago by Kimbrough Oller at the University of Memphis—have shown that babies born as early as 32 weeks (eight weeks before full term) do more than cry. They produce protophones, the infant sounds that eventually turn into speech.

How then does our ability to vocalize come to be? Is it in our nature—instinctive, inherent, or hardwired? Or is this ability acquired, learned, or absorbed from our environment? Is there some nurture to speak of, even in the womb? Ultimately, this nature versus nurture debate—often framed as a fight for causal supremacy between two opposing factions—gets us nowhere.

I learned this lesson while working in the Developmental Neuromechanics and Communication Lab, led by Asif Ghazanfar at Princeton University, where I earned my doctorate. When a behavior, such as vocalizing, is present at birth, it is often thought to be largely in our nature. But looking inside the womb reveals that the behavior is the outcome of a developmental process, and at no point can that process be neatly partitioned into silos of nature or nurture.

Nature shapes nurture, and nurture shapes nature. It is not a duality—it’s more like a Möbius strip.

On the afternoon of July 7, 2011, I was with my lab mate Daniel Takahashi, a Brazilian-Japanese medical doctor turned neuroscientist. We were hunched over a padded bench in a dimly lit room of a 1920s neo-Gothic building. I had an ultrasound wand in one hand and a popsicle stick coated with marshmallow fluff in the other. Our pregnant patient lay on the bench between us, as she had done countless times over the past year.

From head to toe, Takahashi and I wore medical-grade protective gear—gloves, gown, surgical mask, face shield, booties, and bonnet. The gear was required in part to meet the stringent standards of the university and federal regulatory boards, but it also served as protection: The transmission of germs between us and our patient could be perilous, particularly for her.

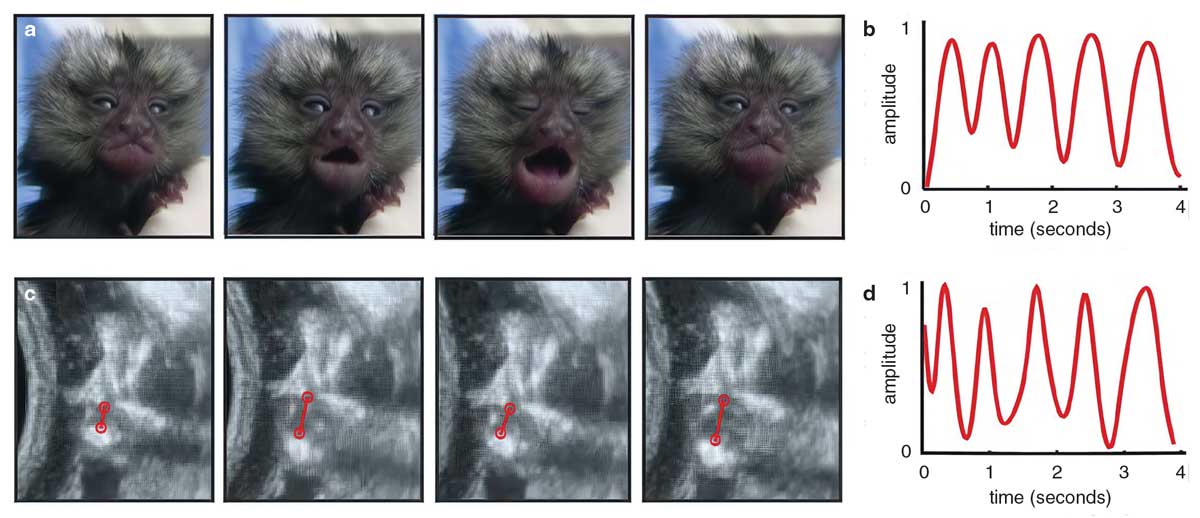

Our patient was not concerned about germs, the ultrasound gel dripping off her stomach, or even Takahashi’s gently restraining hands. Her mind was on the marshmallow fluff. We were thankful for this distraction, as our task was not an easy one. We had to find her tiny acrobatic fetus in the vastness of her womb and observe its face for as long as she would let us while recording the fetus through a DVD player hooked up to the ultrasound machine. On this day, skill and luck collided. Takahashi and I were able to watch the face of the fetus for almost 30 minutes and, for a few moments, we saw it moving its mouth.

Our pregnant patient was a marmoset monkey (Callithrix jacchus), one of five females and five males housed in pairs in a large indoor enclosure a few doors away from where we were conducting the ultrasound examination. We called her Laurie Rose. She was the first of our monkeys to get pregnant and carry her baby to term. We were able to observe, quantify, and characterize the mouth movements of her fetus from day one of movement to the day before birth—an approach not feasible in humans.


Marmosets are a New World monkey species found in Brazil. They shared a common ancestor with humans about 35 million years ago. Despite the distant ties and their squirrel-like appearance, marmosets resemble humans in a number of ways. Physiologically, they develop 12 times faster than we do but have roughly the same brain and body parts. They live in family units of 3 to 15—mother, father, and children (the monkey equivalent of a nuclear family). Occasionally, extended family members and unrelated adults will live with them. They engage in both monogamy and polygamy, and older family members tend to assist in child-rearing.

Marmosets are chatty and take turns speaking to one another, as we do. Newborn marmosets cry and make a number of other sounds. These sounds are comparable to human protophones but are more adult-like in their form. Most remarkably, Takahashi and Ghazanfar discovered that marmoset babies learn to “talk” using parental feedback, similar to how human babies learn. It is highly likely that the vocal development of a marmoset fetus is similar to human vocal development.

At first, Laurie Rose’s fetus—we called him Jack—made very few mouth movements, and they were often accompanied by motions of the head. These first actions looked different from the movements Jack made after birth.

Hooker and other scientists of that era believed that fetal movements were primarily reflexive, evoked by external stimuli. We now know that the earliest fetal motions are spontaneous, first triggered in the muscles themselves and later by electrical pulses sent from the spinal cord or brain stem to the muscles. Fetal movements can be spontaneous or evoked by a range of stimuli, such as the mother rubbing her belly or the fetus touching its own body.

Actions of the fetus—purposeless though they may appear—are important. They support the growth of muscles, tendons, ligaments, cartilage, and bones. They steer the development of the sensorimotor circuits. General movements are storms of activity that help the fetus develop a sense of its own form and body position. Isolated movements help connect the body to the brain through tactile feedback. Twitches, which occur during sleep, are the brain’s way of testing its circuitry. The behavioral neuroscientist Mark Blumberg likens the act to a switchboard operator flipping switches, one at a time, to test which light turns on with each switch.

As Jack got older, he grew more active. He moved his mouth more. I calculated the entropy of his movement patterns and found that it was decreasing, which meant that Jack’s actions were getting less chaotic. He was moving his mouth independently of his head. Hooker had found a similar trajectory in humans: When he stroked the mouth of the 7.5-week-old embryo, it moved its entire upper body away, but with time its body parts acquired greater autonomy, and at 12.5 weeks the fetus responded by closing its mouth alone.
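The entropy measure mentioned here can be illustrated with Shannon entropy computed over categorical movement labels. This is a minimal sketch under stated assumptions: the labels (`mouth`, `head`, `mouth+head`) and both sessions are hypothetical, and the study may well have computed entropy over a different representation of movement. Lower entropy simply means the mix of movements is more predictable, that is, less chaotic.

```python
import math
from collections import Counter


def shannon_entropy(labels):
    """Shannon entropy, in bits, of a sequence of categorical movement labels."""
    if not labels:
        return 0.0
    counts = Counter(labels)
    total = len(labels)
    # H = -sum(p * log2(p)) over the observed label frequencies.
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Hypothetical observation sessions (illustrative, not data from the study).
early_session = ["mouth+head", "head", "mouth", "mouth+head", "head", "mouth"]
late_session = ["mouth", "mouth", "mouth", "mouth", "mouth+head", "mouth"]

print(f"early: {shannon_entropy(early_session):.3f} bits")
print(f"late:  {shannon_entropy(late_session):.3f} bits")
```

The early session, with three equally common patterns, scores log2(3), about 1.585 bits; the late session, dominated by isolated mouth movements, scores about 0.650 bits.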

How does the fetal body become more finely tuned? It is hard to look under the hood of a living being and know exactly what is happening, but a group of roboticists in Japan who are attempting to reverse-engineer human development, and eventually build humanoid robots, provide us with clues. At the University of Tokyo Graduate School of Information Science and Technology, Yasuo Kuniyoshi, Hiroki Mori, and Yasunori Yamada simulated the motor development of a fetus. At the start, the computer-generated fetus was placed inside an artificial womb, enclosed in an elastic membrane, and bathed in fluid. It had immature neural circuits, along with a biologically accurate musculoskeletal body and tactile receptor distribution.

The neural circuits of the simulated fetus triggered movement. As the fetus moved, its tactile receptors detected the pressure of fluid against its moving body. The information was passed on to its nervous system. The action of each muscle affected the sensory input it received as well as the sensory input received by adjacent muscles. Sometimes an isolated movement brought one body part into contact with another, inducing active stimulation of the touching part and passive stimulation of the touched part.

The neural circuits of the simulated fetus began to learn. Initially, large undifferentiated circuits controlled multiple body parts—for example, a single motor unit controlling the head and trunk. As development progressed, the brain responded to feedback from its body, causing these large units to subdivide into smaller, more precisely controlled units—for example, separate control of the head and trunk, driven by the separate experiences they have while moving.


Neuroscientists have a mnemonic for the biological process that adjusts the strength of connections between neurons: “Fire together, wire together. Out of sync, lose your link.” The catchy rhyme, which is credited to neurobiologist Carla Shatz at Stanford University, means that the brain rewires and fine-tunes itself by strengthening the connections between neurons that are repeatedly activated together and weakening the connections between neurons that are not activated together. The weak connections are ultimately pruned.
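The rhyme can be pictured as a toy correlation-based update rule. In this sketch, the unit names, starting weights, learning rate, and pruning threshold are all hypothetical choices made for illustration; it illustrates the mnemonic, not a model of real synaptic plasticity or of the Tokyo simulation. Links between units that are active together grow stronger, links between units active out of sync grow weaker, and links that fall below a threshold are pruned.

```python
from itertools import combinations


def hebbian_step(weights, active, rate=0.1):
    """Apply one toy update for a single pattern of unit activity.

    weights: dict mapping an (i, j) unit pair to a connection strength.
    active:  sequence of 0/1 flags, one per unit.
    """
    for i, j in combinations(range(len(active)), 2):
        if active[i] and active[j]:
            # Fire together, wire together.
            weights[(i, j)] += rate
        elif active[i] != active[j]:
            # Out of sync, lose your link (strength never drops below zero).
            weights[(i, j)] = max(0.0, weights[(i, j)] - rate)
        # If neither unit fires, the link is left unchanged.
    return weights


def prune(weights, threshold=0.2):
    """Keep only the connections whose strength reaches the threshold."""
    return {pair: w for pair, w in weights.items() if w >= threshold}


# Hypothetical units and activity patterns, chosen only for illustration.
units = ["mouth motor", "mouth touch", "head motor"]
weights = {pair: 0.5 for pair in combinations(range(len(units)), 2)}
patterns = [(1, 1, 0), (1, 1, 0), (0, 0, 1), (1, 1, 0), (0, 0, 1)]

for pattern in patterns:
    hebbian_step(weights, pattern)

for i, j in prune(weights):
    print(units[i], "<->", units[j])
```

Because the two mouth units always fire together in these made-up patterns, their link strengthens, while both links to the independently active head unit weaken and are pruned, loosely echoing how the mouth comes to be controlled separately from the head.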

Fetal movements self-organize through the continuous and reciprocal interactions between brain, body, and environment. In the simulation, as in real life, the musculoskeletal body of the fetus determined both the possibilities and constraints of movement. Human heads, for example, rotate around two socket pivots, giving us a decent range of motion but not as much as an owl’s head, which rotates around a single socket pivot.

As the simulated fetus grew larger, it came into more physical contact with the uterine wall. When this happened, the weak and continuous tactile signals from the amniotic fluid were followed by the short, blunt force of the wall hitting its flesh. The brain took note, and the fetus modified its actions.

The fetal body, like ours, varies in sensitivity to touch—the mouth region is dense with tactile receptors, whereas the top of the head is not. As the simulated fetus matured, it frequently touched its more sensitive parts while barely touching other ones. This behavior mirrors observations of real-life fetuses, who often direct their arm movements to their mouths and even open their mouths in anticipation of their hands arriving there.

As the simulated fetus continued to grow, its larger body parts (such as the head) came to be more constrained by the uterine wall, and only small regions (such as the mouth) could move freely. The neural circuits responded to all these contingencies, and the body of the fetus became more precisely controlled (for example, separate functions of the head and mouth, more maneuverable orofacial muscles). The fetus was now practicing infant-like movements. In real life, the most frequent actions in the third trimester are face-related—hands touching the face, facial expressions like scowling, and mouth movements. All the behaviors seen in a newborn have at some point been observed in the fetus.

Narayanan, D. Z., et al. 2022.

Like the simulated fetus, Jack began moving his mouth independently while rarely moving his head. I used a dynamic time warping (DTW) algorithm—often used to detect forged signatures—to match the temporal profiles of his mouth movements to those he eventually made as an infant. DTW generates a “distance” score to indicate the extent to which one profile must be “warped” to match the other. The analysis revealed that, in parallel with the decoupling of his mouth and head, a subset of Jack’s mouth motions were becoming nearly identical to those he made while calling out to his family as an infant. Fetal Jack had developed the behavioral repertoire he would so often use in life.
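The DTW distance described in this paragraph can be sketched with the classic dynamic-programming formulation. This is the textbook algorithm with an absolute-difference local cost, offered as an assumption; the study’s actual preprocessing, normalization, and cost function are not described here, and the mouth-opening profiles below are hypothetical.

```python
def dtw_distance(a, b):
    """Classic dynamic time warping distance between two 1-D sequences.

    The local cost is the absolute difference between samples; the result is
    the minimum total cost over all monotonic alignments of a and b.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = best cost of aligning a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # b[j-1] also matches the previous a sample
                cost[i][j - 1],      # a[i-1] also matches the previous b sample
                cost[i - 1][j - 1],  # advance both sequences together
            )
    return cost[n][m]


# Hypothetical mouth-opening profiles (arbitrary units), not study data.
infant_call = [0, 1, 3, 1, 0]
fetal_slow = [0, 0, 1, 1, 3, 3, 1, 0]   # same shape, stretched in time
fetal_other = [3, 2, 1, 0, 0]           # a different shape

print(dtw_distance(infant_call, fetal_slow))
print(dtw_distance(infant_call, fetal_other))
```

The time-stretched profile scores a distance of 0.0 because warping absorbs the difference in tempo, whereas the differently shaped profile scores a positive distance. That tolerance to tempo is why DTW suits comparing movements that unfold at different speeds.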

My work with Ghazanfar and Takahashi established—for the first time in a primate—that the ability to vocalize at birth is not innate. Rather, it undergoes a lengthy period of prenatal development, even before sound can be produced.

Marmosets and other nonhuman primates have precursors to language, but language is unique to humans. Even as newborns, humans show a preference for speech: They recognize their native languages, and they remember the stories they have frequently heard in the womb. Babies don’t merely cry—they cry in the accent of their mother tongue. To understand the development of these uniquely human behaviors, we need to turn to hearing.

The sensory systems of birds and mammals, humans included, develop in a fixed order—with hearing developing second to last, just before the visual system. The human auditory system comes online between 24 and 28 weeks, when the spiral ganglion neurons connect the auditory receptors—the hair cells of the inner ear—to the brain stem and temporal lobe. Hearing becomes fully functional in the third trimester.

Hearing is often studied in sheep. The size of the middle and inner ear of sheep is similar to ours, and hearing develops in utero in both species. In a 2003 study from the University of Florida led by Sherri L. Smith, who is now at Duke University, researchers implanted a tiny electronic device in the inner ear of fetal sheep to study what speech signals the auditory receptors of the fetus picked up and transmitted to its brain. They then played 48 English sentences through a speaker positioned near the mother sheep. The sounds captured by the device were replayed to adult human listeners, who attempted to decipher the original sentences from the speech signals recorded in the fetus’s inner ear. In total, the listeners deciphered about 41 percent of the sentences. They tended to miss or confuse high-frequency consonants, such as s, h, and f. A word such as ship could easily be confused with slit or sit. The low-frequency vowels were heard more reliably.

Vowel sounds penetrate the fetus’s inner ear better than high-frequency consonants because the uterine environment acts as a low-pass filter, absorbing and muffling sound frequencies above 600 hertz. Although individual speech sounds are suppressed in the womb, what remains prominent are the variations in pitch, intensity, and duration—what linguists refer to as the prosody of speech.
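To get a feel for what a low-pass filter with a 600-hertz cutoff does, one can compute the gain of an idealized first-order low-pass filter. This is a simplifying assumption: the womb is not literally a first-order filter, and the example frequencies are rough illustrative values rather than measurements.

```python
import math


def lowpass_gain_db(freq_hz, cutoff_hz=600.0):
    """Gain, in decibels, of an idealized first-order low-pass filter.

    |H(f)| = 1 / sqrt(1 + (f / fc)^2); the uterus is NOT literally this filter.
    """
    magnitude = 1.0 / math.sqrt(1.0 + (freq_hz / cutoff_hz) ** 2)
    return 20.0 * math.log10(magnitude)


# Illustrative frequencies (rough assumptions, not measurements).
examples = {
    "adult voice pitch": 200.0,
    "filter cutoff": 600.0,
    "'s' hiss": 5000.0,
}
for label, freq in examples.items():
    print(f"{label:>17}: {lowpass_gain_db(freq):6.1f} dB")
```

Under this toy model, a 200-hertz voice pitch loses only about 0.5 decibel, the cutoff itself about 3 decibels, and the hiss of an s near 5,000 hertz roughly 18.5 decibels. That contrast is consistent with pitch-carrying prosody surviving while high-frequency consonants are muffled.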


Prosody is what gives speech its musical quality. When we listen to someone speak, prosody helps us interpret their emotions, their intentions, and the overall meaning of their message. Different languages have different prosodic patterns. Native speakers of English, French, or Italian use contrasts in syllable duration to make words more salient. When native English speakers say the phrase “to Ro:me,” they draw out the vowel o in Rome (but not in to). A native Japanese speaker would use a change in pitch instead. They would say “^Tokyo kara” (“to Tokyo”) to draw the listener’s attention to the more important word Tokyo. In a 2016 study, French psychologists Nawal Abboub, Thierry Nazzi, and Judit Gervain used near-infrared spectroscopy to image the brains of newborns in one of Europe’s largest pediatric hospitals. They found that the infants had learned the prosodic patterns—duration contrast versus pitch contrast—of their mother tongue.

Language learning begins in the womb, and it begins with prosody. Exposure to speech in the womb leads to lasting changes in the brain, increasing newborns’ sensitivity to previously heard languages. The mother’s voice is the most dominant and consistent sound in the womb, so the person carrying the fetus gets first dibs on influencing the fetus. If the mother speaks two languages, her infant will show equal preference and discrimination for both languages.

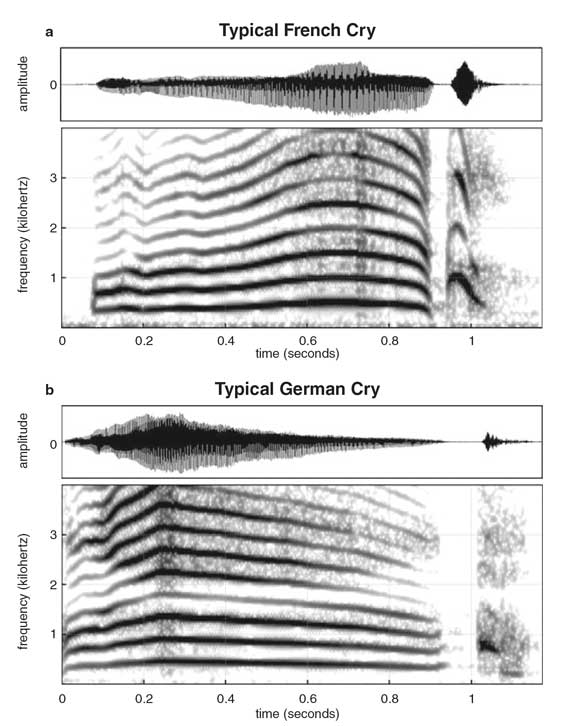

Newborns cry with the melody of their parents’ native language. French babies, for example, tend to cry with a rising tone (a), whereas German babies tend to cry with a falling tone (b). Both patterns mimic the prosody of the language they heard most often in utero.

Mampe, B., et al. 2009.

The fetus’s knowledge of native prosody goes beyond perception. A 2009 collaboration between German and French speech development researchers collected and analyzed the cries of 30 German and 30 French newborns from strictly monolingual families. They found that the French newborns tended to cry with a rising—low to high—pitch, whereas the German newborns cried more with a falling—high to low—pitch. Their patterns were consistent with the accents of adults speaking French and German.
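The rising versus falling distinction can be made concrete with a simple proxy: fit a straight line to a cry’s pitch contour and read the sign of its slope. This is a swapped-in illustration, not the method of the 2009 study, which may have characterized melody contours differently. The sampling interval and pitch values below are hypothetical.

```python
def contour_slope(times_s, pitches_hz):
    """Least-squares slope of a pitch contour, in Hz per second."""
    n = len(times_s)
    if n < 2 or n != len(pitches_hz):
        raise ValueError("need two or more matching (time, pitch) pairs")
    mean_t = sum(times_s) / n
    mean_p = sum(pitches_hz) / n
    num = sum((t - mean_t) * (p - mean_p) for t, p in zip(times_s, pitches_hz))
    den = sum((t - mean_t) ** 2 for t in times_s)
    if den == 0:
        raise ValueError("time points must not all be equal")
    return num / den


def classify_contour(times_s, pitches_hz):
    """Label a contour as rising, falling, or flat by the sign of its slope."""
    slope = contour_slope(times_s, pitches_hz)
    if slope > 0:
        return "rising"
    if slope < 0:
        return "falling"
    return "flat"


# Hypothetical cry contours sampled every 0.1 s (not data from the study).
times = [0.0, 0.1, 0.2, 0.3, 0.4]
cry_a = [400, 420, 450, 480, 500]   # low to high
cry_b = [500, 480, 450, 420, 400]   # high to low

print(classify_contour(times, cry_a))  # rising
print(classify_contour(times, cry_b))  # falling
```

A straight-line fit ignores the arch shape that many cries have, so a real analysis would need a richer description of the contour; the slope sign only captures the overall low-to-high or high-to-low trend.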

The newborns had not just memorized the prosody of their native languages; they were actively moving air through their vocal cords and controlling the movements of their mouths to mimic this prosody in their own vocalizations. Babies are communicating as soon as they are born, and these abilities are developing in the nine months before birth.

There is no genetic blueprint, program, or watchmaker who knows how it must turn out in the end. The reality of how these behaviors come to be is far more sophisticated and elegant. They develop through continuous interactions across multiple levels of causation—from genes to culture. The processes that shape them unfold over many timescales—from the milliseconds of cellular processes to the millennia of evolution.

This article is adapted from a version previously published on Aeon.

