Making Sense of the Brain

By Brian Hayes

In his new book, Matthew Cobb skillfully guides readers through the history of neuroscience, highlighting sometimes dazzling advances while acknowledging that our understanding of the brain is still primitive.

June 12, 2020


THE IDEA OF THE BRAIN: The Past and Future of Neuroscience. Matthew Cobb. 470 pp. Basic Books, 2020. $32.

A symphony, a sonnet, a theorem, a joke: These are all products of human thought and imagination. Where do thought and imagination come from? They abide in the brain, which provides the material substrate for all our ideas and feelings. Hooking these two facts together suggests that creating art or mathematics or humor is just a matter of evoking certain patterns of excitation in a network of nerve cells. Fire the right neurons in the right order, and out comes Beethoven’s Fifth Symphony: Dah dah dah dum! To me, this conception of the brain as an engine of creativity is both irrefutable and inconceivable. I am convinced it is true, and yet I cannot fathom how Beethoven’s brain performed that trick. I don’t even know how my own brain came up with the sentences I am writing at this instant.

Matthew Cobb doesn’t know the answers either, but that is not an indictment of his book The Idea of the Brain. On the contrary, a good test of any work on neuroscience is whether it inspires sufficient respect for the depths of our ignorance. Cobb gets high marks.


The first half of the book surveys the history of ideas about the mind and the brain from antiquity up to about 1950. Throughout most of that period, the brain was not yet recognized as the seat of thought and memory, and therefore much of the history is necessarily a catalog of outlandish errors. Aristotle believed that “pleasure and pain, and all sensation plainly have their source in the heart,” just as he also believed that the Earth is the center of the universe. For well over a thousand years, the Western world took his word for it on both points. The two fallacies were finally overturned at roughly the same time—in the 16th and 17th centuries.

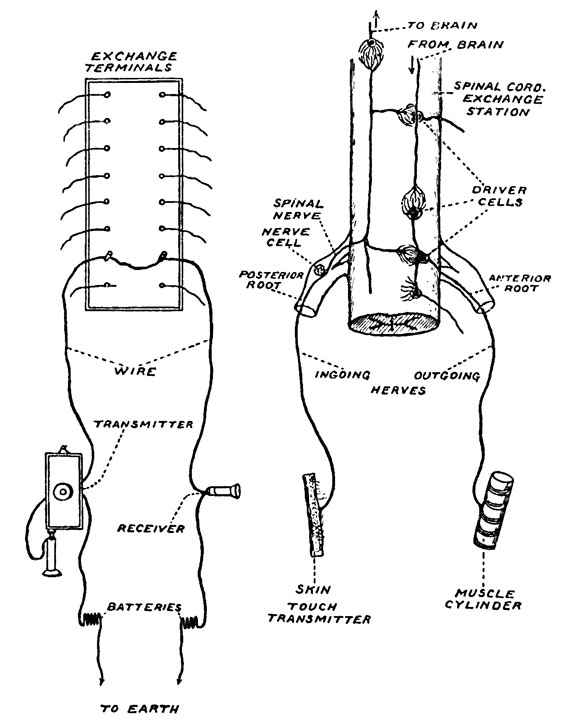

Even after the brain was widely accepted as the “nerve center” of the body, no one had any direct evidence bearing on how it might work. That left plenty of room for philosophical speculations and fanciful analogies—a not-always-helpful expression of human imagination and creativity. René Descartes, for example, proposed that the nerves are hollow tubes carrying “animal spirits” from sensory organs to the brain and from the brain to the muscles. When your foot is too close to the fire, animal spirits surge to the brain and then back to your leg, causing the limb to pull away from danger. This description of reflex action seems rather modern and mechanistic—except for the animal spirits. It’s not clear whether those spirits represent a physical substance (a gas or a liquid pumped through the tubes) or something more ethereal and supernatural.

From The Idea of the Brain.

The hydraulic or pneumatic metaphor was later exchanged for an electrical one. In the latter case, there was experimental support for the idea, starting with Luigi Galvani’s famous demonstration that an electric charge applied to an exposed nerve will cause a frog’s leg to twitch. Thus the nerves seemed to be acting as conductors of an electric current. Soon the nervous system was being compared to a telegraph network, with messages flowing into a central office (the brain) and back out to the rest of the body.

In fact, nerves are neither hollow tubes nor wires. At the end of the 19th century, Camillo Golgi and Santiago Ramón y Cajal produced clear microscopic images and drawings showing that the basic structural unit of both brain tissue and the peripheral nervous system is the individual neuron, or nerve cell. A typical neuron has a bushy array of dendrites that receive incoming signals, a cell body that processes the signals, and a single output fiber, the axon, that forms junctions (synapses) with other neurons. Over subsequent decades, further research revealed the biochemical details of how signals propagate along the dendrites and axons, and how they pass from one cell to the next at the synapse. Although we now know there’s more going on in neurons than this simple one-way flow of information, the “neuron doctrine” remains the bedrock of brain science.

Cobb takes an expansive and inclusive approach to historical narrative. In telling the story of the neuron doctrine, he mentions not just Cajal and Golgi, who were the two Nobel Prize winners, but also several supporting actors: Wilhelm His (who named the dendrite), Albert von Kölliker (who named the axon), and Wilhelm von Waldeyer (who came up with the term neuron), plus August Forel, Louis-Antoine Ranvier, and Arthur van Gehuchten. There’s even a cameo part for Fridtjof Nansen, the polar explorer. I am grateful to learn something about these many peripheral figures, but there are moments when encyclopedic breadth comes at the expense of depth. I have counted 551 names of individuals in Cobb’s index, which works out to less than a page per person on average.


Part 2 of The Idea of the Brain, covering the period since 1950, begins with a provocative statement: “In reality, no major conceptual innovation has been made in our overall understanding of how the brain works for over half a century.” I can think of a few neuroscientists active in the past 50 years who might take exception to this claim, but many others would agree—and so would I.

Cobb is not arguing that neuroscience has made no progress since 1950. On the contrary, advances in instrumental and experimental techniques have been dazzling. We can now probe individual neurons in the living brain, recording changes in their internal state. Noninvasive scanning technologies reveal which regions of the brain are most active during various mental tasks such as reading or counting. The ambitious new field of connectomics aims to construct detailed wiring diagrams for blocks of brain tissue comprising millions of neurons—and eventually, wiring diagrams for entire brains. We’ve come to understand a lot about perception and the control of movement—the input and output channels of the brain. But the biggest questions—how do we learn, remember, think?—remain deeply puzzling.

At age seven I learned that 7 × 9 = 63. Committing that fact to memory must have produced a physical change somewhere in my brain—and evidently it was an enduring change, since I can still summon the fact to mind 63 years later. As Cobb explains, studies in simple animals such as sea slugs have shown that learning alters synapses, either strengthening or weakening the connections between neurons. But which synapses, between which neurons, embody a given memory? Is each memory stored at some specific site in the brain, or are memories distributed over many thousands of scattered neurons? There is evidence to support either of these possibilities. The neurosurgeon Wilder Penfield discovered that stimulating certain locations in the cerebral cortex can evoke vivid and very specific memories of long-ago events. On the other hand, Karl Lashley, in experiments with animals, found that memory impairments caused by surgery were proportional to the total amount of tissue destroyed, suggesting that “the whole of the brain contributed to the making and recollection of memories.”

Another question seems even more perplexing: How do changes in synaptic strength encode all the kinds of information we remember—multiplication tables, childhood birthday parties, the scent of lilacs, how to ride a bicycle? Cracking the neurological code looks to be a much harder problem than deciphering the genetic code was.

In assessing the current state of neuroscience, Cobb has much to say about the complicated relations between brains and computers. On the one hand, certain kinds of computers and computer programs are inspired by biological neural networks; they are designed to learn from examples and they perform tasks that lie in the traditional realm of brainwork, such as recognizing faces and translating between languages. On the other hand, the computer has been adopted as a model for understanding the brain. The basic components of computer architecture—logic circuits, memory, long-term storage, input and output channels—could also be plausible building blocks of a brain.

Cobb is skeptical of arguments for a close connection between brains and computers. Even when a computer performs the same task as a brain, it may not employ the same methods. Likewise, although the brain shares functions such as memory and storage with the computer, it surely implements those functions very differently. We don’t yet know how memories are organized in the brain, but they are surely not filed away at sequentially numbered addresses, as they are in the computer. Cobb also emphasizes that modern computers are strictly digital systems, built on devices with two discrete states (1/0, true/false), whereas neurons can modulate their activity over a continuous range.

In Cobb’s judgment, the linkage between brains and computers is merely a metaphor, not to be taken any more seriously than the hydraulic or electrical analogies of earlier eras. And he suggests that the computational metaphor may have reached the end of its useful life. Here I disagree. I would argue that the brain really is a computer, in the same way that the heart really is a pump. The brain is not made of silicon, it doesn’t use binary arithmetic, and it doesn’t run Windows, but the brain computes. And unless there’s something spooky happening inside our heads, the brain is subject to the same constraints as any other machine on what it can and can’t compute.

Part 2 concludes with a chapter on consciousness, which Cobb bravely introduces with an epigraph from the British psychologist Stuart Sutherland:

Consciousness is a fascinating but elusive phenomenon; it is impossible to specify what it is, what it does, or why it evolved. Nothing worth reading has been written about it.

It would be harsh to insist that Sutherland’s statement remains true and that Cobb’s final chapter is therefore not worth reading, but I think Cobb himself would acknowledge that consciousness is a murky area, in which no one knows the right questions to ask, much less the answers. If we set aside the problem of consciousness, I can imagine a theory of the brain that would explain animals’ strategies for escaping danger, finding food, and ensuring the future of their genes. But consciousness—that enduring sense of an “I”—brings us back to explaining sonnets and symphonies.

Part 3 consists of a single chapter on the future. Cobb’s outlook is optimistic, though veiled in vagueness. He goes on record maintaining that “the mystery of consciousness will eventually be solved, in ways I cannot begin to guess.” Of course, “eventually” covers a lot of territory. For perspective, it’s worth noting that Cobb also predicts it will take 50 years to understand the brain of a maggot (an organism whose nervous system Cobb himself has studied).

The Idea of the Brain is a thorough, thoughtful, and enjoyable account of neuroscience for the general reader. Cobb is especially sure-footed in guiding us through the history of the field, which gets only brief and nodding attention in some other recent books. My one serious complaint is that Cobb shies away from the more mathematical and computational aspects of the subject. For example, the concepts of sparse coding, low-dimensional manifolds, and Bayesian statistics are all mentioned but not adequately explained. In some instances, Cobb concedes that he does not fully understand the concepts. Yet some of them are indispensable elements of promising current theories of brain function, and readers who work through the rest of this ambitious book deserve a chance to get better acquainted with them.
