The Man Behind the Curtain

By Tony Rothman

Physics is not always the seamless subject that it pretends to be


DOI: 10.1511/2011.90.186

“I want to get down to the basics. I want to learn the fundamentals. I want to understand the laws that govern the behavior of the universe.” Thousands of admissions officers and physics department chairs have smiled over such words set down by aspiring physicists in their college-application essays, and that is hardly surprising, for every future physicist writes that essay, articulating the sentiments of all of us who choose physics as a career: to touch the fundamentals, to learn how the universe operates.

Erich Lessing/Art Resource, NY

It is also the view the field holds of itself and the way physics is taught: Physics is the most fundamental of the natural sciences; it explains Nature at its deepest level; the edifice it strives to construct is all-encompassing, free of internal contradictions, conceptually compelling and—above all—beautiful. The range of phenomena physics has explained is more than impressive; it underlies the whole of modern civilization. Nevertheless, as a physicist travels along his (in this case) career, the hairline cracks in the edifice become more apparent, as does the dirt swept under the rug, the fudges and the wholesale swindles, with the disconcerting result that the totality occasionally appears more like Bruegel’s Tower of Babel as dreamt by a modern slumlord, a ramshackle structure of compartmentalized models soldered together into a skewed heap of explanations as the whole jury-rigged monstrosity tumbles skyward.

Of course many grand issues remain unresolved at the frontiers of physics: What is the origin of inertia? Are there extra dimensions? Can a Theory of Everything exist? But even at the undergraduate level, far back from the front lines, deep holes exist; yet the subject is presented as one of completeness while the holes (let us say abysses) are planked over in order to camouflage the danger. It seems to me that such an approach is intellectually dishonest and fails to stimulate the habits of inquiry and skepticism that science is meant to engender.

In the first week or two of any freshman physics course, students are exposed to the force of friction. They learn that friction impedes the motion of objects and that it is caused by the microscopic interaction of the two surfaces sliding past one another. It all seems quite plausible, even obvious, yet regardless of any highfalutin modeling, with molecular mountain ranges resisting each other’s passage or running-shoe soles binding to tracks, friction produces heat and hence an increase in entropy. It thus distinguishes past from future. The increase in entropy (the second law of thermodynamics) is the only law of Nature that makes this fundamental distinction. Newton’s laws, those of electrodynamics, relativity … all are reversible: None care whether the universal clock runs forward or backward. If Newton’s laws are at the bottom of everything, then one should be able to derive the second law of thermodynamics from Newtonian mechanics, but this has never been satisfactorily accomplished, and the incompatibility of the irreversible second law with the other fundamental theories remains perhaps the greatest paradox in all physics. This paradox is blatantly dropped into the first days of a freshman course and the textbook authors bat not an eyelash.
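A minimal sketch of the reversibility point, assuming a unit mass on a spring with k = 1 and an illustrative friction coefficient b (both our choices, not the author's). The sketch integrates Newton's equation with the time-symmetric velocity Verlet scheme, reverses the velocity, and integrates again for the same time. Without friction the mass returns to where it started. With the frictional term switched on, it does not.

```python
def step(x, v, dt, k=1.0, b=0.0):
    """One velocity-Verlet-style step for x'' = -k*x - b*v (unit mass).

    With b == 0 this is exactly velocity Verlet, which is time-symmetric.
    With b > 0 the friction term uses the half-step velocity, a crude
    but adequate approximation for this illustration.
    """
    v_half = v + 0.5 * dt * (-k * x - b * v)
    x_new = x + dt * v_half
    v_new = v_half + 0.5 * dt * (-k * x_new - b * v_half)
    return x_new, v_new


def there_and_back(x0, v0, b=0.0, dt=0.01, steps=1000):
    """Run forward, reverse the velocity, run forward for the same time, reverse again."""
    x, v = x0, v0
    for _ in range(steps):
        x, v = step(x, v, dt, b=b)
    v = -v  # "run the film backward"
    for _ in range(steps):
        x, v = step(x, v, dt, b=b)
    return x, -v  # undo the reversal so the result compares directly with (x0, v0)


def energy(x, v, k=1.0):
    """Mechanical energy of the unit-mass oscillator."""
    return 0.5 * v * v + 0.5 * k * x * x


if __name__ == "__main__":
    print("without friction:", there_and_back(1.0, 0.0))
    print("with friction:   ", there_and_back(1.0, 0.0, b=0.5))
```

Without friction the round trip reproduces (1, 0) up to rounding error. With b = 0.5 the mass ends up near rest at the origin, and no reversal of the velocity brings it back.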

To a physicist, moreover, the material world is divided into billiard balls and springs. An ideal spring oscillates forever, but anyone who has ever watched a real-world spring knows that forever usually lasts just a few seconds. We account for this mathematically by inserting a frictional term into the spring equation and the fix accords well with observations. But the insertion is completely ad hoc, adjustable by hand, and to claim that such a fudge somehow explains the behavior of springs is simple vanity.
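For concreteness, here is the usual textbook form of that fix for a mass m on a spring of stiffness k. The coefficient b of the frictional term is the hand-adjusted parameter. The solution shown is the standard one for the underdamped case, b² < 4mk.

```latex
m\ddot{x} = -kx - b\dot{x}
\quad\Longrightarrow\quad
x(t) = A\,e^{-bt/2m}\cos(\omega_d t + \varphi),
\qquad
\omega_d = \sqrt{\frac{k}{m} - \frac{b^2}{4m^2}}
```

Newton's laws supply the −kx term. Nothing in them fixes b, which is simply chosen so that the decay time 2m/b matches what the spring on the bench actually does.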

Perhaps our complacency is due to the fact that we have written down a plausible equation. Physicists have long believed that mathematics is the Rosetta Stone for unlocking the secrets of Nature, and since a famous 1960 essay by Eugene Wigner entitled “The Unreasonable Effectiveness of Mathematics in the Natural Sciences,” the conviction has become an article of faith. It seems to me, though, that the “God is a mathematician” viewpoint is one of selective perception. The great swindle of introductory physics is that every problem has an exact answer. Not only that, students are expected to find it. Such an approach instills in our charges an expectation that is, in fact, exactly contrary to the true state of the world. Vanishingly few problems in physics have exact solutions, and a physicist’s career is one of finding approximations and hopefully not being too embarrassed by them.

In a freshman course we introduce the simple pendulum, nothing more than a mass on the end of a string that oscillates back and forth. Initially Newton’s laws lead to an equation that is too hard to solve, and so we admonish students to simplify it by assuming that the pendulum is executing small oscillations. Then the exercise becomes easy. Well, not only is the original problem too difficult for freshmen, it has no exact solution, at least not in terms of “elementary functions” like sines and cosines. Advanced texts tell you that an exact solution does exist, but the use of the term exact for such animals is debatable. In any case, replace the string by a spring and the problem can easily be made impossible to everyone’s satisfaction. One must distinguish the world from the description afforded by mathematics. As Einstein famously put it, contra Wigner, “As far as the laws of mathematics refer to reality, they are not certain; as far as they are certain, they do not refer to reality.”
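To make the "exact solution" concrete: for a pendulum released from rest at amplitude θ₀, the full equation θ'' = -(g/L) sin θ has a period that can be written with the complete elliptic integral K. That is exactly the kind of non-elementary function the text has in mind. The sketch below is our own and works in units where g/L = 1, so the small-angle period is T₀ = 2π. It evaluates the closed form through the arithmetic-geometric mean and cross-checks it with brute-force Runge-Kutta integration. The function names are hypothetical.

```python
import math


def agm(a, b, rel_tol=1e-15):
    """Arithmetic-geometric mean of two positive numbers (converges quadratically)."""
    for _ in range(64):
        if abs(a - b) <= rel_tol * a:
            break
        a, b = 0.5 * (a + b), math.sqrt(a * b)
    return 0.5 * (a + b)


def period_ratio_exact(theta0):
    """T / T0 for a pendulum released from rest at amplitude theta0 (radians, 0 <= theta0 < pi).

    T = (2 / pi) * K(k) * T0 with k = sin(theta0 / 2), where K is the complete
    elliptic integral of the first kind; K(k) = pi / (2 * agm(1, cos(theta0 / 2))).
    """
    return 1.0 / agm(1.0, math.cos(theta0 / 2))


def quarter_period_numeric(theta0, dt=1e-4):
    """Time for theta'' = -sin(theta) to swing from theta0 (0 < theta0 < pi) down to 0.

    Units have g / L = 1, so the small-angle period is T0 = 2 * pi.
    Uses classical fourth-order Runge-Kutta, starting from rest.
    """
    def f(th, om):
        return om, -math.sin(th)

    t, th, om = 0.0, theta0, 0.0
    while True:
        k1t, k1o = f(th, om)
        k2t, k2o = f(th + 0.5 * dt * k1t, om + 0.5 * dt * k1o)
        k3t, k3o = f(th + 0.5 * dt * k2t, om + 0.5 * dt * k2o)
        k4t, k4o = f(th + dt * k3t, om + dt * k3o)
        th_new = th + dt * (k1t + 2 * k2t + 2 * k3t + k4t) / 6
        om_new = om + dt * (k1o + 2 * k2o + 2 * k3o + k4o) / 6
        if th_new <= 0.0:
            # Linear interpolation locates the zero crossing inside this step.
            return t + dt * th / (th - th_new)
        t, th, om = t + dt, th_new, om_new


if __name__ == "__main__":
    for degrees in (5, 20, 45, 90, 150):
        th0 = math.radians(degrees)
        exact = period_ratio_exact(th0)
        numeric = 4 * quarter_period_numeric(th0) / (2 * math.pi)
        print(f"{degrees:4d} deg  T/T0 exact = {exact:.6f}  numeric = {numeric:.6f}")
```

The small-angle answer T/T₀ = 1 is only the first term. At 90 degrees the true period is already about 18 percent longer.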

The maxim might be taken to heart a few weeks later in a freshman course, when instructors introduce their students to Newton’s law of gravity. The famous law works exquisitely well, of course, but a singular strangeness goes unremarked. According to the equation, if two objects become infinitely close to one another, the force of attraction between them becomes infinite. Infinite forces don’t appear in Nature (at least we hope they don’t), and we dismiss this pathology with the observation that real objects have a finite size and their centers never get so close to each other that we need to worry. But the first equation in any freshman electricity and magnetism course is “Coulomb’s law,” which governs the attraction or repulsion of electrical charges and is identical in form to Newton’s law. Now, in modern physics we often tell students that electrons and protons are point particles. In that case, you really do need to worry about infinite forces, and it is exactly this difficulty that led to modern field theories, such as quantum electrodynamics. Well, Newton himself said, “Hypotheses non fingo”: “Look guys, the equation works, usually.”
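A quick numerical sketch of the dismissal described above. It assumes, unrealistically, a sphere of uniform density, and uses rounded values for G and for Earth's mass and radius. Outside the sphere the field is identical to the point-mass law. Inside, it falls linearly to zero at the center instead of blowing up. The point-mass formula, which is all Coulomb's law offers for a point charge, has no such escape.

```python
G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2 (approximate)
M_EARTH = 5.972e24     # kg (approximate)
R_EARTH = 6.371e6      # mean radius, m (approximate)


def g_point_mass(M, r):
    """Newton's inverse-square law for a point mass: diverges as r -> 0."""
    return G * M / r**2


def g_uniform_sphere(M, R, r):
    """Field strength at distance r from the center of a uniform-density sphere."""
    if r >= R:
        return G * M / r**2   # outside: same as a point mass (shell theorem)
    return G * M * r / R**3   # inside: only the mass within radius r contributes


if __name__ == "__main__":
    for frac in (2.0, 1.0, 0.5, 0.1, 0.001):
        r = frac * R_EARTH
        print(f"r = {frac:>6} R: point mass {g_point_mass(M_EARTH, r):.4g} m/s^2, "
              f"uniform sphere {g_uniform_sphere(M_EARTH, R_EARTH, r):.4g} m/s^2")
```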

Electricity and magnetism courses certainly hold their share of mysteries. The highlight of any beginning course on this subject, at least for the instructor, is Maxwell’s equations, the equations that unified electricity and magnetism into electromagnetism. Soon after postulating them, we demonstrate to our students that light consists of traveling electric and magnetic fields, oscillating at right angles to one another. We next assert that light exerts a pressure on matter; it is this radiation pressure that provides for the detonation of hydrogen bombs and the possibility of solar sails. A common explanation of light pressure in undergraduate texts is that the electric field of the light wave causes electrons to accelerate in one direction and the magnetic field then pushes them forward. Not only is this explanation completely wrong, despite its appearance in fifth editions of books, but correcting it requires introducing a famous ad hoc suggestion known as the Abraham-Lorentz model, which does reproduce the phenomenon. Putting the Abraham-Lorentz model on a firm footing, on the other hand, led to the development of quantum electrodynamics. Quantum electrodynamics itself is, however, famously riddled with infinities, and abolishing them requires the further ad hoc procedure of renormalization, which was so distasteful to Paul Dirac that he ceased doing physics altogether. Although the theory of renormalization has advanced since those days, many physicists would echo Richard Feynman, one of the technique’s inventors, who called it “hocus-pocus.” Thus it is not entirely clear whether physics has ever provided an adequate underpinning to the wisdom so blithely dispensed in first-year texts.
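Two of the quantities in this paragraph are easy to put numbers to. The sketch below assumes normal incidence on an opaque surface and an approximate solar intensity of 1361 W/m² near Earth. It computes the light pressure a solar sail would feel. It also computes the characteristic time τ = q²/(6πε₀mc³) in the Abraham-Lorentz radiation-reaction force F = mτ da/dt for an electron. That τ is only roughly 6 × 10⁻²⁴ s is why the force is usually negligible. The same term is also responsible for the equation's notorious runaway solutions.

```python
import math

C = 299_792_458.0            # speed of light, m/s (exact by definition)
E_CHARGE = 1.602176634e-19   # elementary charge, C (exact by definition)
EPS0 = 8.8541878128e-12      # vacuum permittivity, F/m
M_E = 9.1093837015e-31       # electron mass, kg


def radiation_pressure(intensity, reflectance=0.0):
    """Pressure (Pa) of light at normal incidence on an opaque surface.

    I / c if fully absorbed, 2I / c if perfectly reflected; a surface that
    reflects a fraction `reflectance` and absorbs the rest feels (1 + R) I / c.
    """
    return (1.0 + reflectance) * intensity / C


def abraham_lorentz_tau(q=E_CHARGE, m=M_E):
    """Time constant in the Abraham-Lorentz force F_rad = m * tau * (da/dt), SI units."""
    return q**2 / (6.0 * math.pi * EPS0 * m * C**3)


if __name__ == "__main__":
    solar = 1361.0  # W/m^2, approximate solar intensity near Earth (assumed value)
    print(f"absorbing sail:  {radiation_pressure(solar):.3e} Pa")
    print(f"reflecting sail: {radiation_pressure(solar, 1.0):.3e} Pa")
    print(f"electron tau:    {abraham_lorentz_tau():.3e} s")
```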

One of the great moments in the lives of young physicists is their first encounter with “Lagrangian mechanics.” God parts the firmament to reveal Truth. Lagrangian mechanics finds its roots in ancient Greece and in the 17th-century suggestion of Pierre de Fermat that light travels between two points in the shortest possible time. Fermat’s principle of least time allows one to derive the famous law of refraction, Snell’s law, and, apparently, explains the behavior of some dogs in retrieving bones. The idea that Nature minimizes certain quantities eventually led to the “principle of least action,” which states that you take a quantity known as the action and minimize it, and Presto! Newton’s equations for the system miraculously emerge! (For classical systems the action is the system’s kinetic minus potential energy, a quantity known as the Lagrangian, integrated over time.) The realization that Newton’s laws themselves follow from the principle of least action is genuinely awe-inspiring, and the young physicist is immediately convinced that, logically if not historically, the action precedes Newton. Moreover, if you recollect (with pain) how difficult it was even to write down Newton’s equations in freshman physics for those infuriating systems of ropes and pulleys, you will find that the same task becomes surprisingly easy in the Lagrangian formalism, as the technique is known.
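Fermat's principle is easy to test numerically. In this minimal sketch the geometry and speeds are illustrative assumptions. Light travels from a point above a flat interface to a point below it, and brute-force minimization of the travel time picks the crossing point. The result satisfies Snell's law in the form sin θ₁/v₁ = sin θ₂/v₂, with angles measured from the normal, to within the search tolerance.

```python
import math


def travel_time(x, a, b, d, v1, v2):
    """Time for light from (0, a) in medium 1 to cross the interface y = 0 at (x, 0)
    and reach (d, -b) in medium 2, moving at speeds v1 and v2."""
    return math.hypot(x, a) / v1 + math.hypot(d - x, b) / v2


def least_time_crossing(a, b, d, v1, v2, iters=200):
    """Ternary search for the crossing point that minimizes travel time.

    travel_time is convex in x, so the search converges to the unique minimum.
    """
    lo, hi = 0.0, d
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if travel_time(m1, a, b, d, v1, v2) < travel_time(m2, a, b, d, v1, v2):
            hi = m2
        else:
            lo = m1
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    a, b, d = 1.0, 1.0, 2.0
    v1, v2 = 1.0, 0.75                       # illustrative speeds, in units of c
    x = least_time_crossing(a, b, d, v1, v2)
    sin1 = x / math.hypot(x, a)              # sine of the angle of incidence
    sin2 = (d - x) / math.hypot(d - x, b)    # sine of the angle of refraction
    print(f"sin1 / v1 = {sin1 / v1:.9f}")
    print(f"sin2 / v2 = {sin2 / v2:.9f}")
```

Nothing about refraction is put in by hand. The only input is "minimize the time," and Snell's law comes out.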

But the great swindle of freshman physics is indeed the conceit that problems have exact solutions. A standard question during my sophomore year was to write down the equations for a double pendulum, which is merely one pendulum swinging from the tip of another. With the Lagrangian formalism, the task is a simple one. What went unsaid, or was perhaps unknown at the time, is that the double pendulum is a chaotic system, and so solving the equations exactly is impossible. What then did we really learn from the exercise?
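The exercise can at least be carried out numerically. This minimal sketch assumes unit masses, unit arm lengths, g = 9.81 m/s² and a hand-written fourth-order Runge-Kutta integrator, all our choices rather than the author's. The equations of motion are the standard ones that follow from the double pendulum's Lagrangian. Two starts that differ by a nanoradian soon disagree completely. The energy function is included as a sanity check on the integrator.

```python
import math

G = 9.81  # m/s^2; unit masses and unit arm lengths are assumed below


def derivs(state, m1=1.0, m2=1.0, l1=1.0, l2=1.0, g=G):
    """Time derivatives of (theta1, omega1, theta2, omega2) for a planar double pendulum.

    Angles are measured from the downward vertical; point masses on rigid, massless rods.
    """
    t1, w1, t2, w2 = state
    delta = t1 - t2
    den = 2 * m1 + m2 - m2 * math.cos(2 * delta)
    a1 = (-g * (2 * m1 + m2) * math.sin(t1)
          - m2 * g * math.sin(t1 - 2 * t2)
          - 2 * math.sin(delta) * m2 * (w2**2 * l2 + w1**2 * l1 * math.cos(delta))) / (l1 * den)
    a2 = (2 * math.sin(delta)
          * (w1**2 * l1 * (m1 + m2)
             + g * (m1 + m2) * math.cos(t1)
             + w2**2 * l2 * m2 * math.cos(delta))) / (l2 * den)
    return (w1, a1, w2, a2)


def energy(state, m1=1.0, m2=1.0, l1=1.0, l2=1.0, g=G):
    """Total mechanical energy; the exact equations conserve it."""
    t1, w1, t2, w2 = state
    kinetic = (0.5 * m1 * (l1 * w1) ** 2
               + 0.5 * m2 * ((l1 * w1) ** 2 + (l2 * w2) ** 2
                             + 2 * l1 * l2 * w1 * w2 * math.cos(t1 - t2)))
    potential = -(m1 + m2) * g * l1 * math.cos(t1) - m2 * g * l2 * math.cos(t2)
    return kinetic + potential


def rk4_step(state, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = derivs(state)
    k2 = derivs(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)))
    k3 = derivs(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)))
    k4 = derivs(tuple(s + dt * k for s, k in zip(state, k3)))
    return tuple(s + dt * (p + 2 * q + 2 * r + w) / 6
                 for s, p, q, r, w in zip(state, k1, k2, k3, k4))


def simulate(state, t_end, dt=1e-3):
    for _ in range(round(t_end / dt)):
        state = rk4_step(state, dt)
    return state


if __name__ == "__main__":
    a = (math.pi / 2, 0.0, math.pi / 2, 0.0)          # both arms horizontal, at rest
    b = (math.pi / 2 + 1e-9, 0.0, math.pi / 2, 0.0)   # first angle off by a nanoradian
    for second in range(1, 21):
        a, b = simulate(a, 1.0), simulate(b, 1.0)
        if second % 5 == 0:
            print(f"t = {second:2d} s  |delta theta2| = {abs(a[2] - b[2]):.3e} rad")
```

Writing down the equations, the part the Lagrangian makes easy, is the first function. Everything after it is approximation.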

Nevertheless, in terms of its avowed purpose of deriving equations, the Lagrangian formalism works extraordinarily well for “standard systems” where the damned pulleys are connected by ideal, unstretchable ropes. Unfortunately, once the ropes are allowed to stretch with time, for example, the Lagrangian technique becomes anything but straightforward and the fixes needed to apply it to a given problem become so elaborate that virtually every textbook author either makes a misstatement or avoids those scenarios altogether. Indeed, the general situation is so delicate that one is often unsure whether one has obtained the correct result.

This is not a moot point. Einstein did not consider his theory of gravitation, general relativity, complete until he could derive his field equations from an action, a feat that the mathematician David Hilbert accomplished five days before Einstein himself. General relativity allows for many model cosmologies, most not resembling the real universe, but in any case it is now known that deriving the field equations for certain of them from an action gives an incorrect result. A fair amount of esoteric research has gone into understanding why the failure occurs and how to patch it up, but, as far as I am aware, all fixes require assuming the correct answer to begin with: the Einstein equations. Modern physicists take the primacy of Lagrangian mechanics seriously. Contemporary practitioners, be they cosmologists or string theorists, invariably begin by postulating an action for their pet theory and then derive the equations. But if one does not have a set of previously accepted field equations, how can one be certain that one has obtained the correct answer, especially in an era when theory is so far removed from experiment?
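This sketch shows what "deriving the field equations from an action" means in general relativity, using the standard Einstein-Hilbert form. It assumes units with c = 1 and metric signature (−,+,+,+), and ignores boundary terms. Sign conventions differ between textbooks.

```latex
S = \frac{1}{16\pi G}\int R\,\sqrt{-g}\,d^4x \;+\; S_{\mathrm{matter}},
\qquad
T_{\mu\nu} \equiv -\frac{2}{\sqrt{-g}}\,\frac{\delta S_{\mathrm{matter}}}{\delta g^{\mu\nu}},
\qquad
\frac{\delta S}{\delta g^{\mu\nu}} = 0
\;\Longrightarrow\;
R_{\mu\nu} - \tfrac{1}{2}R\,g_{\mu\nu} = 8\pi G\,T_{\mu\nu}
```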

It would be surprising if the strange world of subatomic and quantum physics did not lead the field in mysteries, conceptual ambiguities and paradoxes, and it does not disappoint. The standard model of particle physics, for instance (the one containing all the quarks and gluons), has no fewer than 19 adjustable parameters, and this about 60 years after Enrico Fermi exclaimed, “With four parameters I can fit an elephant!” Suffice it to say, “beauty” is a term not frequently applied to the standard model.

One doesn’t have to go so far in quantum theory to be confused. The concept of electron “spin” is basic to any quantum mechanics course, but what exactly is spinning is never made clear. Wolfgang Pauli, one of the concept’s originators, initially rejected the idea because if the electron were to have a finite radius, as indicated by certain experiments, then the surface would be spinning faster than the speed of light. On the other hand, if one regards the electron as a point particle, as we often do, then it is truly challenging to conceive of a spinning top whose radius is zero, not to mention the aggravation of infinite forces.
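Pauli's objection amounts to a one-line estimate. For illustration only, the sketch below assumes a rigid sphere of uniform mass density whose radius is the classical electron radius and whose angular momentum equals the spin value ħ/2. It uses the nonrelativistic formula L = (2/5)mr²ω. The equatorial speed comes out more than a hundred times the speed of light. Because the speed scales as 1/r, a smaller radius only makes matters worse.

```python
HBAR = 1.054571817e-34          # reduced Planck constant, J s
M_E = 9.1093837015e-31          # electron mass, kg
R_CLASSICAL = 2.8179403262e-15  # classical electron radius, m
C = 299_792_458.0               # speed of light, m/s


def equatorial_speed(angular_momentum, mass, radius):
    """Surface speed of a rigid, uniform solid sphere: L = (2/5) m r^2 omega, v = omega r."""
    omega = angular_momentum / (0.4 * mass * radius**2)
    return omega * radius


v = equatorial_speed(0.5 * HBAR, M_E, R_CLASSICAL)
print(f"equatorial speed ~ {v:.2e} m/s, roughly {v / C:.0f} times c")
```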

Unfortunately, quantum texts habitually ignore the difficulties that infect the heart of the field. The most fundamental of these is the notorious “measurement problem.” The equation that governs the behavior of any quantum system, Schrödinger’s equation, is as deterministic as Newton’s own, but as many people know, quantum mechanics predicts only the probability of an experiment’s outcome. How a deterministic system, in which the result is preordained, abruptly becomes a probabilistic one at the instant of measurement, is the great unresolved mystery of quantum theory, and yet virtually none of the dozens of available quantum textbooks even mention it. One well-known graduate text completes the irony by including a section titled “measurements” without addressing the issue.
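The division the text describes can be written down in a few lines. This sketch uses a two-level system with an assumed Hamiltonian H = (ħω/2)σₓ, and the function names are our own. evolve() is the Schrödinger equation's deterministic rule, and measure() is the Born rule's random one. Nothing in evolve() tells the program when to stop evolving and start measuring. The programmer makes that choice by hand, which is a small-scale picture of the measurement problem.

```python
import math
import random


def evolve(psi, omega, t):
    """Schrodinger evolution of a two-level state under H = (hbar * omega / 2) * sigma_x.

    U(t) = cos(omega t / 2) I - i sin(omega t / 2) sigma_x: deterministic and norm-preserving.
    """
    a, b = psi
    c, s = math.cos(omega * t / 2), math.sin(omega * t / 2)
    return (c * a - 1j * s * b, -1j * s * a + c * b)


def born_probabilities(psi):
    """Probabilities of outcomes 0 and 1 according to the Born rule."""
    a, b = psi
    return abs(a) ** 2, abs(b) ** 2


def measure(psi, rng):
    """Sample an outcome. This randomness comes from a separate rule, not from evolve()."""
    p0, _ = born_probabilities(psi)
    return 0 if rng.random() < p0 else 1


if __name__ == "__main__":
    psi = evolve((1.0, 0.0), omega=1.0, t=math.pi / 2)  # same input, same output, every time
    print("state:", psi, "probabilities:", born_probabilities(psi))
    rng = random.Random(0)
    print("ten measurements:", [measure(psi, rng) for _ in range(10)])
```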

Quantum text authors, perhaps because of the perversity of their subject, are particularly adept at sweeping conceptual difficulties under the rug. Nowhere is this more apparent than in the celebrated “two-slit” experiment, which is universally invoked to illustrate the wave-particle duality of light and which brings you face to face with the bedrock inscrutability of Nature. The experiment is simple: Shine a light beam through a pair of narrow slits in a screen and observe the results. For our purposes, the great paradoxes illustrated by the two-slit experiment, that light can act like a wave or a particle but not both at the same time, are not central. What is central is that explanations of the experiment’s results invoke both classical light waves, on the one hand, and photons (quantum light particles) on the other.
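For reference, the classical wave-optics result that textbooks use for this experiment is the Fraunhofer two-slit pattern. The sketch assumes monochromatic light, two identical narrow slits and a distant screen. The wavelength and slit dimensions are illustrative values. It is a statement about wave intensity only: photons do not appear anywhere in it.

```python
import math


def two_slit_intensity(theta, wavelength, slit_sep, slit_width, i0=1.0):
    """Far-field (Fraunhofer) intensity of two identical slits, normalized to i0 at theta = 0.

    I(theta) = i0 * cos^2(pi d sin(theta) / lambda) * [sin(beta) / beta]^2,
    with beta = pi a sin(theta) / lambda, d the slit separation and a the slit width.
    """
    s = math.sin(theta)
    phase = math.pi * slit_sep * s / wavelength
    beta = math.pi * slit_width * s / wavelength
    envelope = 1.0 if beta == 0.0 else (math.sin(beta) / beta) ** 2
    return i0 * math.cos(phase) ** 2 * envelope


if __name__ == "__main__":
    lam, d, a = 500e-9, 50e-6, 10e-6   # illustrative: green light, 50 um spacing, 10 um slits
    for k in range(6):
        theta = math.asin(k * lam / (2 * d))   # even k: bright fringes; odd k: dark fringes
        print(f"theta = {theta * 1e3:.2f} mrad  I/I0 = {two_slit_intensity(theta, lam, d, a):.4f}")
```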

Everett Collection

Also central is that in analyzing this experiment textbook authors essentially throw up their hands and surrender. Recollecting that light is an electromagnetic wave, authors invariably begin by talking about the intensity of the incident light, which is a measure of the strength of the electric and magnetic fields. Then in a complete non sequitur, they shift the conversation to photons, as if the quantum-mechanical beastlets have electric and magnetic fields like classical light waves. They don’t. In fact, an accurate description of the famous experiment requires a more subtle quantum-mechanical entity known as a coherent state, which is the closest thing to a classical light wave.
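One standard property of coherent states helps explain why they are the right bridge between the two pictures. The number of photons found in a coherent state |α⟩ follows a Poisson distribution with mean |α|². The relative fluctuation 1/|α| therefore shrinks as the field gets stronger, and the state looks more and more like a classical wave. Below is a minimal sketch of that distribution; the function names are our own.

```python
import math


def coherent_photon_distribution(alpha, n_max):
    """P(n) = exp(-|alpha|^2) |alpha|^(2n) / n! for n = 0..n_max (a Poisson distribution).

    Built by the recurrence P(n) = P(n - 1) * |alpha|^2 / n to avoid huge factorials.
    """
    mean = abs(alpha) ** 2
    p = math.exp(-mean)
    probs = [p]
    for n in range(1, n_max + 1):
        p *= mean / n
        probs.append(p)
    return probs


def mean_and_variance(probs):
    """Mean and variance of the photon number for a list of probabilities P(0), P(1), ..."""
    mean = sum(n * p for n, p in enumerate(probs))
    var = sum((n - mean) ** 2 * p for n, p in enumerate(probs))
    return mean, var


if __name__ == "__main__":
    for alpha in (1.0, 3.0, 10.0):
        m, v = mean_and_variance(coherent_photon_distribution(alpha, n_max=400))
        print(f"|alpha| = {alpha:5.1f}: mean photons {m:8.3f}, variance {v:8.3f}, "
              f"relative spread {math.sqrt(v) / m:.3f}")
```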

What’s more, by resorting to a classical optics analogy of the experiment, authors are forgoing any explanation whatsoever. “Explanation” in physics generally means to find a causal mechanism for something to happen, a mechanism involving forces, but textbook optics affords no such explanation of slit experiments. Rather than describing how the light interacts with the slits, thus explaining why it behaves as it does, we merely demand that the light wave meet certain conditions at the slit edge and forget about the actual forces involved. The results agree well with observation, but the most widely used of such methods not only avoids the guts of the problem but is mathematically inconsistent. Not to mention that the measurement problem remains in full force.

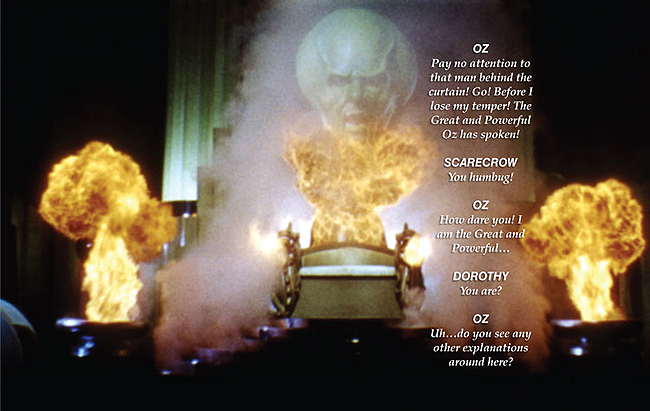

Such examples abound throughout physics. Rather than pretending that they don’t exist, physics educators would do well to acknowledge when they invoke the Wizard working the levers from behind the curtain. Even towards the end of the twentieth century, physics was regarded as received Truth, a revelation of the face of God. Some physicists may still believe that, but I prefer to think of physics as a collection of models, models that map the territory, but are never the territory itself. That may smack of defeatism to many, but ultimate answers are not to be grasped by mortals. Physicists have indeed gone further than other scientists in describing the natural world; they should not confuse description with understanding.
