The Nature of Scientific Proof in the Age of Simulations

By Kevin Heng

Is numerical mimicry a third way of establishing truth?

DOI: 10.1511/2014.108.174

Empiricism lies at the heart of the scientific method. It seeks to understand the world through experiment and experience. The scientific method’s cycle of formulating and testing falsifiable hypotheses has amalgamated with a modern form of rationalism: the use of reasoning, mathematics, and logic to understand nature. These schools of thought are couched in centuries of history and, until recently, remained largely distinct.

The Royal Collection © 2014 Her Majesty Queen Elizabeth II/The Bridgeman Art Library.

Proponents of empiricism include the 18th-century Scottish philosopher David Hume, who believed in a subjective, sensory-based perception of the world. Rationalism is the belief that the use of reasoning alone is sufficient to understand the natural world, without any recourse to experiment. Its roots may be traced to the Greek philosophers Aristotle, Plato, and Pythagoras; its more modern proponents include Kant, Leibniz, and Descartes.

A clear example of both practices at work is in the field of astronomy and astrophysics. Astronomers discover, catalog, and attempt to make sense of the night sky using powerful telescopes. Astrophysicists mull over theoretical ideas, form hypotheses, make predictions for what one expects to observe, and attempt to discover organizing principles unifying astronomical phenomena. Frequently, researchers are practitioners of both subdisciplines.

Problems in astrophysics (and physics in general) may often be rendered tractable by concentrating on the characteristic length, time, or velocity scales of interest. When trying to understand water as a fluid, it is useful to treat it as a continuous medium rather than as an enormous collection of molecules, because doing so makes it vastly easier to visualize (and compute) its macroscopic behavior. Although the Earth is evolving on geological time scales, its global climate is essentially invariant from one day to the next, hence the difficulty in explaining the urgency of climate change to the public. The planets of the Solar System do not orbit a static Sun: the Sun performs a ponderous wobble about the Solar System’s center of mass because of the planets’ collective gravitational tug, but it is often sufficient to picture it as stationary. Milankovitch cycles cause the eccentricity of the Earth’s orbit and the obliquity of its spin axis to vary over tens to hundreds of thousands of years, but these quantities are essentially constant over a human lifetime.
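A back-of-the-envelope check of why the Sun’s wobble can often be neglected follows. It is a sketch under two simplifying assumptions: only Jupiter, the dominant contributor, is kept, and its orbit is treated as circular. The constants are rounded standard values.

```python
# Sun-Jupiter two-body estimate of the solar wobble.
# Assumptions: ignore all other planets; treat Jupiter's orbit as circular.
AU = 1.495978707e11         # astronomical unit, m
R_SUN = 6.957e8             # nominal solar radius, m
A_JUPITER = 5.2038 * AU     # Jupiter's mean orbital radius (approximate), m
MASS_RATIO = 1 / 1047.35    # Jupiter's mass divided by the Sun's (approximate)

def barycenter_offset(separation, mass_ratio):
    """Distance from the heavier body's center to the two-body center of mass."""
    return separation * mass_ratio / (1 + mass_ratio)

offset = barycenter_offset(A_JUPITER, MASS_RATIO)
print(f"offset: {offset / R_SUN:.2f} solar radii")
print(f"as a fraction of Jupiter's orbit: {offset / A_JUPITER:.1e}")
```

The Sun’s center ends up roughly one solar radius from the Sun-Jupiter center of mass, about a thousandth of Jupiter’s orbital distance, which is why a fixed Sun is usually a good enough picture.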

This separation of scales strips a problem down to its bare essence, allowing one to gain insight into the salient physics at the scale of interest.

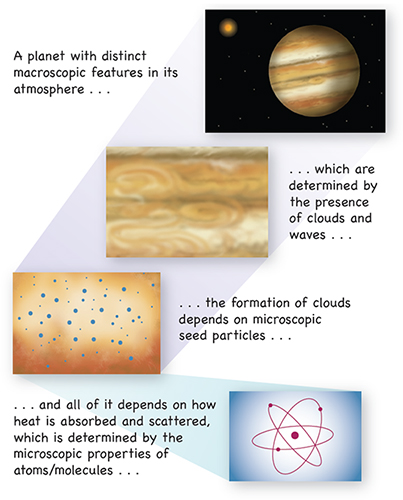

Multiscale problems, on the other hand, do not lend themselves to such simplification. Small disturbances in a system might show up as big effects across myriad sizes and time scales. Structures on very large scales “talk” to features on very small scales and vice versa. For example, a grand challenge in astrophysics is understanding planet formation—being able to predict the diversity of exoplanets forming around a star, starting from a primordial cloud of gas and dust. Planet formation is an inherently multiscale problem: Uncertainties on microscopic scales, such as how turbulence and the seed particles of dust grains are created, hinder our ability to predict the outcome on celestial scales. Many real-life problems in biology, chemistry, physics, and the atmospheric and climate sciences are multiscale as well.

By necessity, a third, modern way of testing and establishing scientific truth—in addition to theory and experiment—is via simulations, the use of (often large) computers to mimic nature. It is a synthetic universe in a computer. One states an equation (or several) describing the physical system being studied, programs it into a computer, and marches the system forward in space and time. If all of the relevant physical laws are faithfully captured, then one ends up with an emulation—a perfect, The Matrix–like replication of the physical world in virtual reality.
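The recipe just described (state an equation, program it, march it forward) can be sketched with a deliberately simple stand-in: the one-dimensional diffusion equation ∂u/∂t = D ∂²u/∂x², solved with an explicit finite-difference scheme on a periodic grid. The equation, grid size, and time step are illustrative assumptions; real astrophysical codes solve far richer systems.

```python
# Explicit finite-difference sketch of du/dt = D d^2u/dx^2 on a periodic 1D grid.
# The equation, grid, and parameters are illustrative assumptions.

def step_diffusion(u, D, dx, dt):
    """Advance u by one forward-Euler step using a centered second difference."""
    n = len(u)
    r = D * dt / dx**2
    # u[i - 1] wraps to u[-1] at i = 0, and (i + 1) % n wraps at the other end.
    return [u[i] + r * (u[(i + 1) % n] - 2 * u[i] + u[i - 1]) for i in range(n)]

def simulate(n_cells=50, D=1.0, t_end=0.01):
    dx = 1.0 / n_cells
    dt = 0.4 * dx**2 / D           # inside the stability limit dt <= dx^2 / (2 D)
    u = [0.0] * n_cells
    u[n_cells // 2] = 1.0 / dx     # a single spike holding one unit of "heat"
    t = 0.0
    while t < t_end:               # march forward in time, one slice at a time
        u = step_diffusion(u, D, dx, dt)
        t += dt
    return u, dx

u, dx = simulate()
print("total heat:", sum(u) * dx)  # conserved by this scheme up to rounding
print("peak value:", max(u))       # the spike has spread out
```

The time step is kept below the explicit stability limit Δt ≤ Δx²/(2D); exceeding it makes this particular scheme blow up.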

In astronomy and astrophysics, this third way has come into its own, largely due to the unique status of astronomy as an experimental science. Unlike other, laboratory-based disciplines, astronomers may not exert full control over their experiments—one simply cannot rearrange objects in the sky. Astronomical phenomena often encode information about a subpopulation of a class of objects at a very specific moment in their evolution. To understand the entire population of a class of objects across cosmic time requires large computer simulations of their formation and evolution. Examples of different classes of objects include exoplanets, stars, black holes, galaxies, and even clusters of galaxies. The hope is that these simulations lead to big-picture understanding that unifies seemingly unrelated astronomical phenomena.

From the 1940s through the 1980s, the late, distinguished Princeton astrophysicist Martin Schwarzschild was one of the first to use simulations to gain insights into astronomy, harnessing them to understand the evolution of stars and galaxies. Schwarzschild realized that the equations governing stellar structure are nonlinear and not amenable to analytical (pencil-and-paper) solutions, not least because they require an understanding of the physics of nuclear burning, and that galaxies, which are hardly perfect spheres, pose similar difficulties. He proceeded to investigate both using numerical solutions generated by what were, at the time, large computers.
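Schwarzschild’s stellar models solved a much richer set of equations, but the flavor of the problem can be sketched with the Lane-Emden equation for an idealized polytropic star, θ'' + (2/ξ)θ' + θⁿ = 0 with θ(0) = 1 and θ'(0) = 0. It is nonlinear and has closed-form solutions only for n = 0, 1, and 5, so for other indices one integrates it numerically. The sketch below uses a classical fourth-order Runge-Kutta step; the step size and starting radius are assumptions chosen for illustration.

```python
import math

def lane_emden_surface(n, h=1e-3):
    """First zero xi_1 of the Lane-Emden solution theta(xi) for polytropic index n.

    Integrates theta'' = -theta**n - (2/xi) theta' with classical RK4.
    Only 0 <= n < 5 gives a star of finite radius, so other n are rejected.
    """
    if not 0 <= n < 5:
        raise ValueError("a finite surface exists only for 0 <= n < 5")

    def rhs(xi, theta, dtheta):
        # max(theta, 0) keeps theta**n real if an RK stage overshoots the surface.
        return dtheta, -max(theta, 0.0) ** n - 2.0 * dtheta / xi

    # Start just off-center from the series solution to avoid the 2/xi singularity.
    xi = 1e-3
    theta = 1.0 - xi**2 / 6 + n * xi**4 / 120
    dtheta = -xi / 3 + n * xi**3 / 30
    while True:
        k1t, k1d = rhs(xi, theta, dtheta)
        k2t, k2d = rhs(xi + h / 2, theta + h / 2 * k1t, dtheta + h / 2 * k1d)
        k3t, k3d = rhs(xi + h / 2, theta + h / 2 * k2t, dtheta + h / 2 * k2d)
        k4t, k4d = rhs(xi + h, theta + h * k3t, dtheta + h * k3d)
        new_theta = theta + h / 6 * (k1t + 2 * k2t + 2 * k3t + k4t)
        new_dtheta = dtheta + h / 6 * (k1d + 2 * k2d + 2 * k3d + k4d)
        if new_theta <= 0.0:
            # Linear interpolation between the last two points locates the surface.
            return xi + h * theta / (theta - new_theta)
        xi, theta, dtheta = xi + h, new_theta, new_dtheta

for index in (0, 1, 3):
    print(index, lane_emden_surface(index))
print("analytic values for n = 0 and 1:", math.sqrt(6), math.pi)
```

For n = 1 the result should land close to π, matching the analytic solution sin ξ/ξ; for n = 3, often used as a rough model of Sun-like stars, no closed form exists.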

Both lines of inquiry have since blossomed into respected and full-fledged subdisciplines in astrophysics. Nowadays, an astrophysicist is as likely to be found puzzling over the engineering of complex computer code as he or she is to be found fiddling with mathematical equations on paper or chalkboard.

From the 1990s to the present, the approach of using computer simulations for testing hypotheses flourished. As technology advanced, astronomical data sets became richer, motivating the need for more detailed theoretical predictions and interpretations. Computers became more prevalent and faster, alongside rapid advances in the algorithmic techniques developed by computational science. Inexorably, the calculations produced by large simulations evolved to resemble experimental data sets in size, detail, and complexity.

Computational astrophysicists now come in three variants: engineers to build the code, researchers to formulate hypotheses and design numerical experiments, and others to process and interpret the resulting massive output. Supercomputing centers function almost like astronomical observatories. For better or worse, this third way of establishing scientific truth appears to be here to stay.

In a series of lunchtime conversations with astrophysicist Piet Hut of the Institute for Advanced Study in Princeton, I discovered that we were both concerned about the implications of these ever-expanding simulations. Computational astrophysics has adopted some of the terminology and jargon traditionally associated with the experimental sciences. Simulations may legitimately be regarded as numerical experiments, complete with the assumptions, caveats, and limitations associated with any traditional, laboratory-based experiment. Simulated results are often described as being empirical, a term usually reserved for natural phenomena rather than numerical mimicries of nature. Simulated data are referred to as data sets, seemingly placing them on an equal footing with observed natural phenomena.

Illustration by Barbara Aulicino.

The Millennium Simulation Project, designed and executed by the Max Planck Institute for Astrophysics in Munich, Germany, provides a pioneering example of such an approach. It is a massive simulation of a universe in a box, elucidating the very fabric of the cosmos. The data sets generated by these simulations are so widely used that entire workshops are organized around them. Mimicry has supplanted astronomical data.

It is not far-fetched to say that all theoretical studies of nature are approximations. There is no single equation that describes all physical phenomena in the universe—and even if we could write one down in principle, solving it would be prohibitive, if not downright impossible. The equations we study as theorists are merely approximations of nature. Schrödinger’s equation describes the quantum world in the absence of gravity. The Navier-Stokes equation is a macroscopic description of fluids. Newton’s equation describes gravity accurately under terrestrial conditions, superseded only by Einstein’s equations under less familiar conditions.

To understand the orbital motion of exoplanets around distant stars, it is mostly sufficient to consider only Newtonian gravity. Understanding the appearance of these exoplanets’ atmospheres requires approximating them as fluids and understanding the macroscopic manifestations of the quantum mechanical properties of the individual molecules: their absorption and scattering properties. Each of these governing equations is based on a law of nature: the conservation of mass, energy, or momentum, or of some other, more abstract generalized quantity such as potential vorticity. One selects the appropriate governing equation and solves it in the relevant physical regime, thus creating a model that captures a limited set of salient properties of a physical system. The term “model” itself is widely abused; a “model” that is not based on a law of nature has little right to be called one.
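As a small worked example of selecting a governing law and solving it in a relevant regime, Newton’s law of gravitation for two bodies yields Kepler’s third law, P = 2π√(a³/(G(M + m))). With measured inputs only, there is nothing to tune. The constants are rounded standard values, and the close-in planet at 0.05 AU is hypothetical.

```python
import math

GM_SUN = 1.32712440018e20   # G times the Sun's mass, m^3 s^-2
AU = 1.495978707e11         # astronomical unit, m
DAY = 86400.0               # s

def orbital_period(a, gm_total):
    """Orbital period (s) for semimajor axis a (m) from Newton's form of Kepler's third law."""
    return 2 * math.pi * math.sqrt(a**3 / gm_total)

# Earth around the Sun (Earth's own mass neglected): close to one year.
earth_days = orbital_period(AU, GM_SUN) / DAY
# Hypothetical giant planet 0.05 AU from a Sun-like star, planet mass neglected.
close_in_days = orbital_period(0.05 * AU, GM_SUN) / DAY
print(f"Earth: {earth_days:.2f} days; close-in planet: {close_in_days:.2f} days")
```

The Earth comes out at about 365.25 days and the hypothetical close-in planet at roughly four days, with no free parameters involved.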

A fundamental limitation of any simulation is that there is a practical limit to how finely one may slice space and time in a computer such that the simulation completes within a reasonable amount of time (say, within the duration of one’s Ph.D. thesis). For multiscale problems, there will always be phenomena operating on scales smaller than the size of one’s simulation pixel. Astrophysicists call these subgrid physics—literally physics happening below the grid of the simulation. This difficulty of simulating phenomena from microscopic to macroscopic scales, across many, many orders of magnitude in size, is known as a dynamic range problem.

As computers become more powerful, one may always run simulations that explore a greater range of sizes and divide up space and time ever more finely, but in multiscale problems there will always be unresolved subgrid phenomena. Astrophysics and climate science appear to share this nightmare. In simulating the formation of galaxies, the birth, evolution, and death of stars determine the global appearance of the synthetic galaxies themselves. Galaxies typically span tens of thousands of light years, whereas stars operate on scales that are roughly 100 billion times smaller.
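The dynamic range problem can be put in numbers with a rough estimate. The sketch assumes a galaxy 50,000 light years across and a Sun-sized star, both round values chosen for illustration. A uniform three-dimensional grid fine enough to resolve individual stars everywhere would need the per-dimension ratio cubed.

```python
import math

LIGHT_YEAR = 9.4607e15   # m
R_SUN = 6.957e8          # nominal solar radius, m

galaxy_size = 5e4 * LIGHT_YEAR   # assumed galaxy diameter: 50,000 light years
star_size = 2 * R_SUN            # assumed star: Sun-sized, measured by diameter

dynamic_range = galaxy_size / star_size   # largest over smallest scale, per dimension
cells_3d = dynamic_range ** 3             # cells in a uniform 3D grid resolving stars everywhere

print(f"dynamic range per dimension: 10^{math.log10(dynamic_range):.1f}")
print(f"cells in a uniform 3D grid:  10^{math.log10(cells_3d):.1f}")
```

The per-dimension ratio comes out at a few times 10¹¹, consistent with the “roughly 100 billion” figure, and cubing it gives more than 10³⁴ cells before a single time step is counted.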

The climate of Earth appears to be significantly influenced by clouds, which both heat and cool the atmosphere. On scales of tens to hundreds of kilometers, the imperfect cancellation between these two effects is what matters. To get the details of this cancellation correct, we need to understand how the clouds formed and how their emergent properties developed, which ultimately requires an intimate understanding of how the microscopic seed particles of clouds were first created. Remarkably, uncertainties about cloud formation on such fine scales are hindering our ability to predict whether a given exoplanet is potentially habitable. Cloud formation remains a largely unsolved puzzle across several scientific disciplines. In both examples, it remains challenging to simulate the entire range of phenomena, due to the prohibitive amount of computing time needed and our incomplete understanding of the physics involved on smaller scales.
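Why imperfect cancellation is so unforgiving can be shown with simple error arithmetic. The numbers below are invented round values, not measurements, chosen only to show the effect: when two large terms of opposite sign nearly cancel, a modest fractional uncertainty in each becomes a much larger fractional uncertainty in their sum.

```python
import math

# Illustrative round numbers only (not measured values).
warming = 30.0    # hypothetical cloud heating term, W m^-2
cooling = -50.0   # hypothetical cloud cooling term, W m^-2
rel_err = 0.10    # assumed 10% independent uncertainty in each term

net = warming + cooling
# Independent errors add in quadrature.
net_err = math.hypot(rel_err * warming, rel_err * cooling)
print(f"net = {net:.1f} +/- {net_err:.1f} W m^-2 ({abs(net_err / net):.0%} relative)")
```

A 10% uncertainty in each term turns into roughly a 30% uncertainty in the net effect under these assumed values.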

Another legitimate concern is the use of simulations as “black boxes” to churn out results and generate seductive graphics or movies without deeply questioning the assumptions involved. For example, simulations involving the Navier-Stokes equation often assume a Newtonian fluid, one that retains no memory of what was done to it in the past and whose resistance (or friction) when its layers are forced to slide past one another grows in simple proportion to how fast they slide. Newtonian fluids are a plausible starting point for a rich variety of simulations, ranging from planetary atmospheres to accretion disks around black holes. Curiously, several common fluids are non-Newtonian. Dough is an example of a fluid with a memory of its past states, whereas ketchup tends to become less viscous the faster it is deformed. Attempting to simulate these fluids using a Newtonian assumption is an exercise in futility.
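One common way to describe ketchup-like behavior is the power-law (Ostwald-de Waele) model, in which the apparent viscosity is K·γ̇^(n−1) at shear rate γ̇. Setting n = 1 recovers a Newtonian fluid with constant viscosity, while n < 1 gives shear thinning. The values of K and n below are hypothetical and the units are arbitrary.

```python
def apparent_viscosity(shear_rate, K, n):
    """Apparent viscosity K * shear_rate**(n - 1) of a power-law fluid.

    n = 1 gives a Newtonian fluid of constant viscosity K; n < 1 is shear thinning.
    """
    return K * shear_rate ** (n - 1)

shear_rates = [0.1, 1.0, 10.0, 100.0]   # s^-1
newtonian = [apparent_viscosity(g, K=1.0, n=1.0) for g in shear_rates]
shear_thinning = [apparent_viscosity(g, K=1.0, n=0.3) for g in shear_rates]  # hypothetical K, n

for g, mu_n, mu_st in zip(shear_rates, newtonian, shear_thinning):
    print(f"shear rate {g:>6}: Newtonian {mu_n:.3f}, shear-thinning {mu_st:.3f}")
```

This model has no memory, so it captures only shear thinning; the memory of dough (viscoelasticity) calls for different constitutive models altogether.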

To use a simulation as a laboratory, one has to understand how to break it; otherwise, one may mistake an artifact for a result. In approximating continua as being discrete, one has to pay multiple penalties. Spurious oscillations or enhanced viscosity that are artifacts of this procedure may easily be misinterpreted as being physically meaningful. Slicing up space and time in a simulation may introduce features that look like real waves or make the fluid more viscous in an artificial way. The conservation of mass, momentum, and energy, cornerstones of theoretical physics, may no longer be taken for granted in a simulation: whether these quantities are conserved depends on the numerical scheme being employed, even if the governing equation conserves all of them perfectly on paper.
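A toy version of the conservation point uses a harmonic oscillator x'' = −x, an illustrative choice rather than anything from the article. The equation conserves the energy E = (x² + v²)/2 exactly, yet a forward Euler scheme inflates it by a factor of 1 + Δt² every step, while the leapfrog (kick-drift-kick) scheme keeps the error bounded. The step size and run length are arbitrary.

```python
def euler(x, v, dt, steps):
    """Forward Euler for x'' = -x: both updates use the old values."""
    for _ in range(steps):
        x, v = x + dt * v, v - dt * x
    return x, v

def leapfrog(x, v, dt, steps):
    """Kick-drift-kick leapfrog for x'' = -x (a symplectic scheme)."""
    for _ in range(steps):
        v -= 0.5 * dt * x   # half kick
        x += dt * v         # drift
        v -= 0.5 * dt * x   # half kick
    return x, v

def energy(x, v):
    return 0.5 * (x * x + v * v)

dt, steps = 0.1, 1000
e0 = energy(1.0, 0.0)
e_euler = energy(*euler(1.0, 0.0, dt, steps))
e_leap = energy(*leapfrog(1.0, 0.0, dt, steps))
print(f"forward Euler: E/E0 = {e_euler / e0:.3g}")
print(f"leapfrog:      E/E0 = {e_leap / e0:.6f}")
```

Both schemes discretize the same equation, but after 1,000 steps the Euler energy has grown by a factor of more than 10⁴ while the leapfrog energy stays within a fraction of a percent of its initial value.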

Despite these concerns, a culture of “bigger, better, faster” is prevailing. It is not uncommon to hear discussions centered on how one can make one’s code more complex and run even faster on a mind-boggling number of computing cores. It is almost as if gathering exponentially increasing amounts of information will automatically translate into knowledge, that the simulated system attains self-awareness. As terabytes upon terabytes of information are being churned out by ever more massive simulations, the gulf between information and knowledge is widening. We appear to be missing a set of guiding principles—a metacomputational astrophysics, for lack of a better term.

Questions for metacomputational astrophysics include: Is scientific truth more robustly represented by the simplest or the most complex model? (Many would say simplest, but this view is not universally accepted.) How may we judge when a simulation has successfully approximated reality in some way? (The visual inspection of a simulated image of, say, a galaxy versus one obtained with a telescope is sentimentally satisfying, but objectively unsatisfactory.) When is “bigger, better, faster” enough? Does one obtain an ever-better physical answer by simply ramping up the computational complexity?

An alternative approach is to construct a model hierarchy—a suite of models of varying complexity that develops understanding in steps, allowing each physical effect to be isolated. Model hierarchies are standard practice in climate science. Focused models of microprocesses (turbulence, cloud formation, and so on) buttress global simulations of how the atmosphere, hydrosphere, biosphere, cryosphere, and lithosphere interact.
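In climate science, the lowest rungs of such a hierarchy are often simple energy-balance models. A minimal sketch of two rungs follows, assuming rounded values for the solar constant and Earth’s albedo: the first balances absorbed sunlight against emitted infrared radiation, and the second adds a single idealized greenhouse layer, isolating that one effect.

```python
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
S0 = 1361.0              # solar constant at Earth, W m^-2 (approximate)
ALBEDO = 0.3             # Earth's Bond albedo (approximate)

def equilibrium_temperature(s0, albedo):
    """Rung 0: absorbed sunlight, averaged over the sphere, equals emitted infrared."""
    return (s0 * (1 - albedo) / (4 * SIGMA)) ** 0.25

def one_layer_surface_temperature(s0, albedo):
    """Rung 1: add one atmospheric layer that is transparent to sunlight
    but absorbs all infrared, an idealized greenhouse effect."""
    return 2 ** 0.25 * equilibrium_temperature(s0, albedo)

Te = equilibrium_temperature(S0, ALBEDO)
Ts = one_layer_surface_temperature(S0, ALBEDO)
print(f"rung 0: {Te:.1f} K, rung 1: {Ts:.1f} K")
```

The first rung gives roughly 255 K and the second roughly 303 K. Neither matches Earth’s observed mean surface temperature of about 288 K, which is why further rungs are added, each isolating another physical effect.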

With increasingly complex simulations, there are also questions surrounding the practice of science. It is not unheard of to encounter published papers in astrophysics where insufficient information is provided for the reproduction of simulated results. Frequently, the computer codes used to perform these simulations are proprietary and complex enough that it would take years and the dedicated efforts of a research team to completely re-create one of them. Scientific truth is monopolized by a few and dictated to the rest. Is it still science if the results are not readily reproducible? (Admittedly, “readily” has a subjective meaning.)

There are also groups and individuals who take the more modern approach of making their codes open source. This has the tremendous advantage that the task of scrutinizing, testing, validating, and debugging the code no longer rests on the shoulders of an individual, but of the entire community. Some believe this amounts to giving away trade secrets, but there are notable examples of researchers whose careers have blossomed partly because of influential computer codes they made freely available.

A pioneer in this regard is Sverre Aarseth, a Cambridge astrophysicist who wrote and gave away codes that computed the evolution of astronomical objects (planets, stars, and so on) under the influence of gravity. Jim Stone of Princeton and Romain Teyssier of Zurich are known for authoring a series of codes that solve the equations of magnetized fluids, which have been used to study a wide variety of problems in astrophysics. Volker Springel of Heidelberg made his mark via the Millennium Simulation Project. In all of these cases, the publicly available computer codes became influential because other researchers incorporated them into their repertoire.

A related issue is falsifiability. If a physical system is perfectly understood, its model leaves no freedom in specifying the inputs. Adjustable inputs are what astrophysicists technically call free parameters. Quantifying how the sodium atom absorbs light provides a fine example: it is a triumph of quantum physics that such a calculation requires no free parameters. In large-scale simulations, there are always physical aspects that are poorly or incompletely understood and need to be mimicked by approximate models that specify free parameters. Often, these pseudomodels are not based on fundamental laws of physics but consist of ad hoc functions calibrated on experimental data or smaller-scale simulations, which may not be valid in all physical regimes.

An example is the planetary boundary layer on Earth, which arises from the friction between the atmospheric flow and the terrestrial surface and is an integral part of the climatic energy budget. The exact thickness of the planetary boundary layer depends on the nature of the surface; whether it is an urban area, grasslands, or ocean matters. Such complexity cannot be directly and feasibly computed in a large-scale climate simulation. Hence, one needs experimentally measured prescriptions for the thickness of this layer as inputs for the simulation. To unabashedly apply these prescriptions to other planets (or exoplanets) is to stand on thin ice. Worryingly, there is an emerging subcommunity of researchers switching over to exoplanet science from the Earth sciences who are bringing with them such Earth-centric approaches.

To form large-scale galaxies, one needs prescriptions for star formation and how supernovas feed energy back into their environments. To simulate the climate, one needs prescriptions for turbulence and precipitation. Such prescriptions often employ a slew of free parameters that are either inadequately informed by data or involve poorly known physics.

As the number of free parameters in a simulation increases, so do the diversity and variety of simulated results. In the most extreme limit, the simulation predicts everything: it is consistent with every outcome anticipated. A quote attributed to John von Neumann describes it best: “With four parameters, I can fit an elephant and with five I can make him wiggle his trunk.” Inattention to falsifiability has been chided by Wolfgang Pauli, who remarked, “It is not only incorrect, it is not even wrong.” A simulation that cannot be falsified can hardly be considered science.
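A deliberately extreme caricature of this point uses polynomial interpolation as a stand-in for a simulation’s tunable prescriptions. Give a model as many free parameters as there are data points and it reproduces any data set exactly, including random numbers. Five points and five coefficients echo von Neumann’s elephant; the “observations” here are random by design.

```python
import random

def interpolating_polynomial(xs, ys):
    """Lagrange form of the unique degree len(xs)-1 polynomial through every (x, y).

    It has as many free coefficients as there are data points.
    """
    def p(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            basis = 1.0
            for j, xj in enumerate(xs):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return p

random.seed(0)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]   # five data points, five coefficients
misfits = []
for trial in range(3):
    ys = [random.uniform(-10.0, 10.0) for _ in xs]   # arbitrary "observations"
    model = interpolating_polynomial(xs, ys)
    misfits.append(max(abs(model(x) - y) for x, y in zip(xs, ys)))
print("worst misfit per trial:", misfits)
```

A perfect fit to random numbers says nothing about whether the model is right, which is the falsifiability problem in miniature.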

Simulations as a third way of establishing scientific truth are here to stay. The challenge is for the astrophysical community to wield them as transparent, reproducible tools, thereby placing them on an equally credible footing with theory and experiment.

The author is grateful to Scott Tremaine and Justin Read for feedback on a draft version of the article.
