This Article From Issue

May-June 2006

Volume 94, Number 3

Page 195

DOI: 10.1511/2006.59.195

To the Editors:

The article "Reverse Engineering" by Brian Hayes (Computing Science, March-April) provided a clear explanation of a very difficult topic. However, I have a question regarding reversible logic.

A quick calculation gave me 40,320 reversible circuits with 3 inputs and 3 outputs, with about 750 of them being their own inverses. My question then is why Edward Fredkin and Tommaso Toffoli chose the ones they did.

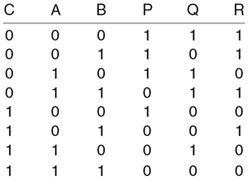

Among the many circuits that have useful properties, one is given by the following table, using the same notation as in the article:

I have interchanged the input columns for clarity. If we consider the input C as a control input, then when C = 0, P is the NAND function of A and B, and when C = 1, P is the NOR function of A and B. This circuit is reversible and its own inverse. Both the NAND and NOR functions are computationally universal as defined in the article. In addition, design with either NAND or NOR gates is simple and is taught to computer engineers early in their educational careers.

Ed Kresch

Villanova University

Villanova, PA

To the Editors:

In "Reverse Engineering," Brian Hayes states that no energy is lost in changing a bit from one to zero. This is a First Law of Thermodynamics argument (conservation of energy). The Second Law of Thermodynamics, on the other hand, does require that energy be lost.

His example of a car burning fuel is illustrative. He proposes that you could "unburn the gasoline by bringing those molecules back together in the right order." This also is a First Law argument. The Second Law says that this is impossible. According to the Second Law, no matter what information you have, or how hard you try, you cannot collect all the molecules or recover all the energy that was released. Inevitably some of the molecules and energy will escape, so if you want to unburn the gasoline, you will have to supply the missing molecules and energy.

Changing a bit from 1 to 0 also requires an input of energy. As you change a 1 to a 0, it goes through an intermediate stage where it is neither one nor zero. This represents an energy loss. According to the Second Law, you cannot recover all this energy. In order to complete the process of going from the intermediate stage to zero, you will have to supply the missing energy.

Greater efficiency in design may greatly decrease the energy consumption, but the Second Law says that it will never be reduced to zero.

Building a computer of millions of logic gates also involves the Third Law of Thermodynamics (complexity). The Third Law, paraphrased for this situation, would mean that millions of logic gates acting together would require more energy than the same number of logic gates acting separately.

William Levak

Ann Arbor, MI

Mr. Hayes replies:

Why did Edward Fredkin and Tommaso Toffoli choose two specific gate designs out of more than 40,000 possibilities? A historical question like this one is best answered by someone who was there at the time, so I asked Dr. Toffoli.

The Fredkin gate, he explained, was of special interest because it is not only reversible but also "conservative," meaning that the number of 0 bits and the number of 1 bits both remain constant as signals pass through the system.

Dr. Fredkin had in mind a computer in which bits are represented by physical particles, such as electrons or even billiard balls—objects that cannot be created or annihilated at will. The Fredkin gate, which either swaps two signals or passes them through unchanged, automatically enforces such a conservation law.

The Toffoli gate became a focus of attention for a different reason. Dr. Toffoli was looking for a way to embed the discrete logic of a digital computer within a physical system having a continuum of possible states, such as a mechanical linkage of levers or gears. Certain distinguished positions of the components would represent the Boolean values 0 and 1, but all the parts of the device would have to be capable of passing through intermediate positions as well.

Dr. Toffoli showed that a device of this kind, built out of wheels and crank-arms, could implement the logical function now known as the Toffoli gate. For related reasons the same gate was later taken up by Richard Feynman and others in studies of quantum computing.

However, as Dr. Kresch observes, many other reversible gates are also of potential interest. A gate similar to the one he discusses was important in proofs that reversible logic is a computational universal (equivalent in power to a Turing machine).

To answer Mr. Levak, arguments over the thermodynamics of computation have been going on for more than 40 years, and I suppose they may well continue for another 40 years.

The situation that Mr. Levak discusses—changing the state of a single bit—was analyzed in some detail by Rolf Landauer in 1961. Although the hardware commonly used for this purpose certainly does dissipate energy, Landauer showed that the loss is not inevitable.

To state his result more precisely, he showed that the energy cost hasno minimum. Therefore, it can be made arbitrarily small.

American Scientist Comments and Discussion

To discuss our articles or comment on them, please share them and tag American Scientist on social media platforms. Here are links to our profiles on Twitter, Facebook, and LinkedIn.

If we re-share your post, we will moderate comments/discussion following our comments policy.