This Article From Issue

May-June 2013

Volume 101, Number 3

Page 233

DOI: 10.1511/2013.102.233

TURING’S CATHEDRAL: The Origins of the Digital Universe. George Dyson. xxii + 401 pp. Pantheon/Vintage Books, 2012. $29.95 cloth, $16.95 paper.

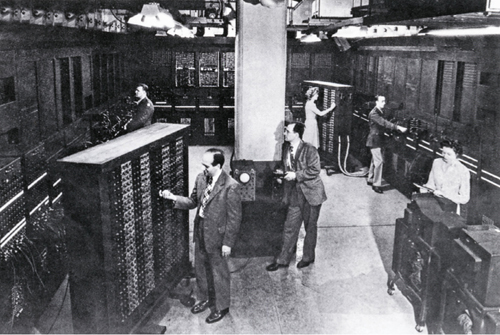

From Turing’s Cathedral.

The history of the Institute for Advanced Study in Princeton, New Jersey, involves a MANIAC. This early computer was aptly named—not only for the frenetic pace of activity it underwent 24 hours a day in its heyday, but also for the worldwide havoc in which it unwittingly played a role. Its calculations were key to both the development of nuclear bombs and the transformation of society into one based on digital media.

The MANIAC (for Mathematical and Numerical Integrator and Computer) became operational in 1951. It had five kilobytes of memory, less than what is allocated to displaying a single icon on a computer screen today. As technology historian George Dyson describes in Turing’s Cathedral, the MANIAC, which was not the first computer but which was widely emulated, “broke the distinction between numbers that mean things and numbers that do things.” He goes on: “Our universe would never be the same.”

The story Dyson tells, of the transition from analog to digital in the 20th century, is an unavoidably technical one, but there are plenty of personal stories to keep it moving. John von Neumann, a brilliant mathematical theorist who was the motivating force behind this computer, had a penchant for fast cars and reckless driving. In contrast, his main engineer, Julian Bigelow, had a talent for keeping jalopies going. In fact, although the book is named for Alan Turing, it could be seen largely as a biography of von Neumann and the cult of personality that surrounded him. Turing’s theoretical work is cited early on as the basis for all computers, but his personal story isn’t mentioned until the last third of the book (and part of that discussion is taken up with a comparison of Turing’s personality and von Neumann’s).

The Institute for Advanced Study was not the most natural place for a computer revolution to take place. It had been founded for purely theoretical work, and many of the faculty there shunned any tools beyond blackboard and chalk. When von Neumann began importing engineers in droves for his computer project, conflicts over legitimacy ensued, and postwar shortages meant that the resulting arguments extended to building materials and even sugar. Under these conditions, the ability of Bigelow and the other engineers to jerry-rig and improvise became essential.

Woven into the story are bitter exchanges about who should have received credit for which inventions. Von Neumann favored openly sharing results and discouraged patenting them, in order to advance the technology as quickly as possible. But he had his own lucrative consulting contracts, and an underlying theme of the book is that he did not go to great lengths to acknowledge the work of others properly.

The legacy of the MANIAC and other early computers lies in the breakthroughs in both logical architecture and software that they set in motion. But in the background there is also the history of nuclear weapons, the modeling of which was a driving force for building the computers. Indeed, without the pressure during that era to produce nuclear weapons, it’s likely that many of these machines would not have been built. Von Neumann was excited about the possibilities of computers for use in everything from meteorology to the life cycles of stars, but he had no compunctions about developing bombs. His view was likely colored by his hatred of the Nazi regime and his desire to prevent the Soviets from developing a similar legacy.

I found it refreshing that Dyson is quick to point out the part women played in the development of computers, both in supportive and key roles. Many of the first coders, who translated queries into language a machine could understand, were women—including von Neumann’s wife, Klári. Dyson emphasizes that “the view that early coders, such as Klári, were ‘doing the arithmetic’ without any understanding of the physics is wrong.”

Von Neumann died young, at age 53, of cancer. Without its messiah, the computer project at the Institute for Advanced Study lost support and was terminated. Bigelow had been given a permanent appointment shortly before these events. For reasons left unclear in the book, he turned down several offers from other places in order to stay where he was no longer really wanted. It seems likely that he could have done much more to accelerate advances in computing had he moved to an institution that valued his expertise more highly. By turning its back on computing for the next 20 years, the institute lost its shot at being at the forefront of many fields. Dyson quotes his father, physicist Freeman Dyson, who was at the institute at the time: “When von Neumann tragically died, the snobs took their revenge and got rid of the computing project root and branch. The demise of our computer group was a disaster not only for Princeton but for science as a whole. . . . We had the opportunity to do it, and we threw the opportunity away.”

Nonetheless, the legacy of von Neumann and the MANIAC reaches into fields considered cutting edge today. One of the earliest research projects von Neumann championed was a study by Nils Aall Barricelli on the foundations of artificial intelligence and the evolution of simple “organisms” within a computer. One wonders what von Neumann might have helped bring about had he lived longer.

Dyson rarely gives overt personal opinions about the stories he is telling. Instead he orders events within the text to make apparent a certain narrative flow, or an analogy between disparate subjects. Sometimes this classical-historian tendency can be a bit much: an entire chapter on the Colonial history of the area where the Institute was founded seems largely extraneous. And some of the references are rather oblique; for instance, he mentions the security hearing of Robert Oppenheimer but does not provide much detail about it. In addition, mathematics-averse readers may find their eyes glazing over at certain segments of technical detail, although the same discussions may appeal to those who like to know how algorithms, and the machines that ran them, really worked. There are also existential discussions that would sound bizarre out of context, such as whether advanced beings would reproduce digitally, and whether computers were originally created for their benefit. But Dyson is skilled at tying it all together. He hints at where he thinks computer science is headed: namely, toward machines that can execute multiple commands in parallel, instead of waiting for other pieces of the machine to tell them what to do. There’s no real way to predict where the digital universe is going, as Dyson notes, but then, that’s what makes it so interesting.
