Tiny Lenses See the Big Picture

By Fenella Saunders

New digital camera research takes inspiration from insect compound eyes.

DOI: 10.1511/2013.103.270

The single-imaging optic of the mammalian eye offers some distinct visual advantages. Such lenses can take in photons from a wide range of angles, increasing light sensitivity. They also have high spatial resolution, resolving incoming images in minute detail. It’s therefore not surprising that most cameras mimic this arrangement. But John Rogers, a materials scientist at the University of Illinois at Urbana-Champaign, and his colleagues think that cameras with hundreds of tiny lenses working in unison, much like an arthropod compound eye, could also have their uses. “Evolution has come up with a lot of clever solutions for very difficult problems,” he says, “and a lot of those solutions are not compatible, in geometry or material type, with engineered devices that exist today.”

The compound eye excels in some visual areas. “It allows visualization of one’s surroundings in all directions equally well, so there’s no peripheral part of the field of view in the sense that everything has the same crisp level of focus,” Rogers explains. “That’s much different from what you find in a commercial digital camera where you have a finite field of view, things are crisp and focused in the center and then things get worse out into the peripheral part of the field.

“Because of the very tiny lenses, the focal lengths are very short, and what that essentially provides is an infinite depth of field, so objects very close to the insect or in a distant part of the field all look sharp and in focus,” Rogers adds. “And the way that the eye is laid out, it creates an enhanced acuity to motion within the field of view.”

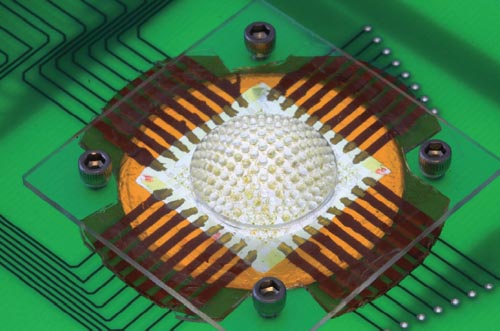

Photograph courtesy of the University of Illinois and Beckman Institute.

As Rogers and his colleagues report in the May 2 issue of Nature, they have developed a prototype camera lens that takes inspiration from a type of diurnal arthropod visual system called the apposition eye: Each optical unit (called an ommatidium) contributes just one point of photoreception to the overall image. “You can think about it like a hemispherical array of pinhole cameras,” Rogers explains. Although classic science fiction films might wish you to believe otherwise, most insect eyes do not form a complete image in each facet. As with most visual systems, including the human one, a lot of vision takes place in the brain, where information from each ommatidium is integrated to form a seamless picture of the world.

Some insect eyes, such as those of fire ants and bark beetles, have slightly more than a hundred lenses. Others, such as those of dragonflies, have tens of thousands. The camera lens from Rogers’s group currently has about 200.

To have a wide-angle field of view like an insect’s, the camera lens has to be hemispherical. However, creating and wiring a lens on a curve is a daunting task that would not lend itself to mass production. Instead, Rogers and his colleagues mold a flat lens array out of a transparent elastic polymer, which they can deform into a dome shape. Such flexible, molded components have become relatively common in optics and electronics research over the past decade (see, for instance, “Bring on the Soft Robots,” March–April 2012), so there are already scalable manufacturing techniques in place for these materials.

Wiring this bendable array of lenses required some creative thinking. Each lens has a radius of about 400 micrometers, whereas each photoreceptor is about 160 micrometers wide, so alignment must be precise. Rogers’s group developed a mesh of silicon photoreceptors, one for each lens, connected together with flexible wires. As a result the lenses and photoreceptors do not develop any distortions when the array is deformed. The finished array is about 15 millimeters wide.

“We only put small bits of silicon that are doing the light detection right at the focal spot of each of the lenses in the lens array, and that is the only place where the mesh is physically bonded to the lens layer,” Rogers says. “Everywhere else are these serpentine wavy wires that essentially act like springs. They can buckle and deform out of the plane during this inflation process. So you can stretch the whole thing, but the strain that the tiny silicon chips see is very small, even though the entire system is being blown up like a balloon. During this inflation, the whole thing has to operate elastically, in a predictable way, like a rubber band, not a piece of taffy.”

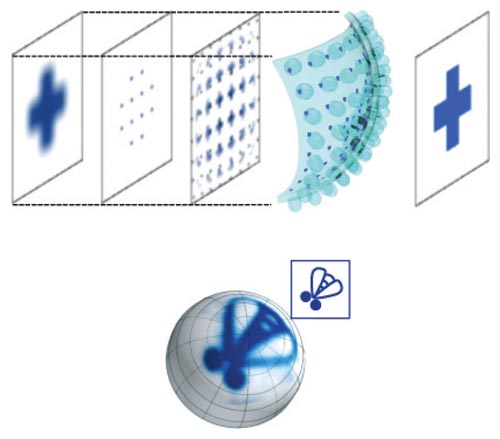

Images courtesy of John A. Rogers

The single point of light from each photoreceptor is fed into a computer, which processes all the points together to create a full image. The resolution of the image can be improved by rotating the array back and forth, so that each photoreceptor ends up contributing more than one point to the final image. This mechanism is a bit like the use of the fovea in the human eye, where one portion of the retina is densely packed with receptors, and the eye scans back and forth to keep this point at the center of current visual focus, while the brain integrates all the information to create a seamless image of the scene.
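The scanning idea can be illustrated with a toy model. The Python sketch below is not the group's software; the ring layout, the lens counts per ring, the `scene` function, and the choice to rotate about the dome's axis are all assumptions made for illustration. It only shows how one reading per lens becomes several readings per lens once exposures at slightly different rotations are combined.

```python
import math

N_RINGS = 8     # hypothetical layout, not from the article
PER_RING = 24   # 8 * 24 = 192 lenses, near the "about 200" in the prototype

def lens_directions():
    """Viewing direction (polar, azimuth) in radians for each lens on the dome."""
    return [
        ((math.pi / 2) * (ring + 0.5) / N_RINGS, 2 * math.pi * k / PER_RING)
        for ring in range(N_RINGS)
        for k in range(PER_RING)
    ]

def scene(polar, azimuth):
    """Toy monochrome scene: brightness varies smoothly with direction (0 to 1)."""
    return 0.5 + 0.5 * math.sin(polar) * math.cos(3 * azimuth)

def capture(rotation=0.0):
    """One exposure: each photoreceptor reports a single brightness value."""
    return [
        (polar, azimuth + rotation, scene(polar, azimuth + rotation))
        for polar, azimuth in lens_directions()
    ]

def scanned_capture(steps):
    """Combine exposures taken at `steps` evenly spaced rotations.

    The rotations span one lens-to-lens gap in azimuth, so the extra
    samples fall between the directions seen by the unrotated array.
    """
    gap = 2 * math.pi / PER_RING
    samples = []
    for s in range(steps):
        samples.extend(capture(rotation=s * gap / steps))
    return samples

single = capture()
scanned = scanned_capture(4)
print(len(single), len(scanned))  # 192 768
```

In this model, four rotation steps quadruple the number of distinct viewing directions without adding a single lens, which is the trade the article describes: time spent scanning in exchange for resolution.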

Currently the camera is able to create monochrome images of simple graphics. The resolution is somewhat limited because the relatively small number of optical elements can only sense a scant amount of light. Increasing the density of lenses, or the number of photoreceptors per lens, could make for a sharper picture, Rogers says. But the current image does not lose focus when the subject matter is moved through the array’s visual field. Also, as objects move farther away from the camera, they appear smaller but don’t become blurry.

Although there are many improvements the team wishes to explore, Rogers envisions the system being of use in surveillance devices, such as micro aerial vehicles, which could be used in disaster relief. The device may also be helpful in surgery, such as for endoscopic procedures, where a wide field of view would be advantageous for the physician.

“To do something realistic from a commercial standpoint really demands that we increase the resolution by a significant amount,” Rogers says. “But I have to tell you, there’s something about this kind of system that I just find appealing at a gut level, so our work is also motivated by pure discovery.”
