This article is from the March-April 2025 issue, Volume 113, Number 2, Page 75.

Chris Pickard is a materials scientist who employs what are called first principles methods—modeling techniques that work out material properties using fundamental rules such as quantum mechanics and Newton’s laws. Trained as a condensed matter physicist, he refocused on materials science just as interest in the field was exploding amid advancements in computation. Switching between empirical and theoretical sciences was good preparation for a field that works closely with experimentalists and testers, and that is itself becoming more empirical under the influence of machine learning. Pickard spoke with American Scientist associate editor Nicholas Gerbis about his early successes in studying hydrogen under high pressure, and his hopes for the future of his field. This interview has been edited for length and clarity.

You entered the field in the early 1990s, just as computing power began to enable more complex first principles models. Did you have a sense back then of where things were heading?

The visionaries had an idea of where it would go, but in practice we weren’t dealing with very complex or realistic systems. We started, really, with a treatment of the electrons in materials, and we used approximations of equations of quantum mechanics—Schrödinger’s equation [a probabilistic description of how quantum particles such as electrons behave] and so on—to describe how the atoms in a material will interact with each other and move around. But the things we’re doing now have gone way beyond where we started. It’s quite phenomenal, the progress we’ve made.

Over time, you’ve been able to reduce constraints, treat atoms less like stacked billiard balls, and cast a wider net for desired characteristics. But where do you begin your search?

I was one of the early people who realized that our computer codes were getting fast enough that we could start to try lots of different possibilities for stacking those atoms together in different patterns. This is what’s known as structure prediction. I developed an algorithm called ab initio random structure searching. You’re coming from first principles using quantum mechanics, then you’re randomly creating starting arrangements of atoms. You feed those into your computer, and you let them lower their energy to a nearby local minimum. If you did that once, you’d probably get a computational mess. But we had access to massive multicore supercomputers where we could do thousands of these at once. If you try thousands of things at random, one or two of them might turn out to give you something new.
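Editor's illustration: the loop Pickard describes (random starting arrangement, relax to a nearby local minimum, repeat many times, keep the best) can be sketched in a few lines. This is a toy under loud assumptions, not his algorithm or code. A classical Lennard-Jones pair potential in reduced units stands in for the quantum mechanical energy, a basic steepest-descent routine stands in for a production relaxer, and every function name and parameter below is hypothetical.

```python
import math
import random

def lj_energy(pos):
    """Total Lennard-Jones energy (reduced units). ASSUMPTION: a classical
    stand-in for the first principles energy used in real searches."""
    energy = 0.0
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            inv6 = 1.0 / sum((a - b) ** 2 for a, b in zip(pos[i], pos[j])) ** 3
            energy += 4.0 * (inv6 * inv6 - inv6)
    return energy

def lj_gradient(pos):
    """Analytic gradient of lj_energy with respect to every coordinate."""
    grad = [[0.0, 0.0, 0.0] for _ in pos]
    for i in range(len(pos)):
        for j in range(i + 1, len(pos)):
            d = [a - b for a, b in zip(pos[i], pos[j])]
            inv2 = 1.0 / sum(c * c for c in d)
            inv6 = inv2 ** 3
            coef = -24.0 * (2.0 * inv6 * inv6 - inv6) * inv2
            for k in range(3):
                grad[i][k] += coef * d[k]
                grad[j][k] -= coef * d[k]
    return grad

def relax(positions, max_steps=1000, tol=1e-6, max_move=0.1):
    """Steepest descent with step halving: slide downhill to a local minimum."""
    pos = [list(p) for p in positions]
    energy = lj_energy(pos)
    step = 0.01
    for _ in range(max_steps):
        grad = lj_gradient(pos)
        gmax = max(abs(c) for row in grad for c in row)
        if gmax < tol:
            break
        t = min(step, max_move / gmax)  # cap the largest single move
        improved = False
        while t > 1e-10:
            trial = [[p[k] - t * g[k] for k in range(3)] for p, g in zip(pos, grad)]
            e_trial = lj_energy(trial)
            if e_trial < energy:  # accept only moves that lower the energy
                pos, energy, step, improved = trial, e_trial, 2.0 * t, True
                break
            t *= 0.5
        if not improved:
            break
    return pos, energy

def random_structure(n_atoms, box, min_sep, rng):
    """Random atoms in a cube, rejecting any that land too close together."""
    atoms = []
    while len(atoms) < n_atoms:
        cand = [rng.uniform(0.0, box) for _ in range(3)]
        if all(math.dist(cand, a) >= min_sep for a in atoms):
            atoms.append(cand)
    return atoms

def random_search(n_atoms=4, n_trials=20, box=2.5, min_sep=0.9, seed=0):
    """Relax many random starts; return (energy, structure) pairs, best first."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_trials):
        final, energy = relax(random_structure(n_atoms, box, min_sep, rng))
        results.append((energy, final))
    results.sort(key=lambda item: item[0])
    return results

if __name__ == "__main__":
    ranked = random_search(n_trials=10)
    print("lowest energy found:", round(ranked[0][0], 4))
```

For four Lennard-Jones atoms the lowest-energy arrangement is a regular tetrahedron with energy -6 in reduced units, so the toy search has a known answer to check against. Each trial is independent of the others, which is why thousands of them can run at once on a large machine.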

Even with approximations and constraints, is there a limit to how deeply and finely you can drill down into the atomic or quantum world?

If you just have a couple of atoms in a unit cell, which is the repeating unit in a crystal, maybe you don’t have to put too many constraints. But as you go to bigger systems, the number of possibilities genuinely grows exponentially quickly. This is what we call the exponential wall. It’s sitting out there. At some point there’s complexity we’ll probably never defeat—a large enough collection of atoms. The art in this field is using constraints that can allow you to climb that wall a bit further.

In your early research, the time required to process quantum mechanical calculations limited the questions you could answer. Yet it was during this time that you made one of your most impactful discoveries.

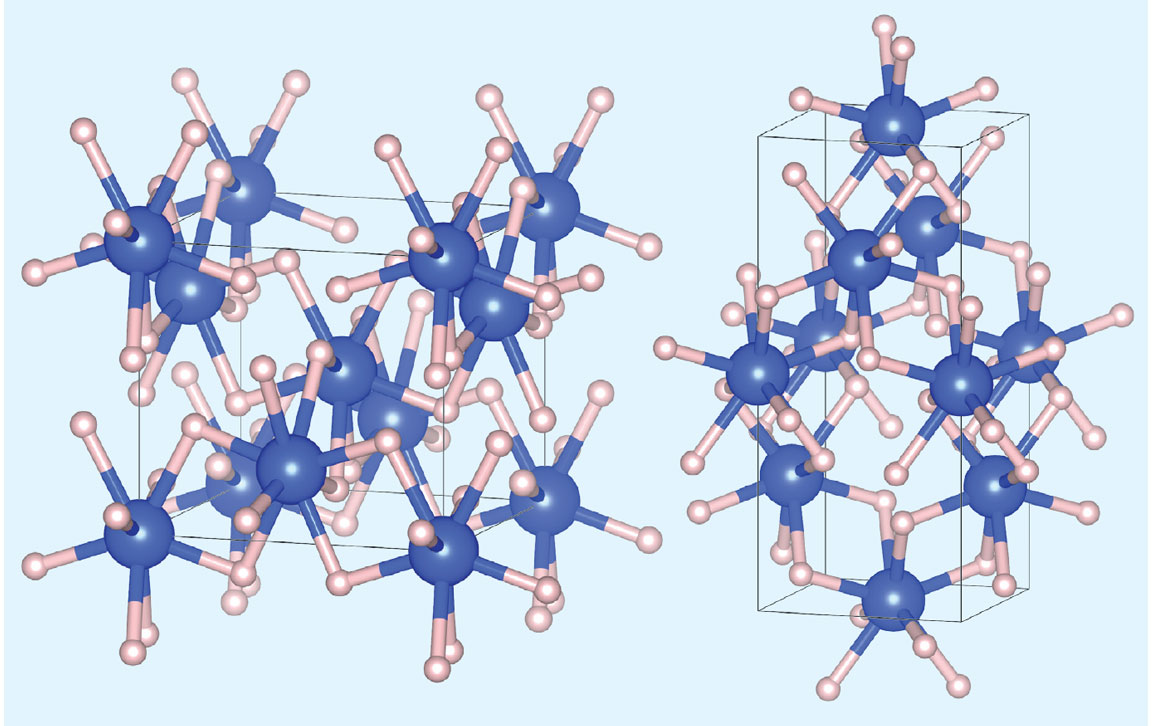

When I started doing postdoc structure prediction work, by chance, my long-term collaborator Richard Needs, another professor in the Cavendish Laboratory at [the University of] Cambridge, came into my office and saw me with all my crystal structures on the computer screen. He asked me, “What’s that? What are you doing?” The next day, he met me in the coffee area, and he said, “Can you do what you’ve been doing with carbon, but for hydrogen?” I said, “Well, that’s much easier. There are far fewer electrons.” I was very positive. Richard explained that there was this massive problem of understanding the phases of hydrogen, which is most of the universe, at high pressure. [In addition to physical phases like solid, liquid, and gas, hydrogen can enter exotic molecular phases under high pressure, exhibiting different crystalline, density, symmetry, and vibrational properties.] The reason is that the protons are extremely difficult to scatter x-rays from; you can hardly see them in a lot of experiments. Experimentalists could maybe see some spectroscopic signatures, but they had no idea where the atoms were. I started working on that with Richard. And that’s why I got into the high-pressure field; without his intervention, I’d have been doing low-pressure materials all along.

That’s probably the earliest and biggest hit we had. I published a paper on the phases of hydrogen at high pressure, where we mapped out all the different sequences. We showed that the phase III of hydrogen [a molecular phase, marked by dramatic spectroscopic changes, that occurs at high pressures found naturally in gas giants like Jupiter] matched the spectroscopic signatures. We identified some really curious phases, which were like sandwich layers, a bit like graphene, with hydrogen molecules in between and graphene again. People had never speculated that these phases of hydrogen might exist. And now, all these years later, the results in that paper, plus a few modifications by myself and others, basically define what we know about the structure of hydrogen at these high pressures.

That must have felt like a real validation.

When I got those structures, I can remember—I was at that point at the University of St Andrews in Scotland, my first academic position—the results, they popped off the computer. I remember seeing these new structures coming out, and I’d never seen anything like this before. I just had that feeling. That’s it. That’s what we’ve been looking for. Of course, you can’t carry on working then. I just went for a walk and sat in the sand dunes looking over the beach to digest the consequences of what we’d seen.

Did that open a whole new research area for you?

The early days of doing this structure prediction from first principles were extremely productive. Everything we tried, something new came out. It was like having a new telescope, looking down through the telescope, and seeing all these galaxies and so on. It’s not so easy now, because I think we picked off a lot of the simple problems. As time goes on, people ask more detailed questions. But that was a very exciting time, those few years.

Does hydrogen, which is almost on the scale at which quantum rules take over, present special challenges?

Absolutely. It’s on the edge. That’s what makes it really complex. The lightness of the proton means that you can’t entirely assume that the atom itself is behaving classically. Of course, the electrons are behaving quantum mechanically, but with hydrogen the quantum mechanics of the proton come into play as well.

Maybe I was overly optimistic or naive when I said to Richard, “Of course, hydrogen’s easy.” But for me, it was easy, because it only had one electron; it was a simple system. But the fact that it’s on the edge of being a quantum particle as well means it’s incredibly rich. Keep studying hydrogen, you keep finding new things.

Has machine learning affected your work and your field’s ability to tackle more complex structures?

There’s been a huge revolution in the last five years. Now, instead of doing all our calculations by solving the Schrödinger equation, we solve the Schrödinger equation for a set of example structures, and then we train a machine learning model or neural network to interpolate between those different configurations. When we calculate the energies of the atoms or move the atoms around, we can use the neural network to guess what the energies would have been from a quantum mechanical simulation. It might be 100,000 times faster. One of the really exciting applications in this area, not by me but by my colleagues, has been to explore the amorphous state [in which molecules are disordered and noncrystalline] for realistic materials. Model amorphous states have been studied for a very long time, but now, with this machine learning acceleration, we can do it for essentially any chemistry you might think of.
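Editor's illustration: the idea of computing reference energies for a set of examples and then training a model to interpolate between them can be shown on a deliberately tiny problem. This sketch makes several assumptions that are not in the interview. The "expensive" reference is a Lennard-Jones energy for two atoms rather than a solution of the Schrödinger equation, the only input is the distance between the atoms, and the model is a small kernel ridge regression swapped in for a neural network because it fits in plain Python. All names and parameter values are hypothetical.

```python
import math

def reference_energy(r):
    """ASSUMPTION: stand-in for an expensive quantum calculation.
    Lennard-Jones energy of two atoms at separation r (reduced units)."""
    inv6 = 1.0 / r ** 6
    return 4.0 * (inv6 * inv6 - inv6)

def gaussian_kernel(a, b, length_scale):
    """Similarity between two separations; close to 1 when they are alike."""
    return math.exp(-((a - b) ** 2) / (2.0 * length_scale ** 2))

def solve_linear(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def train_surrogate(train_r, train_e, length_scale=0.05, ridge=1e-6):
    """Fit kernel ridge regression; return a fast predict(r) function."""
    n = len(train_r)
    gram = [[gaussian_kernel(train_r[i], train_r[j], length_scale)
             + (ridge if i == j else 0.0) for j in range(n)] for i in range(n)]
    weights = solve_linear(gram, train_e)

    def predict(r):
        return sum(w * gaussian_kernel(r, ri, length_scale)
                   for w, ri in zip(weights, train_r))

    return predict

# "Expensive" reference calculations on a grid of example separations.
train_r = [1.0 + 0.025 * i for i in range(61)]
train_e = [reference_energy(r) for r in train_r]
surrogate = train_surrogate(train_r, train_e)

# Compare the surrogate with the reference at separations it never saw.
for r in (1.1375, 1.4875, 2.0125):
    print(f"r={r:.4f}  reference={reference_energy(r):+.4f}  "
          f"surrogate={surrogate(r):+.4f}")
```

The surrogate is only trustworthy between the examples it was trained on. Outside that range its predictions decay toward zero regardless of the physics, which is one small instance of the model uncertainties discussed later in the interview.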

Can you find the first principles within the machine learning results?

You can look at it in different ways. On the one hand, the beauty of doing things from first principles is, somewhat counterintuitively, it’s easy for people who are not experts to use. Because the method is rooted in the solid equations of reality, there aren’t too many parameters for users to fiddle around with. This was always the attraction. When I got into the field in the early '90s, I was part of the group of people who were reacting against empirical models—models based on parameters that people tuned. We were very proud that we were doing things from first principles. There were approximations, but within those approximations we could lock down those calculations. Now, of course, we’ve gone back to empiricism. Machine learning is an empirical approach: It’s statistically fitting functions with uncertainties. You don’t necessarily have to have done everything from first principles; you can adjust things to match with experiments.

I would say that this is something that we’ll start to grapple with. If someone does a quantum mechanical calculation in one lab and then someone else does it in another lab, if they agree on their parameters, they should get the same answer. But different people have different machine learning models, maybe trained on the same data or maybe on different data. They may not agree. That may be fine, but it means you’ll have to try and understand these uncertainties in the models.

I imagine people in fields with such potential to produce world-changing materials must be under considerable pressure. Has that been your experience?

It was something I was challenged on when I was applying for a fellowship based on this structure prediction. “All well and good, Chris, you can predict these crystal structures. But how are you going to make them?” I said, “Okay, I’m producing a map, where I’m telling you where the cities are, but I’m not giving you the roads between them.” The task is to have not just computational materials discovery, but computational materials discovery with a set of instructions as to how to make it as well. This is a challenge. In organic chemistry, there are a lot of skills in creating a lot of varieties of molecules. It’s more difficult in solid-state chemistry. I’m positive about the advances in machine learning and so on. Maybe we can do more, explore wider ranges of configurations, temperature conditions, pressure conditions, precursors, to maybe find a computational route to helping with the instructions.

What are the next materials you hope to tackle? What else is your research group working on right now?

There’s a range of projects that we’re looking at. I’ve had a long research project working on prediction, discovery, and then also experimental discovery of novel superconductors based on pure hydrogen or materials with high hydrogen content. We’re looking at the behavior of metals with maybe some exotic electronic structure effects. Also, we have a project that has been going for a few years where we’re using these techniques to try and discover, computationally, new materials for batteries—new cathode materials. Of course, that’s a difficult challenge. There are lots of very good materials for batteries already out there, and I wouldn’t claim that we’re going to make any big change to that.

What are your hopes for the future of your field?

It’s kind of a gold rush moment, where everyone can do things that no one’s done before. This breakthrough in machine learning is making a lot of things that I thought I would never do in my career suddenly move forward 10 or 20 years. It’s interesting that it’s happened at the same time as a lot of hype around AI. Of course, there’s that public excitement. But for us, it’s an optimization or an accelerant to an existing technology.

Even though I came from very much the first principles, ab-initio-or-bust kind of way, as time goes on and you get confronted by more realistic problems, you appreciate that this balanced approach is probably the most productive one. I like to say the ideal situation is to do the right amount of computing and the right amount of experiments to get to the answer that you’re looking for as quickly as possible. You don’t have to be a hero and do it only one way or the other. Working together moves things on faster.
