This Article From Issue

July-August 2023

Volume 111, Number 4

Page 248

More than a Glitch: Confronting Race, Gender, and Ability Bias in Tech. Meredith Broussard. 248 pp. The MIT Press, 2023. $26.95.

The biases caused by and perpetuated through algorithms are no glitch: They are consistent with our world as it exists now. In More than a Glitch: Confronting Race, Gender, and Ability Bias in Tech, Meredith Broussard offers a thorough exploration of how algorithmic systems encode and perpetuate injustice—and what we can do about it.

Broussard’s 2018 Artificial Unintelligence: How Computers Misunderstand the World demonstrated how we’ve been misled by tech enthusiasts and developers in their quest for artificial general intelligence (AGI). More than a Glitch is no less important to understanding where we are now with tech. Explaining the current state of algorithmic injustice, she offers strong calls to action, along with very concrete steps, to help us manage, mitigate, and recognize the biases built into algorithms of all types. A thread running through the book is the idea of technochauvinism, a “bias that considers computational solutions to be superior to all other kinds of solutions.” This bias insists that computers are neutral and that algorithms are fair. But this bias is its own type of ignorance, because what we build into algorithms captures and perpetuates existing social biases, all while being touted as “fairer” because of the assumed neutrality of algorithms.

Broussard defines glitch as “a temporary blip,” which is how tech boosters often explain away publicized instances of bias in tech: as one-offs that can be dealt with through individual fixes. For instance, the National Football League (NFL) famously used race in its algorithms to determine payouts for players with brain injuries, with Black players receiving payouts that were lower by thousands of dollars. The NFL’s individual fixes included eliminating data such as race, and paying penalties, although only after it was ordered to do so by a federal judge. But algorithmic biases are more than a temporary blip or something we can deal with through individual patches; simply fixing blip by blip is not a structural solution and won’t let us move quickly enough. Our biases are systemic and structural, and they are reflections of the world.

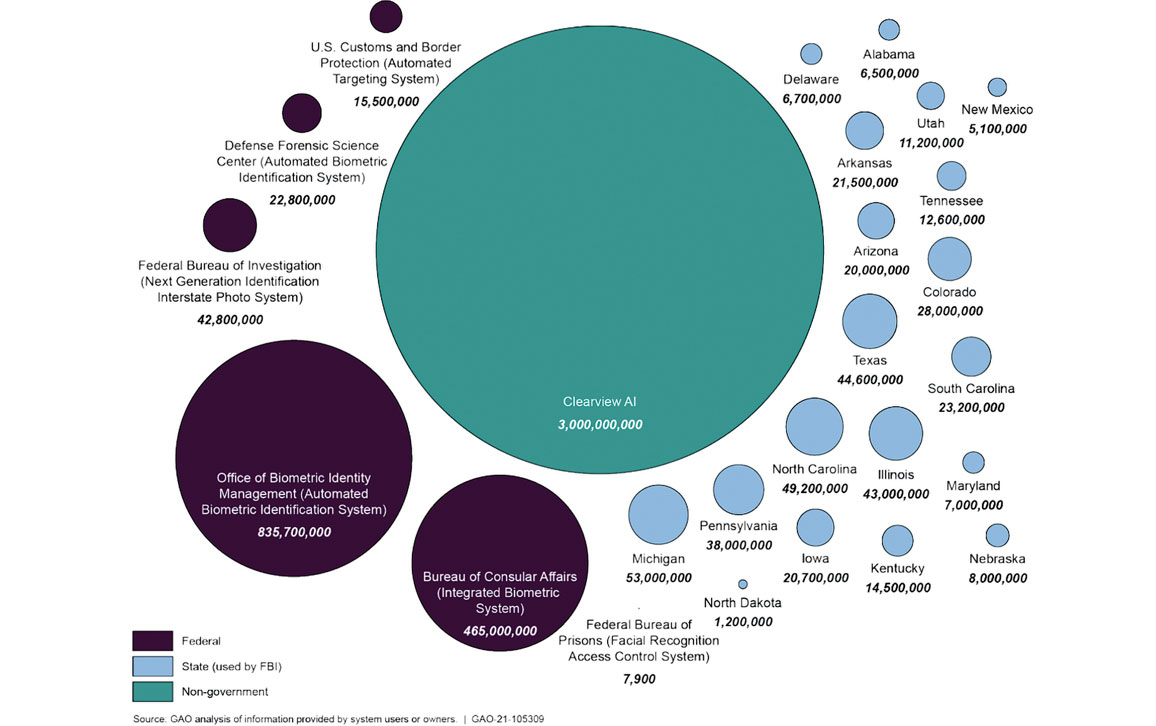

Figure from Government Accountability Office report GAO-21-105309

By the end of the book, Broussard offers clear ways to recognize and manage biases and work toward a more just world through public interest technology and the use of algorithmic audits. She highlights the work of groups already doing tech monitoring and reporting, along with many recent cases in which tech companies have failed us, and she suggests how we can add to these efforts through different types of algorithmic auditing and an emphasis on public interest technology. She calls on us to “hold algorithms and their creators accountable” to the public, and demonstrates ways we can do just that.

The book is divided into 11 chapters, each of which could be read on its own or in concert with other readings. Each chapter is grounded in many contemporary cases of biases built into algorithms that then perpetuate or cause discriminatory harm. Her journalistic approach makes this work come alive through accounts of not only the way algorithms work, but also of how the use of algorithms has caused real harm, including arrests, educational setbacks, administrative nightmares, and even death.

One compelling example from Chapter 3 is from the area of facial recognition and its use in policing. Broussard explains that facial recognition is far less effective than most people imagine, but police precincts continue to invest money in this technology and try to justify its use. And people’s lives are at stake. Broussard shares the story of Robert Williams, who received a call from the Detroit police asking him to come into a precinct, which he took for a crank call. They arrested him later in the day at his home, flashing an arrest warrant, to the confusion of Williams and his family. He was held for 18 hours without anyone telling him the details of why he had been arrested.

It turned out that Williams had been “identified” from a grainy image as the person who had stolen $3,800 in watches; the image had been fed into DataWorks Plus software sold to Michigan State Police. But the thief wasn’t him. The database used by DataWorks is huge, consisting of 8 million criminal photos and 32 million U.S. Department of Motor Vehicles (DMV) photos. The grainy image from the robbery, showing someone in a baseball cap, was matched by the algorithm with Robert Williams’s DMV photo and taken as truth by police: a clear example of unchecked technochauvinism.

Chapter 4 details the case of Robert McDaniel, a Chicago resident who was identified by predictive policing software as someone likely to be involved in a shooting (here, individual police departments aren’t specific about what software they use, but CompStat is one example; software giants such as Palantir, Clearview AI, and PredPol make predictive policing software products that are marketed to large police departments). The algorithm wasn’t specific about whether McDaniel was supposed to be a shooter or the victim, but the result of identifying McDaniel provoked police visits where they “wanted to talk” to him, social workers being brought in by police, and the police paying close attention to him. This attention was unwanted and dangerous, as he explained to police. He was shot two different times because people began to think he was a snitch or informant, due to the police attention. McDaniel continued to explain that they were putting him in danger, but the Chicago police saw their software as validated. Broussard explains that we’ve spent “taxpayer dollars on biased software that ends up making citizens’ lives worse” due to the belief that such technologies will make things safer and fairer (very often, they won’t). For the technologies offered in Chapters 3 and 4, the call to action is to move away from this type of technology development altogether.

But the book is about much more than policing. Chapter 5 explores algorithmic and predictive grading in schools. Algorithms, Broussard argues, can also fail at making predictions that are ethical or fair in education. She presents a number of cases of students harmed through the use of grading algorithms that factor in location and subsequently award lower grades to students in lower-income areas. During the early part of the COVID-19 pandemic, many aspects of life began happening online, including taking standardized tests such as AP, SAT, ACT, and IB exams. In the case of IB (International Baccalaureate) exams, in-person testing was not possible and an algorithm was created to give students grades based on algorithmic predictions. The IB used a standard practice in data science: It took all available data on students and their schools, fed it into a system, and constructed a model to output individual grade predictions. Broussard shares the case of Isabel Castañeda, a student hoping to earn credit for a high IB score in AP Spanish. Castañeda is fluent in English and Spanish, and has studied Korean for years. Her IB Spanish grade was a failing grade. Essentially, she was punished for where she lived and went to school, a school with very low success rates on IB exams. The use of such programs has real impacts in terms of individual costs, such as having to retake tests and college classes, as well as on the colleges to which one is accepted. Broussard urges communities and administrators to “resist the siren call” of these systems, however appealing they may be.
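
To make the mechanism concrete, here is a minimal sketch of how a school-level input can pull down an individual prediction. It is not the IB's actual model, which the review does not describe in detail; the `Student` fields, the `predict_grade` function, and the 0.6 weight are all invented for illustration, and the 1-to-7 scale is simply the range IB grades use.

```python
from dataclasses import dataclass


@dataclass
class Student:
    name: str
    teacher_predicted: float  # teacher's forecast on the 1-7 IB scale
    school_history: float     # school's past average grade (hypothetical feature)


def predict_grade(student: Student, school_weight: float = 0.6) -> int:
    """Blend the individual forecast with the school's history.

    The 0.6 weight is an invented value, chosen only to show the effect.
    """
    blended = ((1 - school_weight) * student.teacher_predicted
               + school_weight * student.school_history)
    return round(blended)


# Two students with identical individual forecasts at different schools.
strong_school = Student("A", teacher_predicted=6.0, school_history=5.5)
weak_school = Student("B", teacher_predicted=6.0, school_history=2.5)

grade_a = predict_grade(strong_school)
grade_b = predict_grade(weak_school)
print(grade_a, grade_b)
```

In this toy setup the two students have the same individual record, yet the one at the school with the weaker history receives a grade two points lower. The arithmetic is internally consistent, which is the sense in which such a model can be mathematically "fair" while still penalizing a student for where she went to school.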

Chapter 6 centers on disability design for blind students and the case of a Deaf tech employee. I found this the least compelling of the chapters, probably because this subject is within my domain of expertise. The call to action also seemed to be weaker than in other chapters; it concerned incorporating ethics into computer science, as well as the wins of design thinking (an approach to problem solving using a conceptual, user-centered approach), which are less lauded by some critics. Design thinking’s emphasis on empathy and ethnography can be a problem for disabled people, who are often seen by nondisabled people as pitiable, and designers would do better to talk to actual disabled people, which is something Broussard would recommend. She ends this chapter writing about the need to center BIPOC (Black, Indigenous, and People of Color) disabled people in these conversations, as those with multiple intersecting marginalized identities are often forgotten in tech conversations.

Broussard’s other chapters talk about the gender biases encoded into systems, medical diagnostics that use race, and the process of making algorithms. Broussard is best when drawing from her experiences in data journalism and from her own life to illuminate the cases she shares. For instance, in the Introduction she explains fairness through the lens of childhood negotiation and bargaining; and in Chapter 9, she shares her experience of having an AI scan at the end of her breast cancer treatment and then, later, running one of the many algorithms for breast cancer detection.

I almost skipped the chapter on cancer. As someone who has had cancer three times and gets scanned often, I sometimes skip things about cancer because it’s too much. But this chapter, Chapter 9, was my favorite. Rather than being about the experience of cancer or its treatment, it is about how one experiences technology, and also about trying to understand how relevant algorithmic technologies work in this context. In this chapter, Broussard shows the weird quirks of current tech, at one point buying a CD reader for her computer because her scans won’t download from her health portal in a readable way. She then tries out the algorithms for herself with her own scans, post-cancer treatment. The algorithm she tries (which she found out later was created by one of her neighbors) ends up with the “correct” result, confirming her breast cancer. However, the experience raises questions of access, compatibility, medical records systems, user interfaces, and the importance of human involvement in the process.

The penultimate chapter, Chapter 10, gives us a guide forward, describing algorithmic auditing as a powerful tool to help mitigate and manage currently unjust data systems. In the Introduction, she told us that:

Social fairness and mathematical fairness are different.

Computers can only calculate mathematical fairness.

This difference explains why we have so many problems when we try to use computers to judge and mediate social decisions.

In order to address social fairness—and Broussard believes everyone should—we need to be looking for the biases that exist in the tech we’ve created. She writes about her own work as a consultant in algorithmic auditing, and shares how this approach is not a one-size-fits-all approach, which is why we need auditing suited to the needs of different projects and technologies. She highlights different watchdog and data audit projects that are doing good work in addressing data justice: Data for Black Lives, AI for the People, the Stop LAPD Spying Coalition, and more.

She also highlights different regulatory changes and policies that are relevant to this work, including legislation proposed by the European Union in 2021 that would divide AI into high-risk and low-risk categories so that high-risk AI, and those using it, can be monitored and regulated. The proposal asks for a ban on facial recognition for surveillance, criminal justice algorithms, and test-taking software that uses facial recognition, which coincides nicely with the Ban the Scan proposal from Amnesty International.

The book is not a historically oriented work on bias in computing or in medicine. Nearly all of Broussard’s cases and material come from the past decade, if not the past few years. She draws from other work that speaks to similar themes, such as that of Mar Hicks, Safiya Noble, and Ruha Benjamin. What this book offers is an orienting view that is both broad and specific on algorithmic biases and informed solutions for how to grapple with where technology is right now.

Broussard’s action items emerge over each chapter and don’t converge on just one approach. We need to be thinking about a variety of approaches, including outright bans of and policymaking on some types of algorithms due to their potential for great harm, as well as a willingness to slow down or abandon the adoption of algorithms in educational contexts. Other approaches to addressing algorithmic bias involve automated auditing, as long as such programs are “updated, staffed, and tended.” Automated audits can use software packages such as AI Fairness 360 or platforms such as Parity and Aequitas, which have mitigation algorithms designed to de-bias; Broussard herself works with O’Neil Risk Consulting and Algorithmic Auditing (ORCAA) on a system called Pilot for automated auditing. ORCAA advocates for internal audits and has worked with Joy Buolamwini’s Algorithmic Justice League to address algorithm analysis with an intersectional framework, looking at different subgroups to assess performance and to identify where an algorithm can produce harm.
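
The idea of looking at different subgroups can be sketched in a few lines. What follows is an illustration only, not the method used by ORCAA, Pilot, or any of the packages named above: the `false_positive_rates` function, the record fields, and the placeholder group labels (`r1`, `r2`, `x`, `y`) are assumptions, and the data are made up to show the pattern.

```python
from collections import defaultdict


def false_positive_rates(records, keys):
    """False positive rate per subgroup, where a subgroup is the tuple of
    values a record has for the attributes named in `keys`."""
    false_pos = defaultdict(int)
    negatives = defaultdict(int)
    for rec in records:
        if rec["actual"] == 0:  # only truly negative cases can be false positives
            group = tuple(rec[k] for k in keys)
            negatives[group] += 1
            false_pos[group] += rec["predicted"]
    return {g: false_pos[g] / n for g, n in negatives.items()}


def make(race, gender, predictions):
    """Build invented records whose true label is 0 (no positives needed here)."""
    return [{"race": race, "gender": gender, "actual": 0, "predicted": p}
            for p in predictions]


# Made-up data: four truly negative cases in each of four subgroups.
records = (make("r1", "x", [1, 1, 0, 0]) + make("r1", "y", [1, 0, 0, 0])
           + make("r2", "x", [1, 0, 0, 0]) + make("r2", "y", [0, 0, 0, 0]))

by_race = false_positive_rates(records, ["race"])
by_both = false_positive_rates(records, ["race", "gender"])
print(by_race)
print(by_both)
```

In this invented data, the single-attribute view shows group `r1` wrongly flagged more often than `r2`, but only the two-attribute view shows that the `("r1", "x")` subgroup is wrongly flagged half the time, twice as often as either neighboring subgroup. That is the kind of harm an audit limited to one attribute at a time can miss.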

External audits are another approach; these are audits conducted by external agencies or watchdogs, as well as investigations triggered by whistleblowers. Exposing.ai, one watchdog group, lets you find out if your photos are being used to train facial recognition. Some external audit groups, such as The Markup, fact-check tech firms and demonstrate an important role for data journalists and investigative journalism in this area. Broussard also applauds Ruha Benjamin’s idea of tech discrimination as an “animating principle” that makes it possible to see where technology may harm people or violate their rights. In all of these approaches, we need work that is aware of unchecked algorithms generating unfairness and bias—and a wariness of technochauvinists who mistakenly think they’ve produced a neutral tool.

More than a Glitch speaks to a wide audience, while also nodding to important scholarship if readers seek more depth. Broussard keeps the book short for all that she covers, which makes it a great introduction and reference. Her description of technochauvinism is not presented as a theoretical worry, but as a very real problem, made clear in case after case she references without excess theory building; for that, she points to other scholarly literature in the field that people can read. Thus, More than a Glitch is a useful companion to other literature, and it is also useful by itself as an introductory text. The cases are true and startling, in a way that invites conversations and reflections that we need to be having.
