Gravitational Waves and the Effort to Detect Them

By Peter Shawhan

A worldwide network of detectors may soon measure subtle ripples in spacetime itself, ushering in a new era of astrophysical research

DOI: 10.1511/2004.48.350

Somewhere out in space, two black holes may be colliding at this very moment, entwining their powerful gravitational fields in a death spiral that will culminate in a cataclysmic merger.

Being black holes, they will emit no telltale x-ray burst, not even a flash of light, nothing at all to be seen by today’s powerful telescopes. But the energy released by this violent event will radiate into the cosmos as ripples in the geometry of space and time. An expanding group of investigators hopes eventually to detect the subtle gravitational echoes that reach the Earth from such dramatic astrophysical events. The signals are expected to be so minute that the apparatus needed to detect them must be capable of registering changes in length that are much smaller than the breadth of an atomic nucleus. Remarkably, this is now technologically feasible. Although substantial challenges remain in achieving the necessary sensitivities, the next few years should see the emergence of a worldwide network of instruments capable of measuring the gravitational radiation that astrophysicists are sure is out there waiting to be revealed.

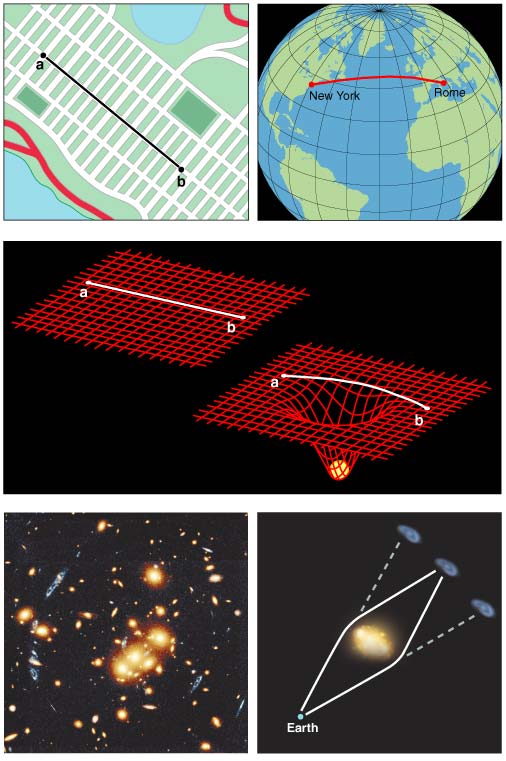

To understand how gravitational radiation arises and what sort of physical apparatus is needed to detect it requires at least a rudimentary understanding of Einstein's general theory of relativity. This theory posits that time is a dimension similar to the three dimensions of space and that the combined four-dimensional "spacetime" can be treated using the language of geometry.

The complete history of an object's position as a function of time is described by a "world line," which threads through the four-dimensional coordinate system, from past to present to future. If no force acts on the object, it will move with a constant velocity, and its world line will be a straight line at some fixed angle relative to the coordinate axes.

An object near a large mass feels the force of gravity accelerate it, so that its world line follows a curved path relative to the coordinate system. For example, if a ball is thrown straight up into the air, a graph of its height versus time traces out a parabola. At least, that is the conventional view, dating back to Newton. Einstein took the bold step of casting that notion aside and postulating that a massive body curves the coordinate system itself. Rather than following a curved path in a Cartesian coordinate system, the ball actually follows a "straight" path (a geodesic) in a curved coordinate system, returning to the thrower's hand at a later time because the geodesic leads it there. Gravity, therefore, is not really a force but is a manifestation of curvature in the geometry of spacetime.

The difference between these two points of view may sound like a matter of definition, but Einstein's theory made a few specific predictions that have since been experimentally verified. For example, the British astrophysicist Sir Arthur Eddington took advantage of a 1919 solar eclipse to measure the deflection of starlight passing near the Sun, finding it to be in agreement with theory. His result was trumpeted on the front pages of newspapers around the world, instantly establishing Einstein's popular reputation.

General relativity says that the geometrical curvature induced by a massive object does not arise everywhere instantaneously. Rather, it travels outward from its source at the speed of light. Thus, if a massive object alters its shape or orientation, or if a collection of objects changes its spatial arrangement, the gravitational effect—the curvature of spacetime—propagates away as a gravitational wave.

A gravitational wave may be described as a time-varying distortion of the geometry of space, temporarily altering the effective distance between any given pair of points. If the causative shift in mass is abrupt, the wave will take the form of a short pulse, much like the ripple produced after dropping a rock into a still pond. In the case of a periodic change, the wave will be sustained, much like the carrier wave for a broadcast radio signal. In either case, the amplitude of the wave will be inversely proportional to the distance from the source.

Unlike ordinary gravitational acceleration, which always points toward the source, a gravitational wave acts perpendicularly to the direction in which it is traveling, and thus is called a transverse wave. In this sense it is like light, rather than like sound, which propagates as longitudinal waves.

At any instant, a gravitational wave stretches space in one direction while shrinking it in the perpendicular direction. General relativity predicts two possible orientations for the stretching and shrinking, which physicists describe as two "polarization states." A given source may produce either one or a combination of both, depending on the particular motions of matter in directions transverse to the line of sight.

Any object encountered by a gravitational wave is stretched and shrunk along with the space in which it lives, and this is the basis for designing a detector. The key point is that the amplitude of a wave is described in physics terms by a strain, that is, a change in length per unit length. Thus, large objects will be affected more in an absolute sense than small objects.

Just what sorts of events generate gravitational waves? The short answer is big ones, where a lot of mass gets thrown around quickly. Indeed, the emission of gravitational radiation, being a relativistic effect, requires huge masses moving at velocities comparable to the speed of light. No man-made apparatus can produce gravitational waves large enough to be measured with any feasible instrument. The only possible sources are massive and energetic astrophysical systems. But not all such systems must give rise to gravitational waves. To generate the type of space distortion that can propagate away as a wave, the source must have an asymmetric distribution of matter that rapidly changes shape or orientation.

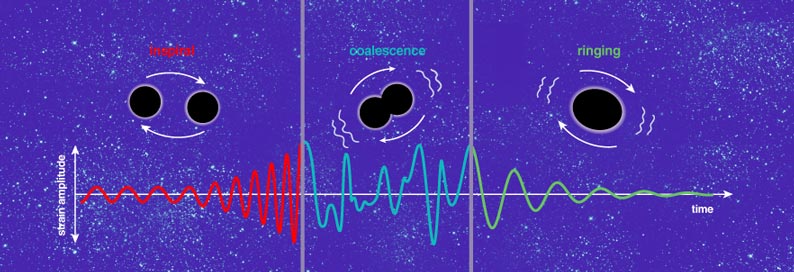

To better grasp these principles, consider a binary system in which two massive but spatially compact objects (neutron stars or black holes) orbit each other closely. Such a system emits gravitational waves at twice the orbital frequency. Why twice? Because from the perspective of the observer viewing it edge-on, the double star system changes from appearing comparatively small (with the two objects aligned along the observer's line of sight) to appearing "stretched" (with the masses located out to either side) at twice the orbital frequency.

The resultant gravitational waves carry away energy and angular momentum, causing the orbital distance and period to decrease. The shrinking orbit in turn causes the frequency of emitted gravitational waves to get higher and higher while their amplitude increases. The rate of orbital decay accelerates until the objects finally collide. Astrophysicists call this decay and merger an "inspiral."

Compact binary systems have received much attention as gravitational-wave sources in part because the waveform of their emissions can be accurately modeled, at least for neutron stars and black holes with masses up to a few times the mass of the Sun. Equally important is the fact that a handful of these systems are known to exist in our galactic neighborhood.

Indeed, Russell Hulse and Joseph Taylor, both now at Princeton, earned a Nobel Prize in 1993 for their discovery and observations of one such binary star system, called PSR B1913+16. That system consists of two neutron stars, one of which is a pulsar, meaning that it emits pulses of radio waves in our direction at extremely regular intervals. Each of the two stars has a mass of about 1.4 times the mass of the Sun. Careful tracking of the timing of the pulses, carried out over many years, has yielded the complete orbital parameters of the system with remarkable precision. In particular, the orbital period, currently about 8 hours, is decreasing by 77 microseconds per year, which will cause these two stars to merge in roughly 300 million years.

This observation agrees precisely with the predictions of general relativity for the orbital decay that ought to result as a consequence of the gravitational radiation from this system. A few other binary pulsars that will merge in a few billion years or less have since been identified, including the spectacular discovery last year of PSR J0737–3039A/B, a unique double pulsar system, which currently has an orbital period of 2.45 hours and will merge 85 million years from now.
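As a rough illustration of the twice-the-orbital-frequency rule, the short Python sketch below converts the two orbital periods quoted above into gravitational-wave frequencies. The function name is invented for this example, and the calculation treats the orbits as circular; a real eccentric orbit also radiates at other harmonics.

```python
SECONDS_PER_HOUR = 3600.0

def gw_frequency_hz(orbital_period_hours):
    """Dominant gravitational-wave frequency of a circular binary, in hertz."""
    orbital_frequency_hz = 1.0 / (orbital_period_hours * SECONDS_PER_HOUR)
    # The mass distribution repeats its shape twice per orbit,
    # so the wave frequency is twice the orbital frequency.
    return 2.0 * orbital_frequency_hz

# Orbital periods as quoted in the text (hours).
for name, period_hours in [("PSR B1913+16", 8.0), ("PSR J0737-3039A/B", 2.45)]:
    print(f"{name}: {gw_frequency_hz(period_hours):.2e} Hz")
```

Both results come out below a millihertz, which is why these systems, in their present state, lie far outside the band that kilometer-scale ground-based instruments can register.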

Given the knowledge of the decaying orbits of these binary pulsars, the existence of gravitational radiation is not seriously in doubt. Nevertheless, direct observations of gravitational waves have so far been lacking. It's easy to understand why: None of the detectors yet constructed for this purpose has sufficient sensitivity. But when such measurements are eventually made, they will help to address certain questions about the universe, including some that cannot be answered by any other means.

Astronomers hope that future measurements of gravitational waves will help to determine, for example, the abundance of binary neutron stars and other compact binary systems. Radio telescopes can detect such systems only if one of the objects is a pulsar. This limitation may explain why no observational information is yet available for binary systems containing a black hole and a neutron star, although such systems could be relatively abundant. Gravitational-wave astronomy should be able to detect these odd pairings as well as binary systems containing two black holes, something conventional astronomy would be hard-pressed to discern.

Gravitational-wave measurements should also help to determine what neutron-star material is really like. For example, if the crust of neutron stars is stiff enough to maintain shapes that are not axially symmetric, these objects will emit gravitational waves as they spin. Even if the crust is not that rigid, some astrophysicists postulate that rapidly spinning neutron stars must emit gravitational waves because they build up an instability that leads to bulk motion of the star material within.

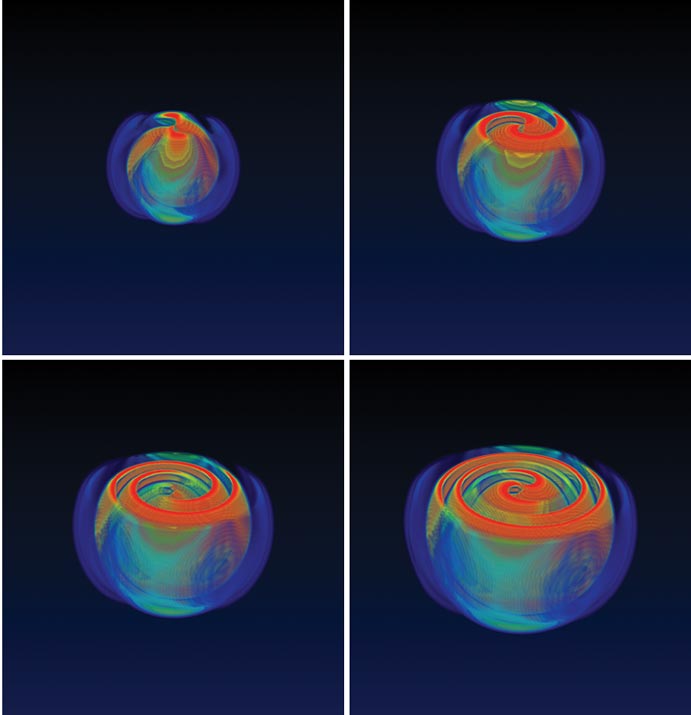

The observation of gravitational waves will also be useful for charting the motions of matter that drive supernova explosions. These cataclysms begin with the collapse of the core of a massive star, an event that releases an enormous amount of energy. Much is known about these explosions, but there is a long-standing mystery regarding how this energy can escape through the dense layers of star material. Although numerical simulations suggest that convection and rotation of the core may play a crucial role, faithfully modeling these motions remains an intractable computational problem. It's clear, however, that gravitational waves will be emitted during the explosion if and only if the explosion is asymmetric. Gravitational-wave observations thus offer the means to probe the engines driving these explosions in a way that complements conventional astronomy, which can only reveal the material blown away some hours after the initial burst.

From www.astronomynotes.com, courtesy of Nick Strobel.

More ambitiously yet, cosmologists hope that gravitational waves might help to reveal how mass moved in the first moments after the Big Bang. The gravitational waves emitted at that time continue to bathe the Earth with tiny geometrical fluctuations, analogous to the cosmic microwave background radiation, but arising from much earlier in the evolution of the universe, at a time when the cosmos remained opaque to electromagnetic waves.

Sensing gravitational waves should also show how mass and spacetime interact in extreme situations. The nonlinear equations of general relativity are expected to lead to complex behavior in such cases, with gravitational radiation dramatically affecting the evolution of astrophysical systems. These predictions may be tested by observations of high-mass, compact binaries, especially if one or both objects are spinning rapidly, and by observations of the strong and possibly chaotic waves given off when two massive objects merge.

Finally, physicists anticipate that this line of investigation will help to determine the true nature of gravity. General relativity, despite its spectacular success in various experimental tests, is not the only possible theory of gravity; others include so-called "scalar-tensor" theories, which, if valid, would have a direct influence on the nature of gravitational radiation. In particular, these theories predict that more than just two polarization states should be possible. Simultaneous observation of a reasonably strong source by multiple detectors would test these theories.

Although the scientific payoff promises to be enormous, direct detection of gravitational waves presents an extraordinary experimental challenge. The sources are expected to be either rare or intrinsically weak (or both). Consequently, the instruments must be very sensitive so that they can search a large volume of space in a reasonable amount of time. How sensitive? The amplitude of the strain expected from a typical gravitational wave reaching the Earth is about 10⁻²¹. That number is so tiny it is hard to fathom. It means that the distance between two objects separated, say, by the diameter of the Earth would shrink and stretch only by an amount equal to the size of an atomic nucleus! It would be easy to conclude that directly detecting gravitational waves is hopeless. But some physicists see this as a grand challenge.
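The arithmetic behind that comparison is simple enough to check. Because strain is a change in length per unit length, the absolute displacement is just the strain multiplied by the baseline. The sketch below assumes an Earth diameter of about 1.27 × 10⁷ meters (a round figure not taken from the article) and, for contrast, a 4-kilometer baseline; the function name is illustrative.

```python
def length_change_m(strain, baseline_m):
    """Absolute change in length produced by a given strain over a baseline."""
    return strain * baseline_m

STRAIN = 1e-21              # typical amplitude quoted in the text
EARTH_DIAMETER_M = 1.27e7   # approximate value, assumed for this example
ARM_LENGTH_M = 4000.0       # a 4-kilometer baseline for comparison

print(f"Earth diameter: {length_change_m(STRAIN, EARTH_DIAMETER_M):.2e} m")
print(f"4-km baseline:  {length_change_m(STRAIN, ARM_LENGTH_M):.2e} m")
```

The first figure is on the order of 10⁻¹⁴ meters, comparable to a large atomic nucleus. The second is several thousand times smaller still, which conveys the scale of the measurement problem for any instrument that fits on the ground.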

The late Joseph Weber, a physicist at the University of Maryland, made the first serious attempts to measure gravitational waves in the 1960s. Weber's detector consisted of a large cylinder of solid aluminum, which was hung horizontally by a single wire around its middle. A sensitive transducer was placed at one end to measure vibrations of the cylinder at its resonant frequency, which could be induced by a passing gravitational wave.

Weber built many such detectors and reported tantalizing evidence of them being excited in coincidence, but other investigators were never able to reproduce his results. Nevertheless, Weber's "bar" design has been refined over the years, and several such detectors are now running. Two operate at a fraction of a degree above absolute zero, a tactic used to minimize noise from internal thermal motions.

Image credit: Tom Dunne.

Bar detectors are highly sensitive only to a fairly narrow band of frequencies (near the resonant frequency of the bar), which limits the types of sources one can hope to detect with them. Therefore, in recent years the focus has shifted to interferometry, the use of light to measure precisely the distances between widely separated mirrors. Interferometers have the advantage of being sensitive to a comparatively broad range of frequencies.

Optical configurations differ somewhat, but all of these interferometers are variations on the basic design Albert A. Michelson used in 1881 and again six years later with the help of Edward Morley for the famous Michelson-Morley experiment. That test disproved the existence of the "ether," the ghostly medium that many 19th-century physicists believed must exist to account for the passage of light waves through space.

In outline, a gravitational-wave interferometer works as follows: A partially reflective mirror divides light from a laser into two beams, which then propagate along perpendicular arms of the device. Mirrors that are freely suspended at the ends of these arms reflect the two beams of light, returning them to a common point at the beam-splitting mirror. The output from this beam splitter depends on the relative phase of the waves in the two beams when they recombine, which in turn depends on how far each had to travel. Thus an interferometer can sensitively gauge the difference in length between the two arms down to a small fraction of the wavelength of light employed.
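To see why the output is so sensitive to arm length, consider an idealized Michelson interferometer with a lossless 50/50 beam splitter, set up so that the output port is dark when the arms are equal. The sketch below is a textbook simplification rather than a model of any real detector. The 1,064-nanometer wavelength is an assumption (the article does not state one), and the function name is illustrative.

```python
import math

WAVELENGTH_M = 1064e-9  # assumed infrared laser wavelength; not given in the article

def output_fraction(arm_length_difference_m, wavelength_m=WAVELENGTH_M):
    """Fraction of the input light reaching the output port of an ideal Michelson.

    Light traverses each arm twice, so an arm length difference dL gives a
    path difference of 2*dL and a relative phase of 2*pi*(2*dL)/wavelength.
    """
    relative_phase = 2.0 * math.pi * (2.0 * arm_length_difference_m) / wavelength_m
    return math.sin(relative_phase / 2.0) ** 2

# Sweep the arm length difference over a quarter of a wavelength.
for fraction_of_wavelength in (0.0, 1 / 16, 1 / 8, 1 / 4):
    delta_l = fraction_of_wavelength * WAVELENGTH_M
    print(f"dL = {fraction_of_wavelength:.4f} wavelengths -> "
          f"output fraction {output_fraction(delta_l):.3f}")
```

In this idealization, a shift of only a quarter wavelength takes the output from fully dark to fully bright, so a precise measurement of the output intensity resolves displacements far smaller than the wavelength itself.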

Such a measurement is perfectly matched to the character of the displacements a gravitational wave induces. The amplitude of the strain in each arm depends on the arrival direction and polarization of the gravitational wave. Although an interferometer cannot detect waves with certain directions and polarizations, it responds significantly to the majority of cases. In this sense, a gravitational-wave interferometer doesn't "look" in any one direction. Rather, it "listens" to the universe all around it.

Modern lasers, optics, photodetectors and control systems permit vastly more stable and precise measurements than were available to Michelson and Morley. After decades of planning and prototyping, it is finally feasible to build large-scale interferometers capable of detecting the kinds of signals that might reasonably be reaching the Earth. As a result, large interferometers for the detection of gravitational waves have been constructed in Europe, Japan and the United States.

LIGO, the Laser Interferometer Gravitational-Wave Observatory, represents the U.S. effort in the field. Funded by the National Science Foundation with a construction cost of about $300 million, it has taken more than a decade to complete and definitely constitutes "big science." The LIGO Laboratory (a joint endeavor of the California Institute of Technology and the Massachusetts Institute of Technology, which manage the project) has observatory facilities at the Department of Energy's Hanford Site in Washington state and at Livingston, Louisiana.

Both installations contain a central building complex connected to two slender enclosures, which run for 4 kilometers in perpendicular directions. These long extensions house the laser beams. The Hanford Observatory has two independent interferometers, one with 4-kilometer arms and the other with 2-kilometer arms, which run side by side. Taken together, the three LIGO interferometers provide a powerful consistency check: The signal from a gravitational wave should appear in both Hanford detectors at the same time and with the same strain amplitude (that is, with a factor of two difference in absolute length change), and in the Livingston detector within the maximum light travel time between the two sites, 10 milliseconds earlier or later.
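The consistency check described above can be sketched in a few lines of Python. This is only an illustration of the logic, with invented function names; an actual analysis would presumably also allow for timing and calibration uncertainties. The only inputs are the 10-millisecond figure and the arm lengths quoted in the text, plus the speed of light.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
MAX_DELAY_S = 0.010  # maximum light travel time between the sites, as quoted above

def implied_separation_km(max_delay_s=MAX_DELAY_S):
    """Straight-line distance that light covers in the maximum delay."""
    return SPEED_OF_LIGHT_M_PER_S * max_delay_s / 1000.0

def is_coincident(t_hanford_s, t_livingston_s, window_s=MAX_DELAY_S):
    """True if two arrival times are close enough to share one wave front."""
    return abs(t_hanford_s - t_livingston_s) <= window_s

def length_change_m(strain, arm_length_m):
    """Absolute arm length change for a given strain."""
    return strain * arm_length_m

print(f"Implied site separation: about {implied_separation_km():.0f} km")
print("4 ms apart:", is_coincident(100.000, 100.004))
print("20 ms apart:", is_coincident(100.000, 100.020))

# Same strain in both Hanford instruments, but twice the absolute
# displacement in the 4-kilometer arms as in the 2-kilometer arms.
ratio = length_change_m(1e-21, 4000.0) / length_change_m(1e-21, 2000.0)
print(f"Displacement ratio, 4-km to 2-km arms: {ratio:.1f}")
```

A candidate that fails either test, arriving too far apart in time or showing the wrong ratio of displacements in the two Hanford instruments, is unlikely to be a gravitational wave.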

LIGO adds some clever refinements to the basic Michelson design. One improvement is to have the photons bounce to and fro in the arms some 50 times (on average) before heading back toward the beam splitter. Michelson's interferometer also folded the light path in each of its arms (eight times), using multiple mirrors to offset each segment slightly. In LIGO, light bounces back and forth between two mirrors over the same path, eventually escaping the way it arrived, through one of the bounding mirrors, which is partially transparent.

Proper operation requires that the distance between these two mirrors be controlled to the nanometer level so that they constitute an optical cavity, held very near resonance (that is, so that some large, fixed number of light wavelengths are made to fit between them). This condition is maintained by measuring, among other things, the difference in arm lengths (as revealed by the intensity of the recombined light beam that arrives at the photodetector) and using this information to adjust electromagnets that push or pull on permanent magnets glued to the mirrors.

This feedback system is carefully designed to apply force to the mirrors only at low frequencies, where ground motion (seismic noise) and mechanical resonances are the worst. At higher frequencies, where the interferometer is well insulated from external disturbances, the mirrors are free to respond to any potential signal. Thus the difference in arm lengths is a very sensitive indicator of a gravitational wave at high frequencies. Even at somewhat lower frequencies, where the feedback system has some influence, gravitational waves can be sought by accounting for the feedback.

Each of the three LIGO interferometers uses a 10-watt laser, the output of which must be well stabilized. Although 10 watts isn't much, the intensity of the interfering light is made larger using another embellishment on Michelson's design: a partially reflective mirror between the laser and the beam splitter. So instead of coming back from the beam splitter toward the laser, light is made to "recycle" within the interferometer. This tactic is equivalent to boosting the power of the laser by a factor of 30.

To avoid the problems of scattered light, the various optical components are contained in vacuum chambers, which together run the full length of the arms, making this the world's largest ultrahigh-vacuum system. Its construction was contracted out to the Chicago Bridge and Iron Company, which fabricated the long chambers by joining many short cylindrical sections, each created by welding flat rolls of thin stainless steel into a spiral, just as cardboard is commonly formed into tubes.

Courtesy of Rusty Gautreaux, Aero-Data.

Although assembly of the vacuum system was tough enough, the more difficult task has been to isolate the mirrors from the ground and the laboratory environment, which shake considerably at frequencies below a few hertz. Wire "pendulum" suspensions holding the mirrors provide the first line of defense. In addition, the tables on which the suspended mirrors rest are supported by massive columns with damped springs, which further protect the mirrors from high-frequency vibrations.

All of this engineering allows the interferometers to be highly sensitive to gravitational waves between about 40 hertz and 2 kilohertz. At lower frequencies, ground motions feed into the system too strongly, despite the suppression afforded by the pendulum suspensions and vibration-dampening supports. At higher frequencies, the quantum nature of the laser beam (made of discrete photons, albeit a large number of them) limits the precision of the measurement. Increased laser power would reduce the problem of quantum noise, but ultimately the LIGO interferometers are not suited to measuring gravitational waves that stretch or shrink the arms much more rapidly than the time a photon typically remains in the optical cavity, which is roughly a millisecond for these interferometers.
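The millisecond figure follows from numbers given earlier in the article. The sketch below assumes that each of the roughly 50 "bounces" corresponds to one full round trip along a 4-kilometer arm; if a bounce instead means a single traversal, the result is halved. The function name is illustrative.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def storage_time_s(round_trips, arm_length_m):
    """Time a photon spends in an arm if it makes the given number of round trips."""
    path_length_m = round_trips * 2.0 * arm_length_m
    return path_length_m / SPEED_OF_LIGHT_M_PER_S

# About 50 round trips in a 4-kilometer arm, per the figures in the text.
tau = storage_time_s(50, 4000.0)
print(f"Approximate storage time: {tau * 1000.0:.2f} ms")
```

Under that assumption the light travels about 400 kilometers before leaving the cavity, which takes a little over a millisecond, in line with the rough figure quoted above.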

Scientific activities for this project, including analysis of the data collected by the interferometers, are the responsibility of the LIGO Scientific Collaboration, a group of more than 400 investigators from dozens of institutions around the world. The data obtained from LIGO consists of many "channels," each sensing a different part of the hardware and recording a continuous sequence of digitized values. One channel from each interferometer contains a measurement of the moment-to-moment difference in arm lengths, sampled at 16,384 hertz; this is where a gravitational wave would appear. Hundreds of auxiliary channels record diagnostic signals from the interferometer: feedback signals as well as data from sensors tracking various environmental conditions.

The goal of data analysis is to detect weak signals buried in noisy data. If the waveform of the signal is known, as for the inspiral of a binary neutron-star system or for the continuous-wave signal from a spinning asymmetric neutron star, my colleagues and I can use matched filtering to achieve optimal sensitivity; that is, we can look for correlations between the measurements and the presumed signal pattern. If the exact waveform of the signal is unknown, we must resort to more general techniques, such as a test for a short burst of anomalously large signal power in some frequency band. However, we have to be careful, because we expect that the detector will experience short-term glitches, the result of environmental disturbances or instrumental artifacts. So we will use the auxiliary channels to "veto" candidate events for which we can identify mundane causes.
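The idea of matched filtering can be shown with a toy example. The Python sketch below is not the collaboration's analysis code: it uses a made-up chirp of constant amplitude, simulated white noise and a plain time-domain correlation, and all names and parameter values are assumptions chosen for illustration. A real search would also have to account for the detector's frequency-dependent noise and for a whole family of possible waveforms.

```python
import math
import random

def chirp_template(n_samples, f_start=0.02, f_end=0.10):
    """Toy chirp whose frequency (in cycles per sample) rises linearly."""
    sweep_rate = (f_end - f_start) / n_samples
    return [math.sin(2.0 * math.pi * (f_start * i + 0.5 * sweep_rate * i * i))
            for i in range(n_samples)]

def matched_filter(data, template):
    """Correlate the template against the data at every possible offset."""
    n = len(template)
    return [sum(d * t for d, t in zip(data[k:k + n], template))
            for k in range(len(data) - n + 1)]

def simulate(seed=1, n_data=3000, n_template=1024, true_offset=1200, amplitude=0.5):
    """Hide a weak chirp in white noise and return (injected, recovered) offsets."""
    rng = random.Random(seed)
    template = chirp_template(n_template)
    data = [rng.gauss(0.0, 1.0) for _ in range(n_data)]  # unit-variance noise
    for i, t in enumerate(template):
        data[true_offset + i] += amplitude * t            # signal at half the noise level
    output = matched_filter(data, template)
    best_offset = max(range(len(output)), key=output.__getitem__)
    return true_offset, best_offset

true_offset, best_offset = simulate()
print(f"injected at sample {true_offset}, strongest correlation at sample {best_offset}")
```

Although the injected signal is smaller than the typical noise fluctuation at every individual sample, summing the product of data and template over a thousand samples lets the signal add up coherently while the noise largely averages away, so the correlation peaks at or very near the true arrival time.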

There is a growing spirit of cooperation between LIGO investigators and those working with the three other gravitational-wave interferometers: GEO600 (a British-German project that is commissioning a 600-meter instrument near Hannover), TAMA300 (a 300-meter interferometer that has been operating off and on for the past few years in Japan), and Virgo (a French-Italian interferometer with 3-kilometer arms, located near Pisa). Combining data from multiple interferometers makes it possible to perform further consistency checks and to test the fundamental properties of gravitational waves once a signal has been established. In the not-so-distant future, the worldwide network may grow to include additional large interferometers now being considered in Japan, China and Australia.

LIGO investigators conducted their first "science run" over a 17-day period in the summer of 2002 and followed that exercise with two more such sessions during 2003, each about two months long. The time in between has been spent tracking down noise sources and making various changes to improve performance. As a result, the sensitivities of the interferometers have steadily improved and are getting rather close to the target. We expect to reach design sensitivities next year, at which point we will begin collecting data more or less continuously. It is fitting that 2005 has been designated the World Year of Physics, with a particular emphasis on celebrating Einstein's revolutionary contributions to modern science.

Even after the LIGO interferometers begin operating at full sensitivity, there are no guarantees that we will find anything. Indeed, no one can say for sure when, or even if, gravitational waves will be directly detected with today's interferometers—after all, this is exploratory science! We can, however, make educated guesses for some of the potential sources. For instance, the rate of binary neutron-star inspirals that take place close enough to yield a detectable signal is probably less than one per decade, on average. Thus, we would have to get lucky for LIGO to register such an event anytime soon. For various other sources, we are more ignorant of how often they should crop up, and our goal for the near future is to be prepared for whatever surprises nature might offer.

Gravitational waves cannot remain hidden indefinitely. Scientists and engineers are already developing improved detector technologies to be used in future interferometers. A proposed upgrade, called Advanced LIGO, would take advantage of these developments and increase the sensitivities of the three interferometers by roughly an order of magnitude, allowing a thousand-fold increase in the volume of space that can be searched. If the upgrade is approved, we would be certain to detect binary inspirals at a decent rate and would be much more likely to identify other sources.
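The jump from a tenfold gain in sensitivity to a thousand-fold gain in volume follows from the fact, noted earlier, that wave amplitude is inversely proportional to distance from the source. A minimal sketch, assuming sources are spread evenly through ordinary Euclidean space (the function name is illustrative):

```python
def search_volume_gain(sensitivity_gain):
    """Factor by which the searchable volume grows for a given sensitivity gain.

    Amplitude falls off as 1/distance, so a detector that can register waves
    N times weaker can see the same source N times farther away. The volume
    of a sphere grows as the cube of its radius.
    """
    reach_gain = sensitivity_gain
    return reach_gain ** 3

print(search_volume_gain(10))  # an order of magnitude in sensitivity
```

For a factor of 10 in sensitivity, the function returns 1000, matching the figure quoted for Advanced LIGO.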

As a complement to ground-based detectors, gravitational waves can also be sought by precisely tracking the distance to an interplanetary spacecraft. Past experiments of this type have used a radio beacon beamed from Earth and retransmitted by the distant craft. Currently, the European Space Agency and NASA are collaborating to design the Laser Interferometer Space Antenna (LISA), a set of three spacecraft that will most likely be put into orbit around the Sun sometime in the next decade. This approach to gravitational-wave astronomy completely avoids the problems of ground motion and can accommodate arms millions of kilometers long, with laser beams propagating through the vacuum of space. LISA will scan a lower frequency band than LIGO can hope to cover and is targeting different sources. And unlike any of today's ground-based detectors, LISA will be sensitive enough to register gravitational waves from known sources (such as the recently discovered double pulsar), which will provide valuable calibration standards for it.

Direct detection of gravitational waves is fantastically difficult, but decades of patient groundwork have brought us close to making it a reality. We look forward to finally sensing these minute ripples in spacetime in the not too distant future, and learning what they can tell us about distant astrophysical objects and about the nature of gravity itself.
