Reasonable Versus Unreasonable Doubt

By David B. Allison, Gregory Pavela, Ivan Oransky

Although critiques of scientific findings can be used for misleading purposes, skepticism still plays a crucial role in producing robust research.

Doubt has been considered essential to science since long before the scientific method was established in the 17th century. But the idea that doubt is a virtue is not without its critics. Some people, perhaps focused on the potential for doubt to harm scientific and social progress, argue that doubt is a sin, and that all doubters are ignorant or malicious “deniers.” Whether doubt is more appropriately viewed as a sin or a virtue depends on the context. If we remind ourselves of the origins and value of each of these two views of doubt, and of the potential for both to be exploited, we will be in a better position to appropriately exercise and assess doubt in science.

The process by which doubt can evolve from being viewed as virtuous to being viewed as sinful has four steps: reasonable doubt; its illegitimate cooption; condemnation of its cooption; and cooption of its condemnation to attack those who express reasonable doubts. The first three steps are well recognized and accepted in the scientific community; however, the fourth step and its negative effects on healthy scientific dialogue and self-critique have been less acknowledged.

First, there is legitimate doubt. The value of doubt for the advancement of knowledge has been recognized (and simultaneously found to be abhorrent), at least by some thinkers, since the time of Socrates. The Greek philosopher valued doubt, but his fellow Athenians tried him and ultimately put him to death, in part because of his professed ignorance. Speaking to the jury, Socrates said he would rather possess the wisdom to know what he didn’t know than have some knowledge but be unaware of his own ignorance.

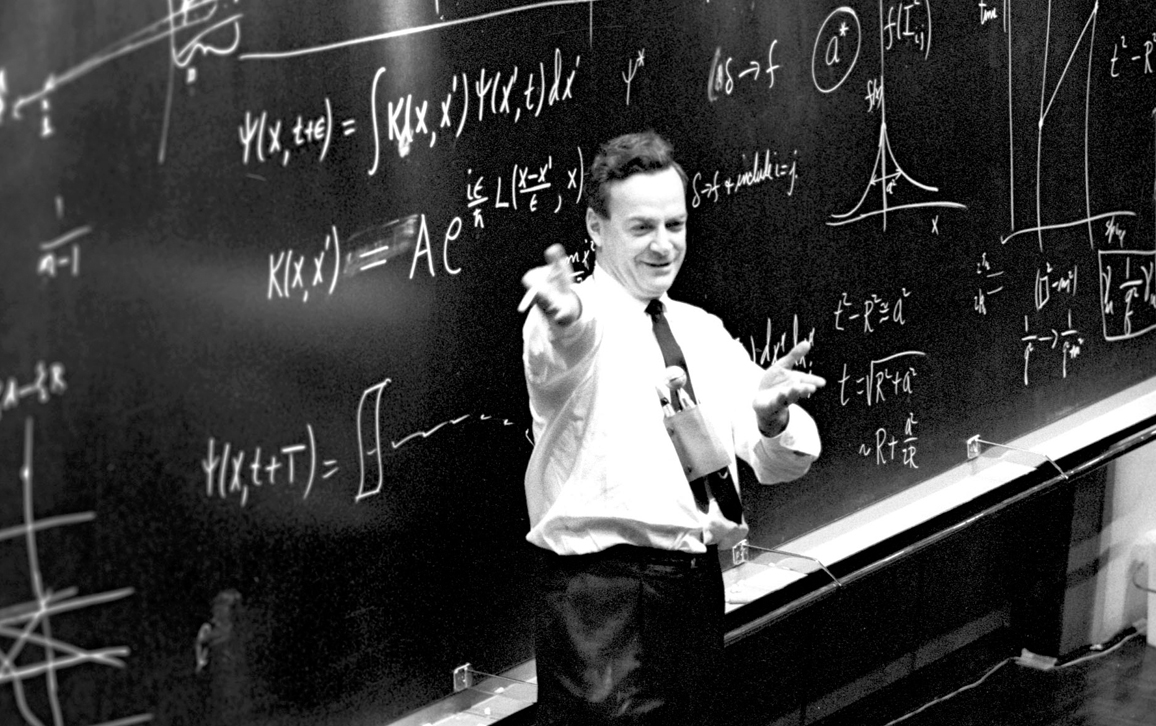

That sentiment has traveled throughout the ages and has been reaffirmed frequently by scientists and scholars. One of the best-known and most vigorous advocates for incorporating doubt into investigative thinking was Richard Feynman, who spoke passionately about inherent curiosity and the mystery of science: “I think that when we know that we actually do live in uncertainty, then we ought to admit it; it is of great value to realize that we do not know the answers to different questions,” he wrote. “This attitude of mind—this attitude of uncertainty—is vital to the scientist, and it is this attitude of mind which the student must first acquire. It becomes a habit of thought. Once acquired, one cannot retreat from it anymore.”

It was doubt about the rigor and probative value of others’ experiments that led Louis Pasteur to overturn the theory of spontaneous generation. Well-founded doubt in a framework of experimental rigor led Johns Hopkins University physicist Robert Wood to correctly debunk a purportedly new type of radiation, dubbed N-rays by French physicist Prosper-René Blondlot in 1903. More recently, well-founded doubt unmasked the corrupt nature of the evidence purportedly suggesting that vaccines caused autism. It also led to the withdrawal of thalidomide from the market (one might argue that greater doubt might have prevented its approval in the first place), and it has inspired many other efforts to increase the rigor and reproducibility of research.

Then there is the second step, the illegitimate cooption of doubt. Although doubt is widely recognized as fundamental to the scientific method, it can also be exploited inappropriately. Notably, the guise of healthy scientific skepticism can be used to question claims that are beyond reasonable doubt, such as the following: The Earth is closer to 4.5 billion than to 6,000 years old. Species evolve by Darwinian selection. Smoking tobacco causally increases the risk of lung cancer. Our planet is, on average, getting warmer at least in part because of human action.

Harvard University historian of science Naomi Oreskes and California Institute of Technology historian of science and technology Erik Conway wrote about such illegitimate cooption of doubt in Merchants of Doubt: How a Handful of Scientists Obscured the Truth on Issues from Tobacco Smoke to Global Warming, illustrating the dangers of misrepresented and exaggerated scientific uncertainty. Doubt, they argue, is vulnerable to exploitation. The truth of that statement is well established. However, the authors’ argument is easily and mistakenly extended to the claim that all doubt is antithetical to scientific and social progress. That stance is easily misused as a rhetorical tactic to dismiss critical scientists whose goal is better science, not nihilistic doubt.

Indeed, Oreskes and Conway describe the essential uncertainty of science:

Doubt-mongering also works because we think science is about facts—cold, hard, definite facts. If someone tells us that things are uncertain, we think that means that the science is muddled. This is a mistake. There are always uncertainties in any live science, because science is a process of discovery…

Doubt is crucial to science—in the version we call curiosity or healthy skepticism, it drives science forward—but it also makes science vulnerable to misrepresentation.

The third step in the evolution of doubt is the legitimate condemnation of the illegitimate cooption of doubt. When doubt is fomented about propositions that are beyond any reasonable doubt, authors who condemn the resulting “manufactured doubt” perform a service to the scientific community and society at large. In these instances, doubt is seen as consisting solely of disingenuous expressions of skepticism, motivated by financial or other nonscientific interests, which are allowed to pervert scientific interests. However, the common strategies of treating doubt as sin and using ad hominem language—referring to doubters as “deniers,” “shills,” “fringe” persons, and the like—are perhaps ill-advised in the long run, even though they may be effective rhetorical tools.

Finally, the cycle comes full circle, with some individuals illegitimately coopting the rhetoric used to condemn manufactured doubt, and using it instead to condemn reasonable doubt. Indeed, the existence and acceptance of doubt as sin and use of the accompanying rhetorical tools have paved the way for a second and illegitimate coopting of an otherwise legitimate argument. The same tools used to discredit disingenuous expressions of doubt can be used against those who express well-supported doubt. Those with particular political views may declare some doubt to be unreasonable, even if it is actually quite reasonable.

In this cooption, the declaration that the doubt is unreasonable is again accompanied by the rhetoric of doubt as “sin,” and of doubters as “deniers.” Loose comparisons with tobacco companies have become so common that the tactic has earned its own sobriquet: reductio ad tabacum. In some ways, it is scientists’ version of Godwin’s law, which states that as debate on an internet forum grinds on, the chance that someone will compare an opponent to Hitler approaches 100 percent. And this kind of creeping doubt has the danger of discouraging scientists from checking on the reproducibility of their results.

Scientific skepticism ought to extend to one’s own work. Sometimes, this self-regulation may lead to one of the most painful outcomes for a scientist: retraction. For instance, Pamela Ronald is a plant pathologist and geneticist at the University of California, Davis, who works on genetically modified organisms (GMOs), and has long been under attack by anti-GMO groups. GMO researchers and anti-GMO activists have long argued back and forth about the appropriate role of doubt regarding questions of GMO safety. In the midst of this exchange, Ronald discovered that the results of a few studies her lab had carried out in 2009 and 2011 could not be replicated, and realized that her lab had erred. The error was traced back to the mislabeling of two bacterial strains. Ronald corrected the scientific record in 2013, and how she did so is a model of “doing the right thing.” She added a note to the now-retracted paper many months before it was retracted, rather than wait for what can be a very drawn-out process, and she even reached out to at least one scientist who had written about the paper to let him know about the issues. She then wrote extensively about the entire process. Finding the error did not change her overall view on the benefits of GMOs. Her goal was to weed out erroneous results. Nonetheless, she was vilified by those opposed to GMOs.

Willingness to extend skepticism to one’s own published work and to acknowledge mistakes ought to be championed. But retractions can be weaponized. Rather than being recognized as a possible outcome for any scientist, given human fallibility, they are used as evidence that an entire body of work or area of research is deeply flawed. Thus, for Ronald, doubt as a virtue with consequences for one’s own work has been coopted by those who oppose not just her work but her entire field of science. The resulting attacks could be a disincentive for other researchers to be similarly forthcoming.

Although some may weaponize retractions to unfairly cast doubt on an entire body of work, there are examples of more constructive dialogue between individuals with opposing views. Michael Pollan, a professor at the University of California, Berkeley, Graduate School of Journalism, who is not a defender of GMOs, invited Ronald to speak in 2014 to an audience of 700 students and to make her case for the safety and usefulness of GMOs. Members of the press who attended the debate reported that it was contentious, but mostly courteous.

How can we condemn the promulgation of unreasonable doubts while also condemning the use of doubt-as-sin rhetoric to dismiss scientists who express reasonable doubts that do not accord with the advocates’ expressions of certainty?

The key lies in the phrases reasonable doubts and unreasonable doubts. We must resist the urge to use ad hominem arguments and instead focus on the scientific evidence. If the evidence is as multifaceted, reproducible, and consistent as it is, for example, in the case of Darwinism, then we would expect that showing the evidence should be enough. That said, whether it is enough is open to question. Other tactics beyond merely presenting the evidence may help scientists make their points more effectively, but if these points are extrascientific, does their introduction by scientists undermine the true basis for the epistemic authority of scientists qua scientists?

When citizens and scientists fail to heed facts and well-reproduced studies, we may find ourselves thinking that unreasonable doubt is tantamount to denialism. Even then, though, we should resist the use of rhetorical devices that dismiss doubt as sinful and rely on extrascientific reasoning, regardless of how effective such tactics may be. Indeed, recent research by James Weatherall at the University of California, Irvine, and his colleagues suggests that ad hominem arguments can have as much influence on patterns of thought as do arguments that are critical of empirical data.

Other fallacious arguments may be even more effective. For example, comedian John Oliver may have meant well on his television show Last Week Tonight in 2014 when he had 97 people march on stage in white lab coats to represent the 97 percent of scientists who have concluded that global warming is occurring and that human action contributes to it. His punchline, that he couldn’t hear the three dissenting scientists on stage over the 97 others, was meant to debunk the false appearance of one-to-one, even debate between the two bodies of evidence. But in doing so, he switched from evidence-based argument to argumentum ad verecundiam or argumentum ad populum. The reliance on these tactics invites the use of similar nonscientific reasoning in situations where the evidence in doubt is not as multifaceted, reproducible, and consistent as it is for a subject such as climate change or evolution. Such tactics make for entertaining television, but they are not a useful template for scientific discourse.

At the same time, the presentation of facts alone may not be enough to change minds, and the study of humans’ behavior when they are confronted with evidence challenging their beliefs is an active area of research. Public health specialist Sara E. Gorman and Mount Sinai School of Medicine psychiatrist and neuroscientist Jack M. Gorman suggest that individuals are physiologically rewarded when they filter out contradictory information and focus on information that confirms existing beliefs. An article reviewing the Gormans’ book, Denying to the Grave, along with the research of others is pessimistically subtitled “Why reason and evidence won’t change our minds.”

Fortunately, not all research on human cognition leads to such pessimistic conclusions. Concerns about the “backfire effect”—the theory that when we are presented with facts that contradict our ideology, our mistaken beliefs are strengthened—may be overblown. A 2016 study by Thomas Wood of Ohio State University and Ethan Porter of George Washington University did not find evidence of a backfire effect, concluding more optimistically that “By and large, citizens heed factual information, even when such information challenges their partisan and ideological commitments.” Other tactics that may be effective include factual arguments that are framed to emphasize similar goals across opposing sides of an issue.

Inspiration for the use of thoughtful dialogue about contentious issues can be taken from the following observation by 19th-century French polymath Henri Poincaré: “To doubt everything and to believe everything are two equally convenient solutions; each saves us from thinking.” If we want to live in a world governed by reason, and for others to attend to science because scientists say that their statements are based on reason, then scientists must rely on reason, and reason must be encouraged to include a healthy amount of self-examination. We must especially rely on reason to resolve inevitable disagreements—inevitable because, as Oreskes and Conway observe, “There are always uncertainties in any live science, because science is a process of discovery.” We might need to explore additional ways of getting across reasonable arguments, but reason must always be at the core.

Even if nonscientific rhetorical devices lead to short-term gains in silencing the merchants of doubt, such advantages may be outweighed by the long-term disadvantages of normalizing ad hominem attacks and other tactics as part of the scientific discourse. Utilitarianism would advise against the use by scientists of persuasive tactics other than those that are evidence-based.

There truly are people—some of them in positions of authority—who are promoting disingenuous and unreasonable expressions of doubt. However, if we slip and rely on nonscientific rhetorical devices to argue against them, then we invite others to use these rhetorical devices to dismiss cases in which scientific doubt is reasonable and even essential. As scientists and scholars, we need to rise above that, stick to the science, and never give up the virtue of doubt.
