This Article From Issue

July-August 2008

Volume 96, Number 4

Page 334

DOI: 10.1511/2008.73.334

Digital Dice: Computational Solutions to Practical Probability Problems. Paul J. Nahin. xiv + 263 pp. Princeton Univertsity Press, 2008. $27.95

The Monty Hall affair was a sobering episode for probabilists. In 1990 Marilyn vos Savant, a columnist for Parade magazine, discussed a puzzle based on the television game show "Let's Make a Deal," hosted by Monty Hall. The problem went roughly like this: A prize is hidden behind one of three doors. You choose a door, then Monty Hall (who knows where the prize is) opens one of the other doors, revealing that the prize is not there. Now you have the option of keeping your original choice or switching to the third door. Vos Savant advised that switching doors doubles your chance of winning. Thousands of her readers disagreed, among them quite a few mathematicians.

How can we settle such a controversy? Both sides can offer plausible-sounding arguments to support their positions. One faction reasons that any door is as likely as another to hide the prize, and so switching can never change the probabilities. The other side points out that if your chance of being right initially was 1/3, then your chance of being wrong was 2/3; after one of the unchosen doors is eliminated, the full 2/3 probability that your initial choice was wrong is unchanged. That leaves only a 1/3 probability that the other remaining door is the wrong choice, and you can take advantage of this by switching your bet to it.

At least one of these explanations must be faulty—but which one?

Paul J. Nahin's new book advocates an empirical approach to resolving such quandaries. When reason fails you, try the experiment! I've just done so, writing a short computer program to simulate the Monty Hall game. In a series of 100,000 trials, a contestant who always switched doors won the prize 66,841 times; in a second series of the same length, a contestant who never switched won 33,329 times. Thus the evidence seems to support vos Savant.

Digital Dice is an invitation to learn and apply techniques like these. It is Nahin's second book on the same theme; Duelling Idiots and Other Probability Puzzlers was published in 2000. Nahin is also the author of half a dozen other books on popular mathematics. In the new collection, 21 problems are set forth in chapters of two or three pages each. Readers are strongly encouraged to write some programs and get some answers before turning to the book's longer second section, where Nahin presents his own solutions, coded in MATLAB. Here are distillations of some sample problems:

The N runners in a marathon are issued sequential numbers from 1 through N . During the race you note the numbers of 10 randomly selected competitors; the largest of these numbers is 450. Can you estimate N ?

When you're waiting for an elevator, what are the odds that the first car to arrive will be going the wrong way? How does this probability vary depending on which floor you're waiting at?

Popes are elected by a two-thirds vote of the college of cardinals, where the cardinals are both electors and candidates. If each cardinal casts a random vote for one member of the college (possibly voting for himself), how many ballots will be needed before this haphazard process yields a pope?

The longest and most elaborate treatment in Digital Dice goes to a pair of classic problems, which turn out to be closely related: the probability that wandering neutrons will trigger a nuclear chain reaction, and the probability that a family name will become extinct after the passage of some generations. The neutron problem inspired Stanislaw Ulam and others to perform some of the first probabilistic computer simulations during the Manhattan Project; they resorted to computational methods because the chain-reaction calculation was just too messy for an analytic solution. The technique they devised, known as the Monte Carlo method, is the basis of most of the programs discussed in Digital Dice.

The study of family names is eerily similar to this physics problem, merely substituting offspring who propagate a name for the neutrons that sustain a chain reaction. But the surname problem was solved in 1845 by Irénée-Jules Bienaymé and then in 1874 by Francis Galton and Henry Watson. Those savants had no computing machinery to assist them: Score one for the traditional pencil-and-paper methods of probability theory.

The tension between the traditional methods and computer-intensive simulations lurks just beneath the surface in much of this book. For me the issue came into sharp focus as I was working through another of the 21 problems: Parrondo's paradox, which comes from game theory. Here is Nahin's introduction to the subject:

Imagine that we have two games, called A and B , both based on flipping coins. Game A is quite easy to understand: you flip a so-called biased coin, i.e., a coin with unequal probabilities of showing heads and tails. Let's say that if the coin shows heads (with probability 1/2 – ε, ε > 0), you win one dollar, otherwise you lose one dollar. It takes no great mathematical insight to appreciate that, on average, game A is a losing game. . . .

Our other game is game B , with slightly more complicated rules: now you have two biased coins. If, at the time just before you select one of the coins to flip, your capital M is a multiple of three dollars, you will choose coin 1, which shows heads with probability 1/10 – ε. Otherwise you choose coin 2, which shows heads with probability 3/4 – ε. . . . It is not as obvious as it is with game A , but game B is a losing game, too. . . .

Suppose now that you do the following: during a long sequence of games you randomly switch back and forth between playing loser game A and playing loser game B . It would seem obvious to most people, I think, that your capital M will definitely decline as you play.

The result is not the obvious one, of course—otherwise, they wouldn't call it a paradox. Randomly alternating between the two losing games turns out to be a winning strategy. On average, your capital slowly increases (provided ε is not too large). This curious phenomenon was discovered about a decade ago by the Spanish physicist Juan Parrondo. Nahin suggests writing a Monte Carlo simulation to explore the behavior of the game. I did so, and I learned something from the experiment, but I'm not sure that the simulation alone is enough to bring real understanding.

Brian Hayes

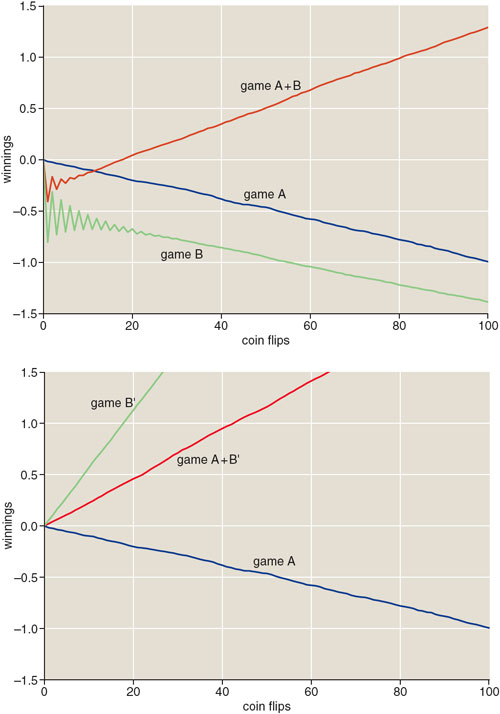

The upper graph in the illustration above shows the result of a Parrondo simulation. As promised, games A and B are both losers. A player who switches randomly between A and B sustains an initial loss but gets back to the breakeven point after 15 or 20 coin tosses and thereafter gains steadily. Seeing the A + B player's fortunes rise as the program runs certainly tends to dispel any doubts about the reality of the Parrondo effect. On the other hand, the simulation by itself really didn't help me figure out why two losers make a winner in this game.

When I turned to Nahin's solutions chapter, I was still frustrated. He gives a careful line-by-line explanation of his MATLAB code, but he offers only a reference to the original literature for those who want to delve deeper into the conceptual roots of the paradox.

What's most disappointing about being left in the lurch this way is that the computational approach Nahin favors can indeed produce insight; you just have to poke at the problem a little harder. In this case, one good starting point is the curious rule for choosing which coin to flip in game B . We toss the unfavorable coin 1 whenever the current fortune is divisible by 3.

At first, this rule looks like a mere randomizing mechanism: As the player's fortune fluctuates over the course of a long game, you might expect the amount to be divisible by 3 roughly a third of the time. This suggests an experiment: Instead of choosing coin 1 whenever the capital is divisible by 3, choose between the coins at random, with coin 1 having a probability of 1/3. The result of this innocent-seeming change is shown in the lower graph in the illustration above. The modified game B (call it B ' ) is a big winner! Game A + B ' is also a winner, but that's no longer paradoxical; game A + B ' is simply the average of game A and game B ' .

Having run this experiment, I began to suspect that the real mystery in Parrondo's paradox is not how A + B turns two losers into a winner, but rather how the winning game B ' degenerates into the losing game B . It seems the divisible-by-3 rule is not just a way of choosing a coin with probability 1/3. A bit of further computation shows that in game B the frequency of dollar amounts divisible by 3 is not 33 percent (as it would be in the random case) but almost 39 percent. Interactions between successive plays lead to this excess and make the game a loser. In game A + B , interleaved episodes of game A disrupt the interactions and reduce the bias to about 35 percent, allowing the player to eke out a small gain.

The idea of using computer experiments to answer questions like these is hardly new, but it's exciting to see the answers tumble out. Still, the aim is not just to get an answer, or a number; it's to gain understanding and insight. Suppose a series of computer runs yields probability estimates that come out 0.495, 0.492, 0.501, . . . . Eventually you may feel justified in concluding that the real probability is 1/2, but I don't think there's much satisfaction to be had until you understand why it's 1/2. Sometimes computation can help here, too, but more often what's needed is careful reasoning about mechanisms. Although Nahin goes through the steps of such reasoning for some of the 21 problems, more analysis of this kind would be welcome.

Another area that Nahin might have covered more thoroughly is the trustworthiness of simulation methods. If you can't trust yourself to correctly analyze probabilities in the Monty Hall problem, can you trust yourself to write a bug-free program? If a simulation disagrees with an analytic calculation, which should you believe? If they agree, is it safe to assume they are both right? Nahin's readers will have to wrestle with these issues on their own.

But if Digital Dice is not quite a complete guide for the aspiring computational probabilist, it's a very good start. The problems are accessible but still realistic enough to be engaging, and the solutions in the back of the book will get you through any sticky spots. Writing your own versions of a few of these programs will acquaint you with a useful approach to problem solving and a novel style of thinking. It might even improve your chances on "Let's Make a Deal."

American Scientist Comments and Discussion

To discuss our articles or comment on them, please share them and tag American Scientist on social media platforms. Here are links to our profiles on Twitter, Facebook, and LinkedIn.

If we re-share your post, we will moderate comments/discussion following our comments policy.