Piles of PCs

By Mike May

Clusters of PCs compete with the world's fastest machines


DOI: 10.1511/1998.21.0

If you tend to pull for the underdog, the so-called Beowulf class of computers will probably appeal to you. Named after the hero who defeated one giant foe after another in the Old English epic, Beowulf computers face off against the world's fastest machines, the supercomputers. They consist, sometimes literally, of piles of personal computers connected through a network. Perhaps surprisingly, some of them can crank out more than one billion operations each second.

A few years ago, investigators at NASA wanted more computing power for less money. Many projects in space science, including visualization and simulation of galaxies, require enormous numbers of calculations. Thomas Sterling, a principal scientist in the high-performance computing systems group at NASA's Jet Propulsion Laboratory, thought a so-called gigaflop computer—one capable of a billion floating-point operations per second—could be built for less than $50,000 by using off-the-shelf components. Today, many research groups are implementing that idea. In essence, the resulting machines break a computing problem into pieces that can be distributed to the individual personal computers, so that many steps can be processed simultaneously.
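The idea of splitting one problem across many PCs can be sketched in a few lines of Python. This is a minimal illustration, not code from any Beowulf project: the task (estimating pi by numerically integrating 4/(1+x²) from 0 to 1), the function names, and the node count are all hypothetical choices. Each node gets a contiguous slice of the work and returns a partial sum. The loop in `cluster_pi` stands in for the network; on a real cluster each slice would be sent to a separate machine and the partial results gathered afterward.

```python
import math

def split_range(n, num_nodes):
    """Divide indices 0..n-1 into num_nodes contiguous, nearly equal chunks."""
    base, extra = divmod(n, num_nodes)
    chunks = []
    start = 0
    for node in range(num_nodes):
        # The first `extra` nodes take one additional index each.
        size = base + (1 if node < extra else 0)
        chunks.append((start, start + size))
        start += size
    return chunks

def node_work(start, stop, n):
    """One node's share: midpoint-rule partial sum of 4/(1+x^2) on [0, 1]."""
    h = 1.0 / n
    total = 0.0
    for i in range(start, stop):
        x = (i + 0.5) * h
        total += 4.0 / (1.0 + x * x)
    return total * h

def cluster_pi(n, num_nodes):
    # Hypothetical stand-in for a cluster: each (start, stop) chunk could be
    # shipped to a separate PC; here the chunks are evaluated one after
    # another and the partial results are simply added together.
    partials = [node_work(a, b, n) for a, b in split_range(n, num_nodes)]
    return sum(partials)

if __name__ == "__main__":
    print(cluster_pi(1_000_000, 16), math.pi)
```

Because the slices are independent, doubling the number of nodes halves the work each node must do, and the only communication is handing out slice boundaries and collecting one number per node. Problems with that shape are the easiest ones to run on a pile of PCs.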

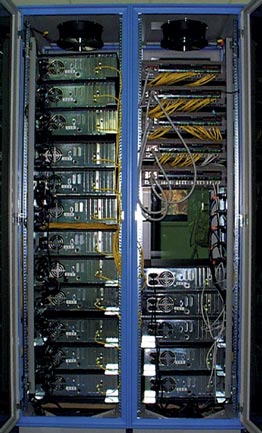

In today's elegant computer world, some Beowulf-class computers may seem clunky. In fact, some of these machines look more like odds and ends in a used-computer store than a high-performance, low-cost machine. For example, Forrest Hoffman of Oak Ridge National Laboratory says, "Our Beowulf cluster has 42 nodes.... The machines are 486 and 586 [central processing units] with 20 megabytes of memory and about 400 megabytes of disk space. While we don't quote remarkable benchmark speeds, we built this machine out of surplus equipment at no cost. And we are adding more nodes every week."

With the widespread use of local-area networks, or LANs, most of us can at least imagine connecting such a conglomeration of hardware. But how do you make it run like one machine? Simply loading Windows NT won't do the trick, at least not yet. Fortunately, a free operating system called Linux can be used along with a variety of widely available tools, including ones for constructing application programs that can be distributed among many machines. In addition, Don Becker of NASA Goddard Space Flight Center developed networking software that is used in virtually all Beowulf-class computers.


Of course, Beowulf builders can simplify their task even further by sticking with uniform hardware. For instance, Drexel University's Beowulf-style computer consists of 17 Pentium Pro processors running at 150 megahertz, all connected by two Fast Ethernet links. Although this cluster has not been clocked officially, its builders think that it probably reaches about 300 megaflops, or 300 million floating-point operations per second. Nevertheless, Michel Vallieres, head of the Department of Physics and Atmospheric Science at Drexel, says that a pile of PCs "is a concept which will grow significantly in university settings, where the utmost reliability and ease of use is not necessary." In fact, Beowulf-class computers can be cantankerous. Anyone who has managed a conventional network knows that problems can appear unexpectedly and remain elusive, even when no one is trying to make the machines work together on a single problem.

The first Beowulf-class computers that achieved the gigaflops goal appeared at Supercomputing '96 in Pittsburgh. One of those came from a collaboration between Caltech and the Jet Propulsion Laboratory and the other from Los Alamos National Laboratory. Both systems consisted of 16 Pentium Pro processors running at 200 megahertz and were built for about $50,000 in the fall of 1996. One year later, the same machines could be built for about $30,000. To see just how fast these computers could run, the investigators put them to work calculating the gravitational interactions between nearly 10 million bodies in space, and both machines topped 1 gigaflops in the exercise. When the investigators hooked those two machines together, so they worked as one, they reached 2.19 gigaflops. In a paper for the 1997 supercomputing meeting, known simply as SC97, Michael Warren of Los Alamos and his colleagues wrote: "We have no particular desire to build and maintain our own computer hardware. If we could buy a better system for the money, we would be using it instead." Of course, faster systems exist. The Los Alamos Thinking Machines CM-5 ran the 10-million-body simulation at more than 14 gigaflops, and Caltech's Intel Paragon reached 13.7 gigaflops, but these machines come with price tags in the millions of dollars.
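To give a sense of how a gravity calculation divides among the PCs in a cluster, here is a minimal direct-summation sketch in Python. The gravitational constant, the softening length `eps`, and the function names are hypothetical choices. It is not meant to reproduce the benchmark runs described above: direct summation needs on the order of N² pair interactions, far too many for 10 million bodies, so large simulations rely on faster approximate methods such as tree codes. The sketch does show the decomposition, though: each hypothetical node computes accelerations only for the bodies assigned to it, yet it needs every body's position to do so.

```python
import math

def accelerations_for(indices, pos, mass, G=1.0, eps=1e-3):
    """Direct-sum gravitational acceleration on each body in `indices`
    due to every other body. The softening `eps` keeps close encounters
    from producing near-infinite forces."""
    acc = {}
    for i in indices:
        xi, yi, zi = pos[i]
        ax = ay = az = 0.0
        for j, (xj, yj, zj) in enumerate(pos):
            if j == i:
                continue
            dx, dy, dz = xj - xi, yj - yi, zj - zi
            r2 = dx * dx + dy * dy + dz * dz + eps * eps
            s = G * mass[j] / (r2 * math.sqrt(r2))  # G m_j / r^3
            ax += s * dx
            ay += s * dy
            az += s * dz
        acc[i] = (ax, ay, az)
    return acc

def cluster_accelerations(pos, mass, num_nodes, G=1.0, eps=1e-3):
    """Hypothetical cluster: node k handles bodies k, k + num_nodes, ...
    Every node reads the full `pos` list, i.e. all positions must be
    broadcast to all nodes before each force calculation."""
    n = len(pos)
    result = {}
    for node in range(num_nodes):
        mine = range(node, n, num_nodes)
        result.update(accelerations_for(mine, pos, mass, G, eps))
    return [result[i] for i in range(n)]

if __name__ == "__main__":
    print(cluster_accelerations([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
                                [1.0, 1.0], num_nodes=2, eps=0.0))
```

The shared position list is where the network matters: after each time step, every node must receive the updated positions of all the bodies before it can begin the next round of force calculations.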

Another Caltech Beowulf, composed of 88 Pentium Pro processors running at 200 megahertz, actually bested a supercomputer in a rendering contest. In a process called photorealistic rendering, a computer simulates the patterns of light in a three-dimensional space and then draws an image of it. For example, a problem may require simulating how light from fluorescent bulbs reflects and scatters around tables and chairs in a classroom or how sunlight shines through a stained-glass window. In a series of photorealistic-rendering challenges, this 88-processor Beowulf outperformed the Cornell Theory Center's IBM SP2 by 10 to 20 percent. Moreover, this Beowulf could be built for about five percent of the price of the IBM supercomputer.
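Rendering divides naturally among processors because each pixel's brightness can be computed independently of the others. The Python sketch below is a drastically simplified, hypothetical stand-in for photorealistic rendering: it computes only the direct light from a single point source falling on a flat floor (inverse-square falloff times Lambert's cosine factor), with no reflection or scattering. The image size, light position, and power are arbitrary assumptions. What it illustrates is the work split: each hypothetical node renders every `num_nodes`-th row, and the rows are assembled into one image.

```python
import math

WIDTH, HEIGHT = 64, 48
LIGHT = (32.0, 24.0, 10.0)  # hypothetical point light: (x, y, height above floor)
POWER = 1000.0              # hypothetical source strength

def shade_row(y):
    """Direct illumination on one row of a flat floor (z = 0) from one point
    light, using the inverse-square law and Lambert's cosine factor."""
    lx, ly, h = LIGHT
    row = []
    for x in range(WIDTH):
        dx, dy = x + 0.5 - lx, y + 0.5 - ly  # offset from pixel center to light
        d2 = dx * dx + dy * dy + h * h
        cos_theta = h / math.sqrt(d2)        # angle between floor normal and light
        row.append(POWER * cos_theta / d2)
    return row

def render(num_nodes):
    image = [None] * HEIGHT
    for node in range(num_nodes):
        # Interleaved rows: node k renders rows k, k + num_nodes, ...
        # On a real cluster each node would send its rows back over the network.
        for y in range(node, HEIGHT, num_nodes):
            image[y] = shade_row(y)
    return image

if __name__ == "__main__":
    img = render(8)
    print(max(max(r) for r in img))
```

Interleaving rows, rather than giving each node one contiguous band, is one simple way to balance the load when some parts of a scene are far more expensive to compute than others, since every node then receives a mix of easy and hard rows.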

Sterling says, "There is clearly going to be an explosive growth of Beowulf-class computing across the country and around the world. It is happening now. The reasons are many, but accessibility and low cost are two of the drivers." Alan Heirich, a research scientist at Tandem Laboratories (a Compaq subsidiary), adds, "The interesting challenges will be to scale them to larger configurations, to increase the reliability and make them compatible not only with Unix environments but also with the large and inexpensive base of applications that is available under Microsoft NT."

Despite the accomplishments of these piles of PCs, don't expect to see even your most hardware-savvy neighbor building one any time soon. Although you might slay dragon-size problems with these conglomerations of chips, only a handful of dragon slayers would take on this technological challenge.—Mike May
