From American Scientist, March-April 2007, Volume 95, Number 2, Page 171

DOI: 10.1511/2007.64.171

Useless Arithmetic: Why Environmental Scientists Can't Predict the Future. Orrin H. Pilkey and Linda Pilkey-Jarvis. xvi + 230 pp. Columbia University Press, 2006. $29.50.

What happens when an immature and incomplete science meets a societal demand for information and direction? The spectacle is not pretty, as we learn from Useless Arithmetic, a new book that describes a long list of incompetent and sometimes mindless uses of fragmentary scientific ideas in the realm of public policy. The troubling anecdotes that authors Orrin H. Pilkey and Linda Pilkey-Jarvis provide cross diverse fields, including fisheries management, nuclear-waste disposal, beach erosion, climate change, ore mining, seed dispersal and disease control. Their extended examples of the misuse of science are both convincing and depressing. The book is a welcome antidote to the blind use of supposedly quantitative models, which may well represent the best one can do, but which are not yet capable of producing useful information.

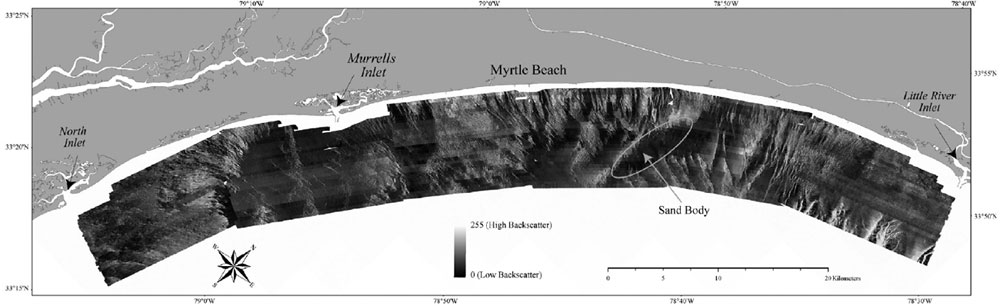

From Useless Arithmetic (which credits Jane Denny and the U.S. Geological Survey for this image).

Unfortunately, the impression of the issues one gets from the book is sometimes misleading. The authors' target is "mathematical modeling" as practiced in science. Examples abound of theories being applied grossly beyond the limits of their demonstrable usefulness, leading to absurd results or producing "answers" to questions that are themselves absurd (What will be the hydrological cycle in Yucca Flat one million years in the future?).

But are fisheries management and nuclear-waste disposal scientific problems? The authors' examples are not really problems of science but of the application of science to a practical end (a definition of engineering); politics, economics, the legal system and even psychology are involved. When science is not ready to answer specific questions, but the political universe insists that policies must be put in place (How large a catch can the fishery sustain? Is malaria in Africa a greater problem than HIV? How rapidly will this beach erode?), the outcome is almost inevitable: Someone will rush forward claiming that the answer is at hand, and the political system, driven to cope with a public threat or desire, will likely implement some insupportable policy. When the science is incomplete, one enters the world of P. T. Barnum, medical nostrums and the carnival.

This story is a very old and very complicated one. The authors do a good, readable job of explaining the large variety of assumptions that go into the quantitative description of numerous complex physical systems. But the stories do confound science with its applications. Poor Lord Kelvin is yet again raked over the coals for having calculated the apparent age of the Earth without having accounted for natural radioactive decay (then unknown). The anecdote is offered up as though it represented a serious scientific failure. It is, instead, a nice example of successful science: The best physics of the day produced an estimate of the age of the Earth that clearly contradicted the ever-more-convincing estimates from geology and evolutionary biology. Although the model itself was, with hindsight, incomplete, lacking not only radioactive decay but also interior convection (as Philip England and colleagues have noted in the January 2007 issue of GSA Today), the assumptions and details of the calculation were there for all to see, understand and debate—and still are, more than 100 years later. Presenting this story in the context of the book's attempt to demonstrate mathematics being used to bring about bad public policy is not very helpful. Building a geothermal power plant on the basis of Kelvin's model would have been poor engineering, but as science, his calculation cannot be faulted.
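To make concrete how open to inspection a calculation of this kind is, here is a minimal sketch of the standard textbook form of the conductive-cooling argument. It is not a reconstruction of Kelvin's actual computation. It assumes the Earth is a uniform half-space that starts at a single temperature and cools by conduction alone, in which case the age follows from the present surface temperature gradient as t = T0^2 / (pi * kappa * G^2). The function name and all three input values are illustrative assumptions; none come from the book or from Kelvin's paper.

```python
import math

SECONDS_PER_YEAR = 3.156e7


def conductive_cooling_age_years(initial_temp_k, surface_gradient_k_per_m,
                                 diffusivity_m2_per_s):
    """Age of a conductively cooling half-space, in years.

    For a half-space initially at uniform temperature T0 (measured relative
    to a fixed surface temperature), the surface gradient after time t is
        G = T0 / sqrt(pi * kappa * t)
    so the elapsed time is
        t = T0**2 / (pi * kappa * G**2).
    Radioactive heating and interior convection are ignored.
    """
    age_seconds = initial_temp_k ** 2 / (
        math.pi * diffusivity_m2_per_s * surface_gradient_k_per_m ** 2
    )
    return age_seconds / SECONDS_PER_YEAR


# Illustrative inputs only (assumed, not Kelvin's own figures):
# initial temperature 2000 K, gradient 25 K per km, diffusivity 1e-6 m^2/s.
age = conductive_cooling_age_years(2000.0, 0.025, 1e-6)
print(f"Estimated age: {age / 1e6:.0f} million years")
```

With these placeholder inputs the estimate comes out at tens of millions of years. Because the age scales as the inverse square of the gradient, doubling the assumed gradient cuts the answer by a factor of four, so every assumption is visible and open to challenge.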

What is new in the mix is the availability of complicated computer models. Before cheap, large, fast computers existed, "mathematical modeling" was indistinguishable from the construction of mathematical "theories" describing particular phenomena. Calculations were commonly done by scientists who had a grounding in differential and partial differential equations—a grounding that was often based on fluid dynamics, electromagnetic theory, Schrödinger's equation and the like. Those scientists (like their counterparts today) were familiar with a wide range of approximate and asymptotic methods. Lord Kelvin himself is a good example.

With modern computers, it is now possible for a graduate student or a practicing engineer to acquire a very complex computer code, hundreds of thousands of lines long, worked over by several preceding generations of scientists, with a complexity so great that no single individual actually understands either the underlying physical principles or the behavior of the computer code—or the degree to which it actually represents the phenomenon of interest. These codes are accompanied by manuals explaining how to set them up and how to run them, often with a very long list of "default" parameters. Sometimes they represent the coupling of two or more submodels, each of which appears well understood, but whose interaction can lead to completely unexpected behavior (as when a simple pendulum is hung on the end of another simple pendulum). One hundred years in the future, who will be able to reconstruct the assumptions and details of these calculations?

Pilkey and Pilkey-Jarvis could have done more to help the nonscientist reader distinguish bad computer models from bad science. In the right hands, the crudest of models leads to deep insights (for example, Kepler's elliptical orbits). Few nonscientists seem to understand how science is done, its ambiguities and its use of consensus—a word that has come, remarkably, to be pejorative in some lay usage, whereas scientists recognize that almost all of science is an evolving working consensus.

The book does, very sensibly, advocate the use of order-of-magnitude estimates, basic principles, constant testing against observations and qualitative judgment. Any good scientist or engineer, using a complex model, would attempt to compare in detail her order-of-magnitude estimates with the model result and with whatever actual observations are available. Weather-forecast models are at least as complicated as any described. These models produce very useful forecasts out to about 10 days because several generations of meteorologists have been able to make detailed comparisons between the models and observations, hour to hour and day by day. The time horizon is short, and the economic and other consequences of failed forecasts are apparent to all. After 50 years of numerical weather forecasting, a great deal has been accomplished.

When a model is used to predict an outcome decades to thousands of years in the future, however, testing analogous to what weather forecasters do becomes impossible. One can attempt instead to replicate previous time-histories, subject as they are to incomplete and poorly understood observations often made under conditions very different from those predicted for the future; moreover, doing so raises complicated issues of statistical independence. Such models then call for the most sophisticated of users, who can separate the reliable elements from the likely flawed ones. If an amateur using a chain saw manages to damage himself or someone's property, one does not condemn the saw; rather, one might express the need for users of such power tools to have proper training and to understand where and when those implements should be employed.

Bad science can be done with simple models as well. The authors, who gleefully point at others' published errors, themselves fall into the trap of quoting mistaken concepts and ideas in which no computer model is used at all (for example, the false alarm of the failure of the oceanic wind-driven circulation). An annoying feature of the book is the unreferenced employment of provocative statements from outsiders, either as straw men to be knocked down or as support for the authors' point of view.

An analogy may be made to the plight faced by astronomers, who ultimately managed to distinguish themselves, at least among other scientists, from astrologers. Astrologers provided welcome predictions of what was going to transpire in human lives. Are models of fisheries, beach erosion, climate and the like analogues of astrology, or of astronomy—or perhaps of something of both? Astrology led to astronomy. What will come next in large-scale computer simulations?

Politicians must make and implement public policy even when science cannot provide truly skillful forecasts. Society has to make decisions in the face of major uncertainty about the outcome. More discussion of that necessity and how to cope with it would have been welcome in this book. As it is, the authors usefully raise the alarm about the misuse of poorly understood models and the illusion that because those models are complex, they must be meaningful. In the wrong hands, the best of models can be grossly misleading. To find many examples of that, read this book.
