Medical Imaging in Increasing Dimensions

By Ge Wang

Combining technologies and moving into virtual space makes seeing into the body more accurate and beneficial.


In May 2022, I had a dizzy spell and went to Albany Medical Center. Worried that I might be having a stroke, my care team ordered computed tomography (CT) and magnetic resonance imaging (MRI) scans. Both are needed to determine whether a patient should receive thrombolytic therapy to destroy blood clots (if brain vessel blockage is shown by CT) or other interventions to save neurologic functions (as evaluated by MRI). I had the CT scan first, which took only a few seconds, but I had to wait until the next day for the MRI scan, which took more than 20 minutes.

Fortunately, both scans showed healthy brain vasculature and tissues. I was lucky that my case wasn’t a real emergency, or my results might have come too late to be useful. As a researcher who has been working on medical imaging for decades, I was especially reassured by seeing my CT and MRI results. These scans are the eyes of modern medicine, noninvasively peeking into the human body and rapidly making a patient virtually transparent to reveal subtle features in a region of interest.

Current imaging exams are built on a deep foundation of development to make them the reliable medical workhorses we know them to be today. But as my personal experience demonstrated, there’s still a lot of room for developing better and faster imaging techniques to help patients. Over the past three decades, my colleagues and I have been working to make those advances happen, by adding new dimensions to the ways we view the body.

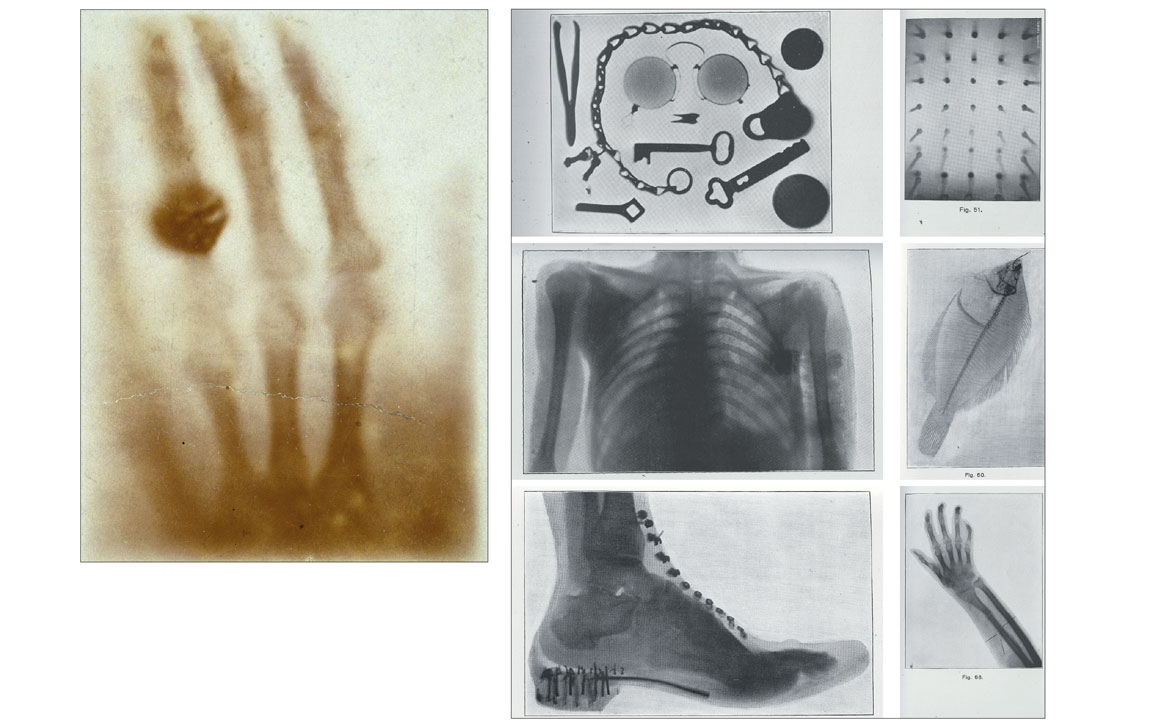

Modern CT scans are the culmination of a series of imaging technologies that began with the 1895 discovery of x-rays by Prussian physicist Wilhelm Röntgen. In 1901, he won a Nobel Prize for this discovery, which soon revolutionized medical diagnoses.

Barbara Aulicino; medical scans from Wikimedia Commons: deradrian; Mikael Häggström, M.D.; Photojack50; Soichi Oka, Kenji Ono, Kenta Kajiyam, and Katsuma Yoshimatsu

Although visible light cannot penetrate our bodies, an x-ray beam can pass through us easily. During the x-ray imaging process, an x-ray beam’s intensity is attenuated, or dampened, by the material elements it meets along its path. The degree of attenuation depends on the type of material, such as bones (more attenuating) or soft tissues (less attenuating). An x-ray image is produced from many x-rays that emanate from a single source and illuminate an x-ray sensitive film, screen, or detector array. Much like the way that pixels together compose an image on a cell phone screen, the collection of detected x-ray attenuation signatures combine to form a picture of the body, rendering bright, white skeletons (low exposure) against dark, murky masses of tissue (higher exposure).
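The attenuation described above is commonly modeled with the Beer-Lambert law, in which the surviving fraction of the beam is exp(-Σ μ·d) over the materials along the ray. The following is a minimal sketch of that idea; the attenuation coefficients and thicknesses are round, hypothetical numbers chosen only for illustration, not measured values.

```python
import math

def transmitted_fraction(segments):
    """Fraction of x-ray intensity that survives a straight path.

    segments: list of (mu_per_cm, thickness_cm) pairs, one per material
    the ray crosses. Beer-Lambert law: I / I0 = exp(-sum(mu * d)).
    """
    total = sum(mu * d for mu, d in segments)
    return math.exp(-total)

# Hypothetical coefficients (assumed for illustration only).
MU_SOFT_TISSUE = 0.2  # per cm
MU_BONE = 0.5         # per cm

# A ray crossing 10 cm of soft tissue only.
ray_through_tissue_only = transmitted_fraction([(MU_SOFT_TISSUE, 10.0)])

# A ray crossing 8 cm of soft tissue and 2 cm of bone.
ray_through_bone = transmitted_fraction(
    [(MU_SOFT_TISSUE, 8.0), (MU_BONE, 2.0)]
)
```

With these assumed numbers, less of the beam survives the path that includes bone, which is why bone receives lower exposure and appears bright in a radiogram.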

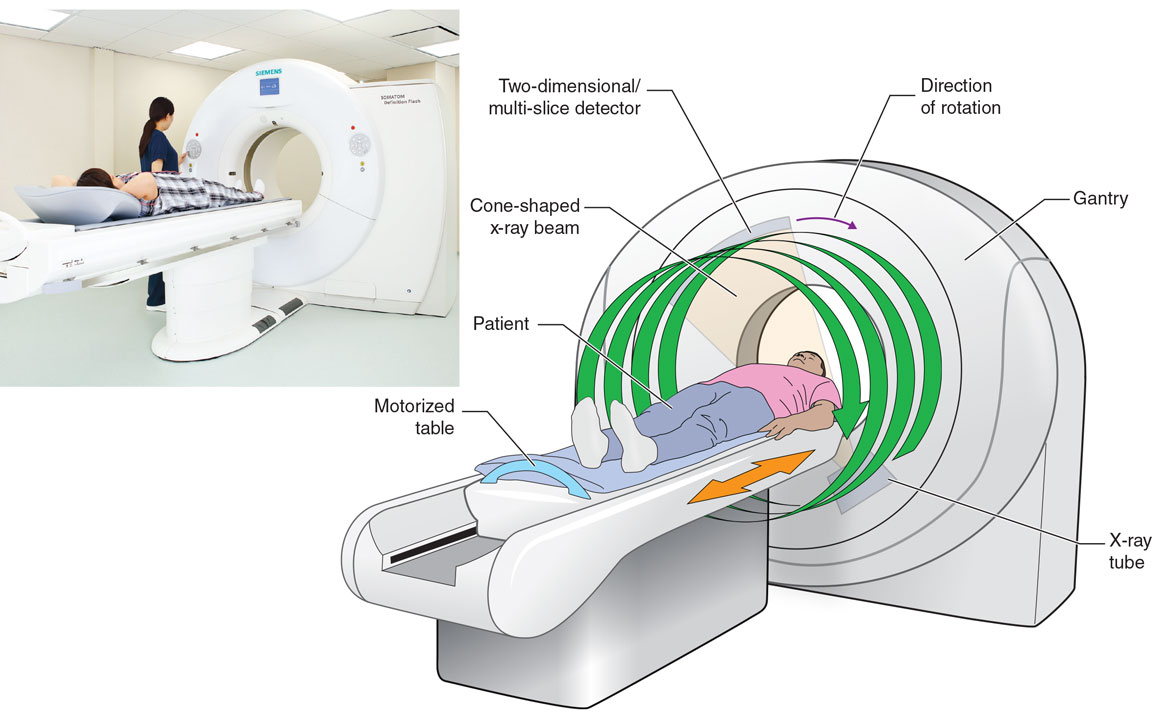

In the late 1960s, Godfrey Hounsfield, an engineer working on radar and guided missiles at the British conglomerate EMI, and Allan Cormack, a Tufts University mathematician, developed a way to put together multiple one-dimensional x-ray profiles from various angles to generate a cross-sectional image inside a patient. They called it computerized axial tomography (CAT), also referred to as computed tomography (CT). Tomography derives from the Greek word tomos, or section. A traditional CT scan combines many x-ray profiles by moving an x-ray source and detector assembly around a patient’s body, then computationally reconstructing two-dimensional images of the internal organs. Hounsfield and Cormack won a Nobel Prize in 1979 for their work.
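The idea of combining one-dimensional profiles into a cross section can be illustrated with a toy example. This sketch is not Hounsfield's or Cormack's method: it uses only two viewing angles and plain (unfiltered) back-projection on a tiny hypothetical grid, whereas real scanners use many angles and more refined reconstruction.

```python
def project(image):
    """Return two 1D profiles: sums along each row and along each column."""
    row_profile = [sum(row) for row in image]
    col_profile = [sum(col) for col in zip(*image)]
    return row_profile, col_profile

def back_project(row_profile, col_profile):
    """Smear each profile back across the grid and add the two results."""
    return [[r + c for c in col_profile] for r in row_profile]

# Hypothetical 4x4 "patient" with one dense spot at row 1, column 2.
phantom = [
    [0, 0, 0, 0],
    [0, 0, 5, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

rows, cols = project(phantom)
estimate = back_project(rows, cols)

# Location of the brightest pixel in the reconstructed estimate.
brightest = max(
    ((i, j) for i in range(4) for j in range(4)),
    key=lambda ij: estimate[ij[0]][ij[1]],
)
```

Even with two views, the brightest pixel of the estimate lands on the dense spot, although streaks remain along its row and column. Adding more angles and filtering the profiles is what suppresses those streaks in practice.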

Two-dimensional CT images offered a tremendous improvement on traditional x-ray radiograms, in which anatomical structures overlap along x-ray paths, but within decades the capabilities of medical imaging had been dramatically increased again by adding further image dimensions. Each new dimension represents a breakthrough in our ability to perform medical diagnoses and treat patients.

Both the first x-ray radiogram and the first CT images were two-dimensional, which limited their ability to capture our three-dimensional anatomy. Modern CT scanners generate 3D images by working in what’s called a spiral or helical cone-beam scanning mode. In that mode, an x-ray source and a 2D detector array rotate together while the patient is moved through the CT scanner gantry. This process results in a spiral trajectory of the x-ray focal spot relative to the patient, while the source emits a cone-shaped beam that covers the 2D detector array. The collected cone-beam data are then used to reconstruct a volumetric (3D) image within seconds.


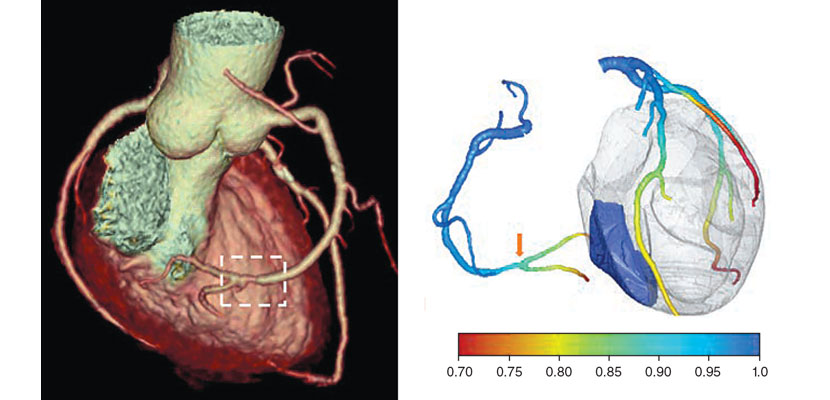

In the early 1990s, my colleagues and I formulated the first spiral cone-beam CT image reconstruction algorithm. Along with many of our peers, we later developed a number of more refined algorithms for this purpose. Although our first spiral cone-beam algorithm performed only approximate image reconstruction, modern algorithms enable highly accurate image reconstruction of much-improved image quality at much-reduced radiation dosages. A scan today can generate a 3D model of a patient’s heart that includes color-coded identification of different plaques and tissues along with detailed information about blood flow and blockages in arteries; intra-abdominal scans can detect abnormalities with 95 percent accuracy. In total, there are about 200 million CT scans worldwide annually, most of which use the spiral cone-beam mode.

Efforts to develop better treatments for cardiac disease, the leading cause of death worldwide, prompted the push for four-dimensional CT scans, that is, 3D scans that incorporate the added dimension of time. A way to capture rapid and irregular heart motion remained elusive until the early 2000s, when accelerated CT scanning was combined with advanced image reconstruction algorithms aided by an individual electrocardiogram (ECG), which records heart activity in a graph. Both technologies were major engineering challenges. To capture the rapid motion of a beating heart, scanning needed to happen much faster than was possible before. But spinning the CT gantries at higher rates resulted in extreme g-forces that required new, high-precision hardware. Engineers then needed to create more sophisticated algorithms to solve the mathematical problem of how to accurately match data segments with cardiac cycles.

With the new hardware, the x-ray source and detector could rotate three to four turns per second while the ECG-aided algorithms synchronized the collected data segments to their corresponding heartbeat phases in order to reconstruct an image at each ECG phase. As a result, human cardiac motion could in many cases be effectively frozen in time, revealing such features as cardiac vessel narrowing and calcification.
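The matching of data segments to heartbeat phases can be sketched as a simple binning step. This is a minimal illustration under stated assumptions, not a clinical algorithm: the function names, the R-peak times (the ECG spikes that mark the start of each beat), and the projection timestamps are all hypothetical.

```python
from bisect import bisect_right

def cardiac_phase(t, r_peaks):
    """Phase in [0, 1) of time t within its heartbeat.

    r_peaks: sorted times (seconds) of successive ECG R-peaks.
    Returns None when t falls outside the recorded beats.
    """
    i = bisect_right(r_peaks, t) - 1
    if i < 0 or i + 1 >= len(r_peaks):
        return None
    start, end = r_peaks[i], r_peaks[i + 1]
    return (t - start) / (end - start)

def gate(projection_times, r_peaks, n_bins):
    """Group projection timestamps by cardiac phase bin."""
    bins = [[] for _ in range(n_bins)]
    for t in projection_times:
        phase = cardiac_phase(t, r_peaks)
        if phase is not None:
            # min() guards against a phase that rounds up to exactly 1.0.
            index = min(int(phase * n_bins), n_bins - 1)
            bins[index].append(t)
    return bins

# Hypothetical irregular heartbeat: beats last 1.0 s, 0.8 s, and 1.0 s.
r_peaks = [0.0, 1.0, 1.8, 2.8]
projection_times = [0.25, 0.75, 1.2, 1.6, 2.05, 2.55]

bins = gate(projection_times, r_peaks, n_bins=2)
```

Because phase is measured relative to each beat's own length, data from beats of different durations end up in the same bin, and each bin can then be reconstructed as one "frozen" image of the heart.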

Wikimedia Commons/Wellcome Images; National Library of Medicine/History of Medicine

For example, from a coronary CT scan, an algorithm can build an individualized blood flow model of the beating heart. It does this by solving partial differential equations, which involve rates of change that depend on multiple variables, in order to describe blood flow through biological tissues and to extract physiological parameters of the coronary arteries. Because every patient is different, a personalized model can help optimize diagnosis and tailor treatment for each individual. Cardiac CT has now become the first-line imaging technique for patients with suspected coronary artery disease.

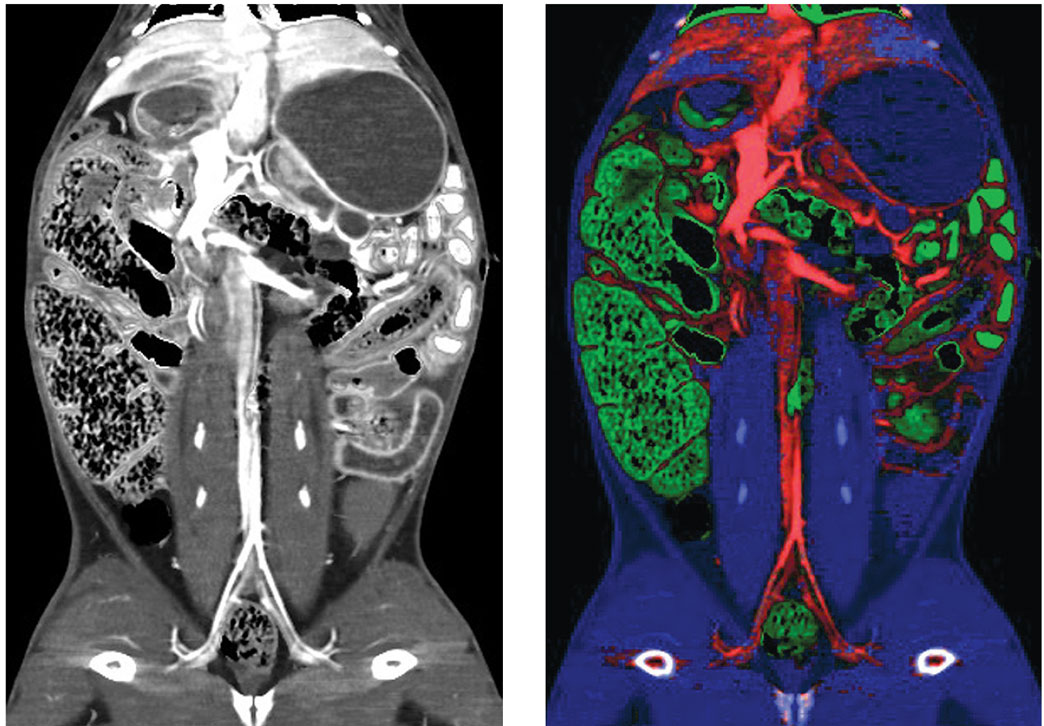

Going beyond 4D, biomedical engineers have added a fifth dimension to CT by incorporating spectral data, considering the wavelengths of the x-ray radiation used to create images. This so-called photon-counting CT creates images that are precisely colorized to differentiate between biological materials as well as to track injected contrast agents.

Wikimedia Commons

Previously, all x-ray radiograms and CT images were in grayscale. But the potential for color was there. Just as an incandescent light emits a whole range of colors, from longer red wavelengths to shorter violet ones, a common x-ray tube emits photons over a wide spectrum of x-ray “colors,” with wavelengths in a range of nanometer lengths. The most common type of CT scanner, however, uses an energy-integrating detector array that accumulates all the energy deposits from incoming x-ray photons together into a single total. As a result, the differences between the energy-specific deposits are lost in the final image, leaving just shades of gray.

Over the past several years, a major technological frontier in the CT field has been the photon-counting CT detector, which individually records x-ray photons along with their wavelengths or energy levels, from soft x-rays (longer wavelengths and less energetic) to hard x-rays (shorter wavelengths and more energetic). In this way, the photon-counting detector can sense the whole x-ray spectrum. The transition from grayscale CT to photon-counting spectral CT is happening now in much the same way as the transition from grayscale to color television happened almost a century ago.
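The difference between the two detector types can be illustrated with a toy model. This is a sketch under stated assumptions rather than a description of any vendor's hardware: the photon energies and bin edges are hypothetical, and the only physics used is the standard relation between photon energy and wavelength, λ (nm) ≈ 1.23984 / E (keV).

```python
def wavelength_nm(energy_kev):
    """Photon wavelength in nanometers from energy in keV (lambda = hc / E)."""
    return 1.23984 / energy_kev

def energy_integrating(photon_energies_kev):
    """Sum all deposited energy into one number, as a grayscale detector does."""
    return sum(photon_energies_kev)

def photon_counting(photon_energies_kev, bin_edges_kev):
    """Count photons separately in each energy bin [edge_k, edge_k+1)."""
    counts = [0] * (len(bin_edges_kev) - 1)
    for energy in photon_energies_kev:
        for k in range(len(counts)):
            if bin_edges_kev[k] <= energy < bin_edges_kev[k + 1]:
                counts[k] += 1
                break
    return counts

# Two hypothetical beams that deposit the same total energy (120 keV).
beam_a = [30, 30, 60]
beam_b = [40, 80]
edges = [20, 50, 100]  # assumed bins: "soft" 20-50 keV, "hard" 50-100 keV

total_a = energy_integrating(beam_a)
total_b = energy_integrating(beam_b)
counts_a = photon_counting(beam_a, edges)
counts_b = photon_counting(beam_b, edges)
```

Here the energy-integrating totals are identical, so the two beams would look the same in grayscale, while the per-bin counts differ and preserve the spectral information.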


Five-dimensional scanners use two main types of photon-counting detectors: cadmium zinc telluride–based ones used by MARS Bioimaging and Siemens, and silicon-based ones used by General Electric. The former type is much more compact because of its higher-density sensing material, which enables it to absorb and detect x-rays with thinner sensors. However, the latter type is more reliable because it is based on more mature technology that has been used for decades in the semiconductor industry.

The major clinical advantage of either type of photon-counting spectral CT is its ability to distinguish between similar but subtly different materials. For example, it can better differentiate stable plaques from risky ones in main blood vessels that are responsible for heart attacks and strokes. High-risk or vulnerable plaque has three signs: a lipid core (submillimeter to a few millimeters in size), a thin fibrous cap (less than 100 micrometers in thickness), and microcalcifications (on the order of tens of micrometers). Stable plaques do not contain these structures. X-rays are absorbed differently by each of these materials, depending on the material’s characteristics and the photon energies used in the scan.
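One common way to exploit energy-dependent absorption is two-material decomposition: if two materials attenuate differently in a low-energy and a high-energy bin, two measurements along the same ray are enough to solve for how much of each material the ray crossed. The sketch below assumes a simple linear model; the function name and every attenuation coefficient are hypothetical placeholders, not values for real plaque components.

```python
def decompose(p_low, p_high, mu):
    """Solve for the thicknesses of two materials along one ray.

    p_low, p_high: measured attenuation (-ln(I / I0)) in each energy bin.
    mu: ((a_low, b_low), (a_high, b_high)), the attenuation coefficients
        of materials A and B in the low and high energy bins.
    Model: p_low  = a_low  * t_a + b_low  * t_b
           p_high = a_high * t_a + b_high * t_b
    """
    (a_low, b_low), (a_high, b_high) = mu
    det = a_low * b_high - b_low * a_high
    if det == 0:
        raise ValueError("materials are indistinguishable at these energies")
    # Cramer's rule for the 2x2 linear system.
    t_a = (p_low * b_high - b_low * p_high) / det
    t_b = (a_low * p_high - p_low * a_high) / det
    return t_a, t_b

# Hypothetical coefficients (per cm): A is lipid-like, B is calcium-like.
mu = ((0.4, 1.0), (0.2, 0.3))

# Simulate a ray that crossed 2.0 cm of A and 0.5 cm of B.
p_low = 0.4 * 2.0 + 1.0 * 0.5
p_high = 0.2 * 2.0 + 0.3 * 0.5

t_a, t_b = decompose(p_low, p_high, mu)
```

A single energy-integrated measurement could not separate the two thicknesses, because many combinations of A and B produce the same total attenuation.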

A prerequisite for precision medicine is to have an understanding of where, and for how long, all the roughly 100,000 types of key proteins are in the human body. Each protein adds another type of information, and hence another dimension, to the scan. To further improve CT imaging, an increasing number of contrast agents and nanoparticles are being developed to be injectable into humans and animals for more accurate and reliable quantification of biologically relevant features, including proteins. These agents, like bones and tissues, each have their own spectrally distinct attenuation signatures, and they can be selectively bound to molecular and cellular features or accumulated in vasculature to enhance image-based biomarkers.

Dr. Ofer Benjaminov, Rabin Medical Center, Israel

For instance, a cancer patient undergoing therapy today can be injected with various nanoparticles to label cancer cells and therapeutic drugs. Then a nanoparticle-enhanced CT scan can reveal their distributions in the patient’s body. A major advantage of this method over previous anatomical-only imaging is that it can more accurately distinguish malignant from benign tumors. This method differs from photon-counting CT scans because benign and malignant tumors might have similar densities and thus appear to be similar based on photon counting, whereas a nanoparticle can be tagged only to cancerous cells.

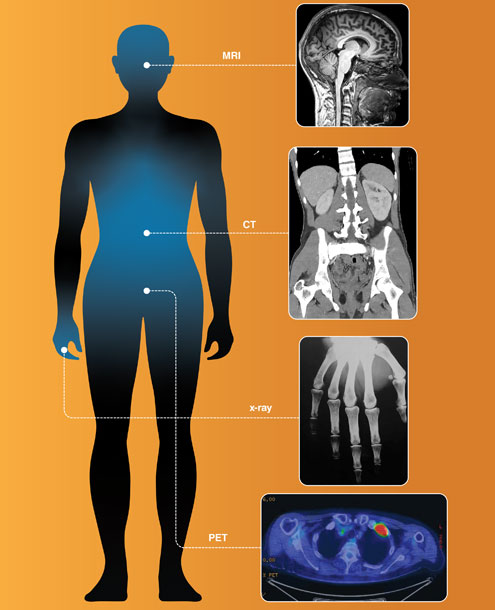

CT alone is insufficient to image all functions and forms of biological targets in a patient. Inspired by x-ray radiography and CT, other researchers have developed a number of additional imaging technologies that are now in widespread use, including magnetic resonance imaging (MRI), positron emission tomography (PET), single-photon emission computed tomography (SPECT), ultrasound imaging, optical coherence tomography (OCT), and photo-acoustic tomography (PAT). These various types of scans view the body through different lenses in terms of their unique physical interactions with biological tissues.

Courtesy of Benjamin Yeh, MD, UCSF

In the medical imaging field, researchers have been combining two or more of these technologies to perform what’s known as multimodality imaging. The first medical multimodality imaging success was the PET-CT scanner in 1998. In this type of machine, functional information from PET, which uses a radioactive tracer to label cancer regions, can be overlaid onto the anatomical structure defined by CT. More recently, Siemens designed the first PET-MRI scanner for the same purpose, because MRI images contain much richer soft tissue information than CT. This advance has enabled or improved important clinical applications, such as eliminating the radiation dose from CT and allowing doctors to identify the stage of progression for ailments including cancer, neurological disorders, and heart disease, all with greater accuracy than traditional imaging techniques.

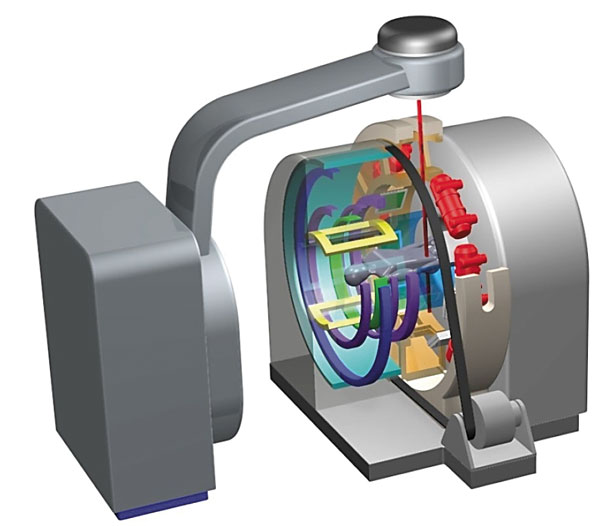

As a long-term and rather challenging goal, my colleagues and I hope to develop omni-tomography, for all-in-one and all-at-once imaging. All-in-one means that all tomographic imaging modalities are integrated into a single machine gantry. All-at-once means that diverse datasets can be collected simultaneously. This hybrid data stream would automatically register in space, time, and diverse contrasts to provide even higher-dimensional—and therefore more informative—imaging.

Adding imaginary dimensions is common in mathematics, but it would be revolutionary to medical imaging.

True omni-tomography is not yet possible, but as a step in that direction my collaborators and I have been promoting the idea of simultaneous CT-MRI. Such a CT-MRI machine would be ideal for an acute stroke patient, because the window of opportunity for effective treatment is within a few hours. In addition to speeding up diagnoses, simultaneous CT-MRI would combine dimensions of both images, reduce misregistration (image misalignment) between them, and optimize diagnostic performance.

For truly comprehensive biomedical analysis, we need to perform imaging studies at various scales. For example, x-ray imaging can cover six orders of magnitude in terms of image resolution and object size, from nanometer-sized subcellular features to cells and meter-sized human bodies. The case is similar with MRI, ultrasound, and other imaging modalities. This scale dimension is critically important (for example, to link a genetic change to a physiological outcome) because biological architectures are intrinsically organized at multiple scales.

Courtesy of Ge Wang, et al.

Artificial intelligence software that can determine the 3D structures of proteins from their amino acid sequences is now widely available—notably, Google DeepMind made its AlphaFold2 system free and open source in 2021. The advent of such powerful new tools suggests the enormous potential of medical imaging to produce much higher dimensional and much more complex datasets for us to look into life’s innermost secrets.

Now that medical imaging can provide multiple dimensions of information on the patient, the three spatial dimensions and one temporal dimension we perceive might seem to be limiting factors in interpreting all those data. One way to compensate for that is to combine medical scans from the real world with virtual datasets to create an augmented view of the human body. Doing so relies on using the imaginary number space in addition to the typical x-, y-, and z-axes we use to describe the “real” space of our day-to-day lives. This technique draws on the same types of technologies that define, among other things, virtual worlds like those we inhabit in online gaming.

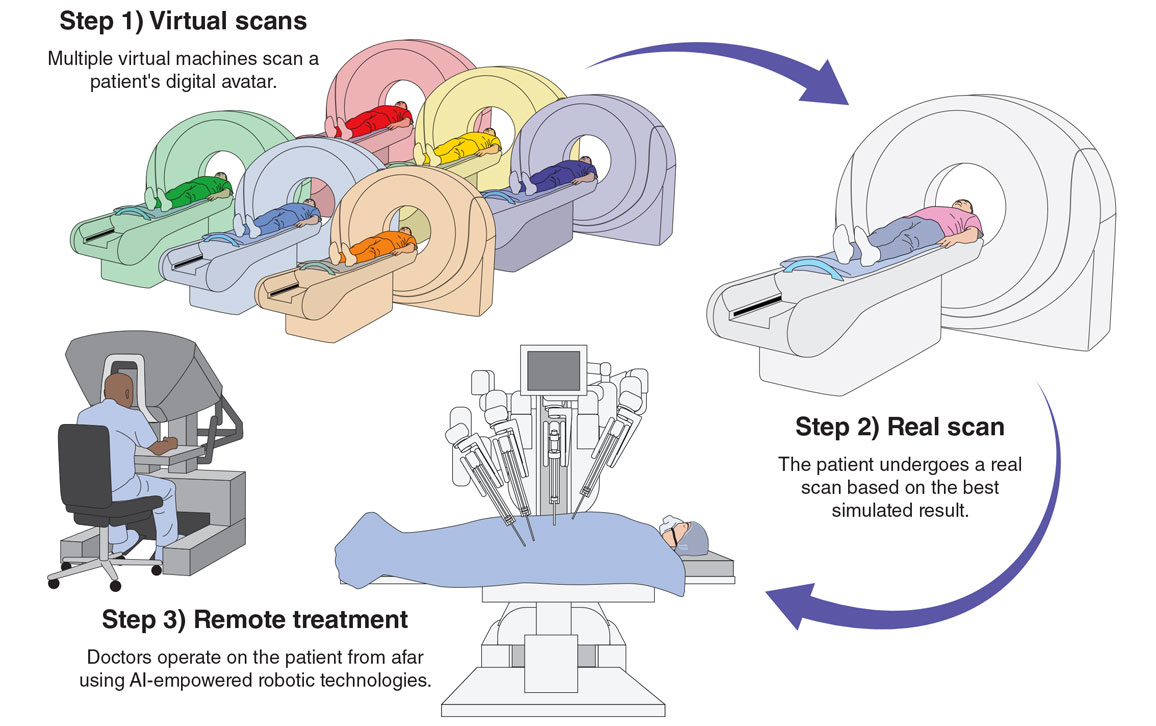

Looking forward, a number of cutting-edge technologies could come together to make medical imaging faster, more informative, and ultimately more lifesaving.

Information from virtual worlds, known in mathematics as complex-valued space, could enhance medical imaging through the so-called metaverse. In the metaverse, we could make digital twins of existing physical scanners and avatars of patients. Before the patient undergoes a real physical scan, their avatar could be modified to have different pathological features of relevance and scanned on a digital version of each scanner to determine which imaging technique would be most useful.

For example, a patient suspected of having coronary artery disease might have their avatar modified to display a pathology their physician would expect to find, such as narrowed vessels. After the avatar is put through a virtual scan on every type of machine, the corresponding AI software reconstructs and analyzes the images. Once the patient and their care team determine which result is most informative, an actual physical scan can take place. Finally, if it is clinically indicated, the patient could be automatically and even remotely operated on in a robotic surgical suite or radiotherapy facility.

Jason McAlexander

In addition to making diagnosis and treatment more personalized, effective, and efficient, with appropriate security and permission, the images, tomographic raw data, and other relevant information could be made available to researchers and regulatory agencies. Then another revolution would be possible: All these simulated and real images could be used in augmented clinical trials, perhaps to enhance the statistical power of the AI machines or to match similar cases to aid in health care decision-making.

The future health care metaverse that we envision would be a complex-valued mathematical space, orders of magnitude more powerful and informative than our real, 3D, physical space. Looking forward, I see a number of cutting-edge technologies and unprecedented ideas from many disciplines coming together to make medical imaging faster, more informative, and ultimately more lifesaving. A health care metaverse awaits where the boundaries between real and virtual worlds blur, and where AI models will enable intelligent medicine and prevention wherever and whenever it’s needed, with maximum accessibility for all patients.
