From the September-October 2011 issue, Volume 99, Number 5, Page 420

DOI: 10.1511/2011.92.420

PATTERN THEORY: The Stochastic Analysis of Real-World Signals. David Mumford and Agnès Desolneux. xii + 407 pp. A K Peters, 2010. $79.95.

A friend whispers in your ear, creating minute pressure fluctuations that make your eardrum flutter; milliseconds later you not only hear the sound but also understand the words and recognize the voice. When you turn to look at your friend, a mottled pattern of light and shadow flickers across your retina; milliseconds later, you see and identify a familiar face. How do people accomplish such amazing feats of perception? This is a question that interests workers in many disciplines, including neurobiology, psychology, computer science and engineering. In Pattern Theory the question becomes the subject of a mathematical inquiry.

David Mumford, the senior author of Pattern Theory, had an illustrious career in pure mathematics in the 1960s and 1970s, winning a Fields Medal and other honors. Then he turned to areas of applied mathematics, where he has done equally distinguished work and accumulated more awards, including a MacArthur fellowship and the National Medal of Science. Most of his early work was done at Harvard; since 1996 he has been at Brown University, where he has focused on the mathematics of perception, signal processing and similar problems.

Mumford’s coauthor, Agnès Desolneux, was a student at the École Normale Supérieure in 1998 when Mumford came to Paris to deliver a series of lectures on pattern theory. The present book is based on those lectures and on Desolneux’s notes made at the time, although more recent ideas have also been woven into the narrative. Desolneux is now an applied mathematician at the Université de Paris René Descartes.

A third presence in these pages—not as an author but as a presiding influence—is Ulf Grenander, who is Mumford’s colleague at Brown and the inventor of the approach to pattern theory advocated here. A reading of Grenander’s own works would provide a useful prequel or sequel to the Mumford-Desolneux volume. Grenander’s Elements of Pattern Theory (1996) is particularly accessible.

Perception entails not just sensing the world but also making sense of it. When you listen to orchestral music, you hear oboes, violins, timpani and so on, each playing distinct notes. But the sound waves reaching your ears do not come packaged as separate channels for the winds, the strings and the percussion section; the signal the ear detects is nothing more than air pressure changing as a function of time, p(t). In effect, the sound of the whole orchestra is condensed into a single wiggly line. Notes and chords, melodies and harmonies are all abstractions created by the interpretive brain; they are “hidden variables” to be inferred from an analysis of the signal.

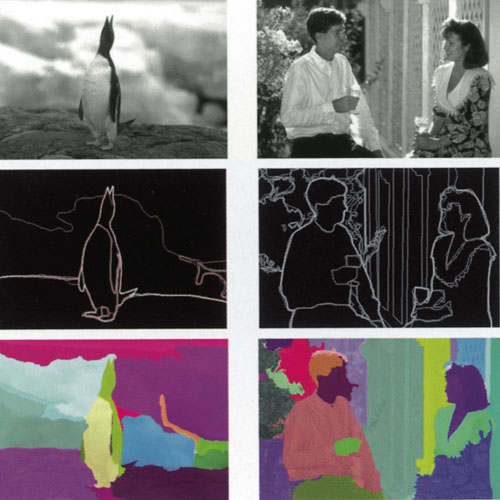

From Pattern Theory.

Vision involves a similar inferential process. The array of receptor cells in the retina of the eye is not too different from the array of light-sensitive elements in the sensor chip of a digital camera; they both record color and brightness as a function of position. But what we see when we look around the world is not a two-dimensional mosaic of discrete, colored pixels. Somehow we organize the flickering map of brightness and color into surfaces, textures, shapes and objects embedded in a three-dimensional space.

If we could understand how higher-level concepts such as musical notes and visual shapes emerge from raw sensory data, we might duplicate these wonders of perception with computer programs. Early efforts in this direction relied mainly on deductive, deterministic methods, in which specific features of the signal were taken as reliable indicators of structure or meaning. Mumford and Desolneux cite the example of speech recognition, where linguists compiled lists of audible features associated with various vowels and consonants. For example, the consonant sounds p and t are both classified as “anterior,” but p is “labial” whereas t is “coronal.” By detecting dozens of features like these, a program might be able to analyze the wiggly line of an audio signal and identify a sequence of speech sounds, which would then be assembled into words and sentences. Linguists worked hard to implement this rule-based plan for speech recognition, but the results were disappointing. Mumford and Desolneux remark:

> The identical story played out in vision, in parsing sentences from text, in expert systems, and so forth. In all cases, the initial hope was that deterministic laws of physics plus logical syllogisms for combining facts would give reliable methods for decoding the signals of the world in all modalities. These simple approaches seem to always fail because the signals are too variable and the hidden variables too subtly encoded.

An alternative strategy has gained favor in recent years. It is usually described as “statistical” or “probabilistic,” but these terms don’t tell the whole story. There’s more to it than just assigning probabilities to those elusive hidden variables. The methods presented in Pattern Theory require active engagement with the data—not just scanning for a sequence of telltale features but also forming and evaluating hypotheses, building conceptual models, and applying iterative procedures to refine the models or replace them when necessary.

The nature of this “active” approach to perception is illustrated by an end-of-chapter exercise in Pattern Theory. A computer program generates some meaningless, random text according to a known probability distribution, and then it removes all the word spaces from the text. The following small example is based on the probability of sequences of letters in the text of Huckleberry Finn:

donetimeofthewidowitgotaghost

Your assignment is to restore the word boundaries—to break the garbled string of letters into a sequence of plausible words. Mumford and Desolneux are looking for an algorithmic solution, but it’s also fun to try the puzzle without the aid of a computer. How does the human mind solve such problems? When I stare at the string of letters, I find that a few words pop out spontaneously, brought to my attention by some process operating just below the level of conscious awareness; then, in a more deliberate phase, I search left and right for a set of words consistent with the initial choice. In other words, I find myself employing an iterative scheme of forming and testing hypotheses, with backtracking when necessary. (For the example given above, the reading that first leapt out at me was “done time of the widow it got a ghost,” but there are many other possibilities, such as “do net i me oft hew i do wit go tag host.”)
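For readers who want to try the algorithmic route, here is a minimal sketch of one possible approach: dynamic programming over split points, scoring each candidate word by a log-probability. It is not the solution given in the book. The vocabulary and its probabilities are invented for illustration, and the names `WORD_PROB` and `segment` are hypothetical.

```python
import math

# Hypothetical toy vocabulary; the probabilities are made up for illustration
# and are not taken from the book or from Huckleberry Finn.
WORD_PROB = {
    "done": 0.05, "time": 0.05, "of": 0.10, "the": 0.15, "widow": 0.02,
    "it": 0.08, "got": 0.05, "a": 0.10, "ghost": 0.02,
    "do": 0.05, "net": 0.01, "i": 0.08, "me": 0.05, "oft": 0.001,
    "hew": 0.001, "wit": 0.005, "go": 0.05, "tag": 0.005, "host": 0.01,
}
MAX_WORD_LEN = max(len(word) for word in WORD_PROB)


def segment(text):
    """Return (log_probability, words) for the best split, or None if none exists.

    best[i] stores the highest-scoring split of text[:i] found so far.
    """
    best = [None] * (len(text) + 1)
    best[0] = (0.0, [])
    for end in range(1, len(text) + 1):
        for start in range(max(0, end - MAX_WORD_LEN), end):
            word = text[start:end]
            if best[start] is None or word not in WORD_PROB:
                continue
            score = best[start][0] + math.log(WORD_PROB[word])
            if best[end] is None or score > best[end][0]:
                best[end] = (score, best[start][1] + [word])
    return best[len(text)]


result = segment("donetimeofthewidowitgotaghost")
if result is not None:
    print(" ".join(result[1]))  # prints "done time of the widow it got a ghost"
```

With these invented probabilities the common words win, so the program settles on the same reading that first leapt out at me. A different vocabulary or a model that scores word pairs could favor another split.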

Although this exercise is somewhat artificial (since we don’t ordinarily omit word spaces in written language), segmentation is a crucial step in understanding many other kinds of signals, including speech and genetic sequences. And the problem of segmenting images into distinct objects or features is quite tricky. The usual starting point is to identify contours with strong contrast—boundaries where the image is dark on one side, light on the other. But only some of these high-contrast edges are real boundaries of objects; others might be shadows, for example. And often there are physical boundaries with little contrast. To deal with these challenges, Mumford and Desolneux introduce some elaborate algorithms that come from rather far afield. In particular they discuss the Ising model, which began in physics as a theory of ferromagnetic materials. In the image-processing version of the model, nearby parts of the image “want” to belong to the same object, just as nearby magnetic atoms “want” to adopt the same orientation. The ultimate interpretation of the image emerges gradually as conflicting constraints are resolved. It’s intriguing to think that the visual cortex of the brain might be routinely solving Ising models to find the configuration of lowest energy—a process that’s known to be computationally hard.
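The flavor of the Ising approach can be conveyed with a small sketch, under loud assumptions: pixels take the values -1 or +1, the energy rewards agreement between neighbors and agreement with the observed image, and the search is a simple greedy descent known as iterated conditional modes. This is a stand-in chosen for brevity, not the procedure developed in the book, and the parameter values `coupling` and `fidelity` are arbitrary.

```python
def energy(labels, noisy, coupling=1.0, fidelity=1.5):
    """Ising-style energy: lower when neighbors agree and labels match the data."""
    rows, cols = len(labels), len(labels[0])
    total = 0.0
    for r in range(rows):
        for c in range(cols):
            if r + 1 < rows:
                total -= coupling * labels[r][c] * labels[r + 1][c]
            if c + 1 < cols:
                total -= coupling * labels[r][c] * labels[r][c + 1]
            total -= fidelity * labels[r][c] * noisy[r][c]
    return total


def icm_denoise(noisy, coupling=1.0, fidelity=1.5, sweeps=5):
    """Greedy descent: set each pixel to the sign that lowers its local energy."""
    rows, cols = len(noisy), len(noisy[0])
    labels = [row[:] for row in noisy]  # start from the observed image
    for _ in range(sweeps):
        changed = False
        for r in range(rows):
            for c in range(cols):
                neighbor_sum = sum(
                    labels[rr][cc]
                    for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                    if 0 <= rr < rows and 0 <= cc < cols
                )
                # The pixel's energy is -label * field, so the sign of the
                # field is the locally best label.
                field = coupling * neighbor_sum + fidelity * noisy[r][c]
                new_label = 1 if field >= 0 else -1
                if new_label != labels[r][c]:
                    labels[r][c] = new_label
                    changed = True
        if not changed:
            break
    return labels


# A dark region on the left, a light region on the right, and one stray pixel.
clean = [[-1, -1, 1, 1, 1] for _ in range(5)]
noisy = [row[:] for row in clean]
noisy[2][3] = -1
restored = icm_denoise(noisy)
```

Greedy descent of this kind can stall in a local minimum, which is one reason finding the true lowest-energy configuration is regarded as hard in general.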

Yet, whatever the brain is doing when we see and hear, the task can’t be too hard, or the hidden variables too subtly encoded. Mumford and Desolneux suggest one limit on pattern complexity:

> In the movie Contact, the main character discovers life in outer space because it broadcasts a signal encoding the primes from 2 to 101. If infants had to recognize this sort of pattern in order to learn to speak, they would never succeed. By and large, the patterns in the signals received by our senses are correctly learned by infants. . . . This is a marvelous fact, and pattern theory attempts to understand why this is so.

Pattern Theory is not intended as a practical guide to the design of algorithms for signal or image processing; the “theory” in the title is to be taken seriously. The aim is to explore the kinds of patterns that appear frequently in our environment, in the hope of identifying and understanding some characteristic themes or features. This emphasis is most clearly apparent in the book’s final chapter, where whole databases of images are submitted to statistical analysis, as a way of describing the generic or bulk properties of the visual world. (The result in a nutshell: high kurtosis, or in other words an overabundance of extremes and outliers.)
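To make "high kurtosis" concrete, here is a small sketch that computes kurtosis as the fourth central moment divided by the squared variance; a Gaussian distribution scores 3 on this scale. The toy row of pixel values is invented for illustration and is not data from the book, and the helper name `kurtosis` is my own.

```python
def kurtosis(samples):
    """Fourth central moment over squared variance (3.0 for a Gaussian).

    Assumes the samples are not all identical, since the variance would be zero.
    """
    n = len(samples)
    mean = sum(samples) / n
    m2 = sum((x - mean) ** 2 for x in samples) / n
    m4 = sum((x - mean) ** 4 for x in samples) / n
    return m4 / (m2 ** 2)


# A hypothetical row of pixels: a dark region next to a bright region.
row = [10] * 50 + [200] * 50
# Differences between adjacent pixels: almost all zero, with one large jump.
diffs = [b - a for a, b in zip(row, row[1:])]

print(kurtosis(diffs) > 3)  # prints True
```

The single edge between the two regions is the outlier here; almost every other difference is zero, which is the pattern of many small values and a few extremes that the summary describes.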

Pattern Theory is also not an easy read. A decade ago, Mumford and two coauthors published Indra’s Pearls, a gorgeously illustrated essay on the symmetries of the geometric transformations called Möbius mappings. That book reached out to a broad spectrum of readers, who might come to this volume expecting something similar. But Pattern Theory is meant for a narrower audience; it demands closer attention and harder work to discover the hidden variables.

Brian Hayes is Senior Writer for American Scientist. He is the author most recently of Group Theory in the Bedroom, and Other Mathematical Diversions (Hill and Wang, 2008).
