Computational Photography

By Brian Hayes

New cameras don't just capture photons; they compute pictures


DOI: 10.1511/2008.70.94

The digital camera has brought a revolutionary shift in the nature of photography, sweeping aside more than 150 years of technology based on the weird and wonderful photochemistry of silver halide crystals. Curiously, though, the camera itself has come through this transformation with remarkably little change. A digital camera has a silicon sensor where the film used to go, and there's a new display screen on the back, but the lens and shutter and the rest of the optical system work just as they always have, and so do most of the controls. The images that come out of the camera also look much the same—at least until you examine them microscopically.


But further changes in the art and science of photography may be coming soon. Imaging laboratories are experimenting with cameras that don't merely digitize an image but also perform extensive computations on the image data. Some of the experiments seek to improve or augment current photographic practices, for example by boosting the dynamic range of an image (preserving detail in both the brightest and dimmest areas) or by increasing the depth of field (so that both near and far objects remain in focus). Other innovations would give the photographer control over factors such as motion blur. And the wildest ideas challenge the very notion of the photograph as a realistic representation. Future cameras might allow a photographer to record a scene and then alter the lighting or shift the point of view, or even insert fictitious objects. Or a camera might have a setting that would cause it to render images in the style of watercolors or pen-and-ink drawings.

Digital cameras already do more computing than you might think. The image sensor inside the camera is a rectangular array of tiny light-sensitive semiconductor devices called photosites. The image created by the camera is also a rectangular array, made up of colored pixels. Hence you might suppose there's a simple one-to-one mapping between the photosites and the pixels: Each photosite would measure the intensity and the color of the light falling on its surface and assign those values to the corresponding pixel in the image. But that's not the way it's done.

In most cameras, the sensor array is overlain by a patchwork pattern of red, green and blue filters, so that a photosite receives light in only one band of wavelengths. In the final image, however, every pixel includes all three color components. The pixels get their colors through a computational process called de-mosaicing, in which signals from nearby photosites are interpolated in various ways. A single pixel might combine information from a dozen photosites.
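The interpolation step can be sketched in a few lines. This is a minimal illustration, not the algorithm any particular camera uses: it assumes an RGGB filter layout and simply averages the same-colored photosites in each pixel's 3×3 neighborhood. The names `bayer_color` and `demosaic` are made up for this example.

```python
def bayer_color(row, col):
    """Filter color over a photosite, assuming an RGGB layout (an assumption)."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic(mosaic):
    """Give every pixel three color components by averaging the
    same-colored photosites in its 3x3 neighborhood.

    mosaic is a list of rows of single intensity readings, at least
    2x2 in size. Returns a list of rows of (r, g, b) tuples.
    """
    height, width = len(mosaic), len(mosaic[0])
    image = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            # Visit the pixel itself and its neighbors, clipped at the border.
            for rr in range(max(0, r - 1), min(height, r + 2)):
                for cc in range(max(0, c - 1), min(width, c + 2)):
                    color = bayer_color(rr, cc)
                    sums[color] += mosaic[rr][cc]
                    counts[color] += 1
            out_row.append(tuple(sums[k] / counts[k] for k in "RGB"))
        image.append(out_row)
    return image
```

Production demosaicing is considerably more elaborate (it tries to follow edges rather than blur across them), but the sketch shows why one output pixel draws on many photosites.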

The image-processing computer in the camera is also likely to apply a "sharpening" algorithm, accentuating edges and abrupt transitions. It may adjust contrast and color balance as well, and then compress the data for more-efficient storage. Given all this internal computation, it seems a digital camera is not simply a passive recording device. It doesn't take pictures; it makes them. When the sensor intercepts a pattern of illumination, that's only the start of the process that creates an image.

Up to now, this algorithmic wizardry has been directed toward making digital pictures look as much as possible like their wet-chemistry forebears. But a camera equipped with a computer can run more ambitious or fanciful programs. Images from such a computational camera might capture aspects of reality that other cameras miss.

We live immersed in a field of light. At every point in space, rays of light arrive from every possible direction. Many of the new techniques of computational photography work by extracting more information from this luminous field.

Here's a thought experiment: Remove an image sensor from its camera and mount it facing a flat-panel display screen. Suppose both the sensor and the display are square arrays of size 1,000 × 1,000; to keep things simple, assume they are monochromatic devices. The pixels on the surface of the panel emit light, with the intensity varying from point to point depending on the pattern displayed. Each pixel's light radiates outward to reach all the photosites of the sensor. Likewise each photosite receives light from all the display pixels. With a million emitters and a million receivers, there are 10^12 interactions. What kind of image does the sensor produce? The answer is: A total blur. The sensor captures a vast amount of information about the energy radiated by the display, but that information is smeared across the entire array and cannot readily be recovered.

Now interpose a pinhole between the display and the sensor. If the aperture is small enough, each display pixel illuminates exactly one sensor photosite, yielding a sharp image. But clarity comes at a price, namely throwing away all but a millionth of the incident light. Instead of having 10^12 exchanges between pixels and photosites, there are only 10^6.

A lens is less wasteful than a pinhole: It bends light, so that an entire cone of rays emanating from a pixel is made to reconverge on a photosite. But if the lens does its job correctly, it still enforces a one-pixel, one-photosite rule. Moreover, objects are in focus only if their distance from the lens is exactly right; rays originating at other distances are focused to a disk rather than a point, causing blur.

Photography with any conventional camera—digital or analog—is an art of compromise. Open up the lens to a wide aperture and it gathers plenty of light, but this setting also limits depth of field; you can't get both ends of a horse in focus. A slower shutter (longer exposure time) allows you to stop down the lens and thereby increase the depth of field; but then the horse comes out unblurred only if it stands perfectly still. A fast shutter and a narrow aperture alleviate the problems of depth of field and motion blur, but the sensor receives so few photons that the image is mottled by random noise.
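The compromise can be put in numbers with the standard exposure value, EV = log2(N²/t), where N is the f-number and t the shutter time in seconds. Two settings with the same EV admit the same amount of light per unit of sensor area. The particular settings below are illustrative choices, not figures from the experiments described here.

```python
import math


def exposure_value(f_number, shutter_seconds):
    """Standard exposure value: EV = log2(N^2 / t).

    Each increase of 1 EV halves the light reaching the sensor.
    """
    return math.log2(f_number ** 2 / shutter_seconds)


# Wide aperture with a fast shutter: shallow depth of field, little motion blur.
wide_and_fast = exposure_value(2.8, 1 / 500)

# Narrow aperture with a slow shutter: deep focus, but the horse must hold still.
narrow_and_slow = exposure_value(8.0, 1 / 60)

# Narrow aperture AND fast shutter: the sensor gets roughly 3 stops less light.
stops_lost = exposure_value(8.0, 1 / 500) - wide_and_fast
```

The first two settings come out nearly equal, which is the trade a photographer makes every day; the third is the combination that leaves the image starved of photons.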

Computational photography can ease some of these constraints. In particular, capturing additional information about the light field allows focus and depth of field to be corrected after the fact. Other techniques can remove motion blur.

A digital camera sensor registers the intensity of light falling on each photosite but tells us nothing about where the light came from. To record the full light field we would need a sensor that measured both the intensity and the direction of every incident light ray. Thus the information recorded at each photosite would be not just a single number (the total intensity) but a complex data structure (giving the intensity in each of many directions). As yet, no sensor chip can accomplish this feat on its own, but the effect can be approximated with extra hardware. The underlying principles were explored in the early 1990s by Edward H. Adelson and John Y. A. Yang of the Massachusetts Institute of Technology.

One approach to recording the light field is to construct a gridlike array of many cameras, each with its own lens and photosensor. The cameras produce multiple images of the same scene, but the images are not quite identical because each camera views the scene from a slightly different perspective. Rays of light coming from the same point in the scene register at a different point on each camera's sensor. By combining information from all the cameras, it's possible to reconstruct the light field. (I'll return below to the question of how this is done.)

Experiments with camera arrays began in the 1990s. In one recent project Bennett Wilburn and several colleagues at Stanford University built a bookcase-size array of 96 video cameras, connected to four computers that digest the high-speed stream of data. The array allows "synthetic aperture photography," analogous to a technique used with radio telescopes and radar antennas.

Images courtesy of Ren Ng.

A rack of 96 cameras is not something you'd want to lug along on a family vacation. Ren Ng and another Stanford group (Marc Levoy, Mathieu Brédif, Gene Duval, Mark Horowitz and Pat Hanrahan) implemented a conceptually similar scheme in a smaller package. Instead of ganging together many separate cameras, they inserted an array of "microlenses" just in front of the sensor chip inside a single camera. The camera is still equipped with its standard main lens, shutter and aperture control. Each microlens focuses an image of the main lens aperture onto a region of the sensor chip. Thus instead of one large image, the sensor sees many small images, viewing the scene from slightly different angles.


Whereas a normal photograph is two-dimensional, a light field has at least four dimensions. For each element of the field, two coordinates specify position in the picture plane and another two coordinates represent direction (perhaps as angles in elevation and azimuth). Even though the sensor in the microlens camera is merely a planar array, the partitioning of its surface into subimages allows the two extra dimensions of directional information to be recovered. One demonstration of this fact appears in the light-field photograph of a sheaf of crayons reproduced at right. The image was made at close range, and so there are substantial angular differences across the area of the camera's sensor. Selecting one subimage or another changes the point of view. Note that these shifts in perspective are not merely geometric transformations such as the scalings or warpings that can be applied to an ordinary photograph. The views present different information; for example, some objects are occluded in one view but not in another.
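Selecting a point of view from the raw sensor data can be sketched as a slicing operation. The sketch assumes an idealized layout in which every microlens covers an exact `block` × `block` square of photosites aligned with the sensor grid; a real camera would need calibration to establish that mapping. The function name `subaperture_view` is hypothetical.

```python
def subaperture_view(raw, block, u, v):
    """Extract one point of view from an idealized microlens image.

    raw   -- list of rows of photosite readings
    block -- photosites per microlens along each axis (assumed exact)
    u, v  -- row and column offset under each microlens, 0 <= u, v < block

    Taking the photosite at the same offset under every microlens
    collects the rays that arrived from one direction, which yields an
    image of the scene as seen through one part of the main lens.
    """
    return [row[v::block] for row in raw[u::block]]


def all_views(raw, block):
    """Reorganize the flat sensor image into a 4D structure indexed
    first by direction (u, v), then by position within the view."""
    return {(u, v): subaperture_view(raw, block, u, v)
            for u in range(block) for v in range(block)}
```

The dictionary returned by `all_views` makes the four dimensions explicit: two indices choose the direction, and two more choose the position in the picture plane.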

Shifting the point of view is one of the simpler operations made possible by a light-field camera; less obvious is the ability to adjust focus and depth of field.

When the image of an object is out of focus, light that ought to be concentrated on one photosite is spread out over several neighboring sites—covering an area known as the circle of confusion. The extent of the spreading depends on the object's distance from the camera, compared with the ideal distance for the focal setting of the lens. If the actual distance is known, then the size of the circle of confusion can be calculated, and the blurring can be undone algorithmically. In essence, light is subtracted from the pixels it has leaked into and is restored to its correct place. The operation has to be repeated for each pixel.
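For an idealized thin lens the size of the circle of confusion follows from similar triangles. The function below is a sketch under that thin-lens assumption, with all lengths in the same unit; the numbers in the example are arbitrary.

```python
def circle_of_confusion(focal_length, f_number, focus_distance, object_distance):
    """Diameter of the blur circle on the sensor for a thin lens.

    focal_length    -- focal length of the lens
    f_number        -- aperture setting N (aperture diameter = focal_length / N)
    focus_distance  -- distance at which the lens is focused
    object_distance -- actual distance of the object

    All lengths share one unit (for example millimeters), and the focus
    distance must exceed the focal length.
    """
    aperture = focal_length / f_number
    magnification = focal_length / (focus_distance - focal_length)
    defocus = abs(object_distance - focus_distance) / object_distance
    return aperture * defocus * magnification


# A 50 mm lens at f/2 focused at 2 m, with the object at 4 m (arbitrary values).
blur_mm = circle_of_confusion(50.0, 2.0, 2000.0, 4000.0)
```

With these values the blur disk is roughly a third of a millimeter across, which on a sensor with photosites a few micrometers wide spans dozens of pixels. Stopping the lens down shrinks the disk in direct proportion to the aperture diameter.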

To put this scheme into action, we need to know the distance from the camera to each point in the scene—the point's depth. For a conventional photograph, depth cues are hard to come by, but the light-field camera encodes a depth map within the image data. The key is parallax: an object's apparent shift in position when the viewer moves. In general, an object will occupy a slightly different set of pixels in each of the subimages of the microlens camera; the magnitude and direction of the displacements depend on the object's depth within the scene. The depth information is similar to that in a stereoscopic photograph, but based on data from many images instead of just two.

Recording a four-dimensional light field allows for more than just fixing a misfocused image. With appropriate software for viewing the stored data set, the photographer can move the plane of focus back and forth through the scene, or can create a composite image with high depth of field, where all planes are in focus. With today's cameras, focus is something you have to get right before you click the shutter, but in the future it could join other parameters (such as color and contrast) that can be adjusted after the fact.
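One widely used way to think about refocusing after the fact is "shift and add": slide each view by an amount proportional to its offset from the center, then average. Objects whose parallax matches the chosen shift line up and come out sharp; everything else is smeared. The sketch below works on one-dimensional views with whole-pixel shifts, and it is an illustration of the idea rather than the procedure used in any of the projects described here.

```python
def refocus(views, disparity):
    """Synthesize a 1D image focused at the depth whose parallax is
    `disparity` pixels per unit of view offset.

    views -- dict mapping an integer view offset u to a list of intensities
    """
    width = len(next(iter(views.values())))
    total = [0.0] * width
    for u, view in views.items():
        shift = u * disparity
        for x in range(width):
            src = x + shift
            if 0 <= src < width:      # ignore samples that fall off the edge
                total[x] += view[src]
    return [value / len(views) for value in total]


# Toy scene: one bright point that moves 1 pixel per step in view offset.
views = {u: [1.0 if x == 5 + u else 0.0 for x in range(11)] for u in (-1, 0, 1)}

in_focus = refocus(views, 1)       # shifts cancel the parallax: a sharp point
out_of_focus = refocus(views, 0)   # no shift: the point spreads over 3 pixels
```

Sweeping `disparity` through a range of values moves the plane of focus back and forth through the scene, and the value that makes a given object sharpest doubles as an estimate of its depth.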

The microlens array is not the only approach to computing focus and depth of field. Anat Levin, Rob Fergus, Frédo Durand and William T. Freeman of MIT have recently described another technique, based on a "coded aperture." Again the idea is to modify a normal camera, but instead of inserting microlenses near the sensor, a patterned mask or filter is placed in the aperture of the main lens. The pattern consists of opaque and transparent areas. The simplest mask is a half-disk that blocks half the aperture. You might think such a screen would merely cast a shadow over half the image, but in fact rays from the entire scene reach the entire sensor area by passing through the open half of the lens. The half-occluded aperture does alter the blurring of out-of-focus objects, however, making it asymmetrical. Detecting this asymmetry provides a tool for correcting the focus. The ideal mask is not a simple half-disk but a pattern with openings of various sizes, shapes and orientations. It is called a coded aperture because it imposes a distinctive code or signature on the image data.

Further experiments with coded masks have been reported by Ashok Veeraraghavan, Ramesh Raskar and Amit Agrawal of the Mitsubishi Electric Research Laboratory and Ankit Mohan and Jack Tumblin of Northwestern University. They show that such masks are useful not only in the aperture of the lens but also near the sensor plane, where they perform the same function as the array of microlenses in Ng's light-field camera. The "dappling" of the image by the nearby mask encodes the directional information needed to recover the light field.

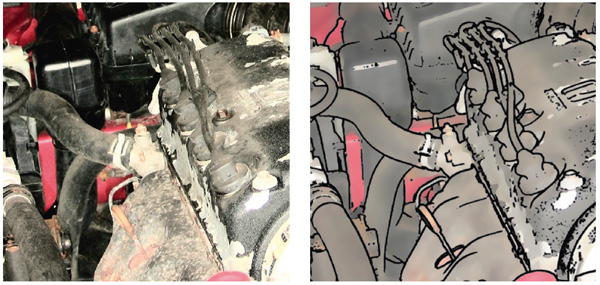

Patterns encoded in a different dimension—time rather than space—provide a strategy for coping with motion blur. Raskar, Agrawal and Tumblin have built a camera that removes the fuzzy streak created by an object that moves while the shutter is open.

Images courtesy of Ramesh Raskar.

Undoing motion blur would seem to be easier than correcting misfocus because motion blur is essentially one dimensional. You just gather up the pixels along the object's trajectory and separate the stationary background from the elements in motion. Sometimes this method works well, but ambiguities can spoil the results. When an object is greatly elongated by motion blur, the image may offer few clues to the object's true length or shape. Guessing wrong about these properties introduces unsightly artifacts.

A well-known trick for avoiding motion blur is stroboscopic lighting: a brief flash that freezes the action. Firing a rapid series of flashes gives information about the successive positions of a moving object. The trouble is, stroboscopic equipment is not always available or appropriate. Raskar and his colleagues have turned the technique inside out. Instead of flashing the light, they flutter the shutter. The camera's shutter is opened and closed several times in rapid succession, with the total amount of open time calculated to give the correct overall exposure. This technique converts one long smeared image into a sequence of several shorter ones, with boundaries that aid in reconstructing an unblurred version.

A further refinement is to make the flutter pattern nonuniform. Blinking the shutter at a fixed rate would create markers at regular intervals in the image, or in other words at just one spatial frequency. For inferring velocity, the most useful signal is one that maximizes the number of distinct spatial frequencies. As with a coded aperture, this coded exposure adds an identifiable signature to the image data.
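The advantage of an irregular pattern shows up in the frequency domain. A shutter held open continuously acts as a box filter, and a box filter wipes out certain spatial frequencies entirely, so no computation can bring them back. A well-chosen on/off pattern keeps every frequency above zero. The short pattern below is a toy invented for this sketch, not the sequence used by Raskar and his colleagues.

```python
import cmath


def spectrum_magnitudes(pattern, length):
    """Magnitude of the discrete Fourier transform of a shutter pattern,
    zero-padded to `length` samples."""
    padded = list(pattern) + [0] * (length - len(pattern))
    magnitudes = []
    for k in range(length):
        coefficient = sum(x * cmath.exp(-2j * cmath.pi * k * n / length)
                          for n, x in enumerate(padded))
        magnitudes.append(abs(coefficient))
    return magnitudes


# Both patterns keep the shutter open for three time slots in total.
steady = [1, 1, 1]        # conventional exposure: open, then closed
fluttered = [1, 0, 1, 1]  # hypothetical coded exposure

weakest_steady = min(spectrum_magnitudes(steady, 12))
weakest_fluttered = min(spectrum_magnitudes(fluttered, 12))
```

The weakest frequency of the steady exposure is zero to within rounding error, while the fluttered exposure never drops that low. Deblurring amounts to dividing by these magnitudes, so a zero means lost detail and a small value means amplified noise.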

Some cameras come equipped with a mechanical stabilizer designed to suppress a particular kind of motion blur—that caused by shaking of the camera itself. The shutter-flutter mechanism could handle this task as well.

Computational photography is currently a hot topic in computer graphics. (The IEEE magazine Computer devoted a special issue to the subject in 2006.) There's more going on than I have room to report, but I want to mention two more particularly adventurous ideas.

One of these projects comes from Raskar and several colleagues (Kar-Han Tan of Mitsubishi, Rogerio Feris and Matthew Turk of the University of California, Santa Barbara, and Jingyi Yu of MIT). They are experimenting with "non-photorealistic photography"—pictures that come out of the camera looking like drawings, diagrams or paintings.

For some purposes a hand-rendered illustration can be clearer and more informative than a photograph, but creating such artwork requires much labor, not to mention talent. Raskar's camera attempts to automate the process by detecting and emphasizing the features that give a scene its basic three-dimensional structure, most notably the edges of objects. Detecting edges is not always easy. Changes in color or texture can be mistaken for physical boundaries; to the computer, a wallpaper pattern can look like a hole in the wall. To resolve this visual ambiguity Raskar et al. exploit the fact that only physical edges cast shadows. They have equipped a camera with four flash units surrounding the lens. The flash units are fired sequentially, producing four images in which shadows delineate changes in contour. Software then accentuates these features, while other areas of the image are flattened and smoothed to suppress distracting detail. The result is reminiscent of a watercolor painting or a drawing with ink and wash.
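The shadow test can be sketched for a single row of pixels lit by two flashes instead of four. Taking the brightest reading at each pixel across all the flash images gives an approximately shadow-free reference; dividing each flash image by that reference cancels surface color and leaves only the shadows. The function name, the threshold and the toy data are assumptions made for this example.

```python
def shadow_masks(flash_images, threshold=0.5):
    """Mark the pixels that lie in shadow in each flash image.

    flash_images -- dict mapping a flash name to a list of intensities
    threshold    -- ratio below which a pixel counts as shadowed (assumed)
    """
    width = len(next(iter(flash_images.values())))
    # Brightest reading per pixel: lit by at least one flash, so nearly shadow-free.
    reference = [max(image[x] for image in flash_images.values())
                 for x in range(width)]
    masks = {}
    for name, image in flash_images.items():
        masks[name] = [reference[x] > 0 and image[x] / reference[x] < threshold
                       for x in range(width)]
    return masks


# Toy row: an object at pixels 2-3, and a wallpaper color change at pixel 6.
surface = [0.9, 0.9, 0.7, 0.7, 0.9, 0.9, 0.3, 0.3]
left_flash = list(surface)
left_flash[4] *= 0.1       # flash on the left throws a shadow to the right
right_flash = list(surface)
right_flash[1] *= 0.1      # flash on the right throws a shadow to the left

masks = shadow_masks({"left": left_flash, "right": right_flash})
```

Shadows turn up at pixels 4 and 1, flanking the object, while the change in wallpaper color at pixel 6 is ignored because it looks the same under both flashes.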

Another wild idea, called dual photography, comes from Hendrik P. A. Lensch, now of the Max-Planck-Institut für Informatik in Saarbrücken, working with Stephen R. Marschner of Cornell University and Pradeep Sen, Billy Chen, Gaurav Garg, Mark Horowitz and Marc Levoy of Stanford. Here's the setup: A camera is focused on a scene, which is illuminated from another angle by a single light source. Obviously, a photograph made in this configuration shows the scene from the camera's point of view. Remarkably, though, a little computation can also produce an image of the scene as it would appear if the camera and the light source swapped places. In other words, the camera creates a photograph that seems to be taken from a place where there is no camera.

This sounds like magic, or like seeing around corners, but the underlying principle is simple: Reflection is symmetrical. If the light rays proceeding from the source to the scene to the camera were reversed, they would follow exactly the same paths in the opposite direction and return to their point of origin. Thus if a camera can figure out where a ray came from, it can also calculate where the reversed ray would wind up.

Sadly, this research is not likely to produce a camera you can take outdoors to photograph a landscape as seen from the sun. For the trick to work, the light source has to be rather special, with individually addressable pixels. Lensch et al. have adapted a digital projector of the kind used for PowerPoint presentations. In the simplest algorithm, the projector's pixels are turned on one at a time, in order to measure the brightness of that pixel in "reversed light." Thus we return to the thought experiment where each of a million pixels in a display shines on each of a million photosites in a sensor. But now the experiment is done with hardware and software rather than thoughtware.
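In matrix terms, the one-pixel-at-a-time procedure measures a table T in which entry T[i][j] records how much light travels from projector pixel j to camera photosite i. The ordinary photograph is T applied to the projector pattern; because reflection is symmetrical, the swapped photograph is the transpose of T applied to a pattern of light imagined to come from the camera. The sketch below uses a made-up two-photosite, three-pixel scene, and all of its names are hypothetical.

```python
def make_scene(transport):
    """Stand-in for real hardware: returns a function that 'photographs'
    the scene under a given projector pattern. transport[i][j] is the
    fraction of light from projector pixel j reaching photosite i."""
    def photograph(pattern):
        return [sum(t * p for t, p in zip(row, pattern)) for row in transport]
    return photograph


def measure_columns(photograph, n_projector):
    """Light one projector pixel at a time; each photograph that results
    is one column of the transport table."""
    columns = []
    for j in range(n_projector):
        pattern = [1.0 if k == j else 0.0 for k in range(n_projector)]
        columns.append(photograph(pattern))
    return columns


def dual_image(columns, virtual_light):
    """Image seen from the projector's position when the scene is lit,
    in imagination, from the camera's position (transpose of T)."""
    return [sum(c * v for c, v in zip(column, virtual_light))
            for column in columns]


scene = make_scene([[0.5, 0.0, 0.1],
                    [0.0, 0.2, 0.3]])
columns = measure_columns(scene, 3)
swapped_view = dual_image(columns, [1.0, 1.0])
```

The swapped view has three pixels, one per projector pixel, even though the camera has only two photosites: the resolution of the dual image is set by the projector. The cost is one photograph per projector pixel, which is why the simplest algorithm is slow.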

Some of the innovations described here may never get out of the laboratory, and others are likely to be taken up only by Hollywood cinematographers. But a number of these ideas seem eminently practical. For example, the flutter shutter could be incorporated into a camera without extravagant expense. In the case of the microlens array for recording light fields, Ng is actively working to commercialize the technology. (See refocusimaging.com.)

If some of these techniques do catch on, I wonder how they will change the way we think about photography. "The camera never lies" was always a lie; and yet, despite a long history of airbrush fakery followed by Photoshop fraud, photography retains a special status as a documentary art, different from painting and other more obviously subjective and interpretive forms of visual expression. At the very least, people tend to assume that every photograph is a photograph of something—that it refers to some real-world scene.

Digital imagery has already altered the perception of photography. In the age of silver emulsions, one could think of a photograph as a continuum of tones or hues, but a digital image is a finite array of pixels, each displaying a color drawn from a discrete spectrum. It follows that a digital camera can produce only a finite number of distinguishable images. That number is enormous (perhaps 10^100,000,000), so you needn't worry that your camera will run out of pictures or start to repeat itself. Still, the mere thought that images are a finite resource can bring about a change in attitude.
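The size of that number is easy to estimate. If every pixel has a fixed number of channels and every channel a fixed number of bits, the count of possible images is 2 raised to the total number of bits, and its base-10 logarithm gives the exponent. The camera parameters below are assumptions chosen for illustration.

```python
import math


def log10_image_count(pixels, channels, bits_per_channel):
    """Base-10 logarithm of the number of distinct images a sensor
    format can represent: (2 ** bits) ** (pixels * channels)."""
    total_bits = pixels * channels * bits_per_channel
    return total_bits * math.log10(2)


# Hypothetical camera: 10 million pixels, 3 channels, 8 bits per channel.
exponent = log10_image_count(10_000_000, 3, 8)
```

For this hypothetical camera the exponent comes out in the tens of millions, the same ballpark as the figure quoted above; a few more megapixels or bits per channel push it past 100 million.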

Acknowledging that a photograph is a computed object—a product of algorithms—may work a further change. It takes us another step away from the naive notion of a photograph as frozen photons, caught in mid-flight. Neuroscientists have recognized that the faculty of vision resides more in the brain than in the eye; what we "see" is not a pattern on the retina but a world constructed through elaborate neural processing of such patterns. It seems the camera is evolving in the same direction, that the key elements are not photons and electrons, or even pixels, but higher-level structures that convey the meaning of an image.

© Brian Hayes
